The Story of Resilience, Part 3

Understanding Self-Adaptive Systems

What are the basic principles of self-adaptive systems? Understanding them is the prerequisite for bringing the concept of resilience to life. Part 3 of our series.


The 19th-century American psychologist William James coined the metaphor of our world being a “blooming buzzing confusion” [1]. The metaphor accurately describes how cognitive systems perceive our world: it is full of surprises and permanently changing. In his book “Conscious Mind, Resonant Brain” [2], Stephen Grossberg therefore points out that “Our brains are designed to autonomously adapt to a changing world.” And that’s how we must design cognitive systems, too.

While it would be presumptuous to think that we could even come close to imitating this ability of the human brain, it is a pivotal inspiration for how to engineer resilient systems. The system’s ability to continuously adapt to unexpected situations and to changing contexts is a key for cognitive systems such as driverless cars to eventually succeed.

We define resilience as “optimizing utility whilst preserving safety in uncertain contexts”. If we split that definition into two parts, the first part is “optimizing utility in uncertain contexts”. That is in fact pretty much the idea of a self-adaptive system, which is defined as “a system that can handle changes and uncertainties in its environment, the system itself, and its goals autonomously” [3]. The real challenge, however, lies in the second part of the definition, namely “whilst preserving safety”, which requires no less than a paradigm shift in safety assurance.

In this third part of the series, I’ll therefore give a brief introduction to the basic principles of self-adaptive systems. The next part will then conclude the series by adding adaptive safety management as the magic ingredient that takes us from self-adaptivity to resilience.

The architecture of self-adaptive systems

We have to acknowledge that we cannot predict a system’s context and that we don’t have all necessary data available at design time. There are just too many uncertainties and the system’s environment is too dynamic. In fact, there is simply no other way than enabling systems to resolve uncertainties at runtime and to adapt themselves in order to optimize their utility dynamically. Hence, it is not surprising that many systems are in some way self-adaptive already today.

However, the adaptation behavior is usually implemented implicitly as an indistinguishable part of the functionality. Often, one only finds some flags at the code level used in a complex, nested phalanx of if-then-else statements. But if we know one thing after twenty years of research on self-adaptive systems, it is this: such hard-coded adaptation mechanisms won’t work. Why should one think that hard-coded rules scattered throughout the code would be flexible enough to handle the uncertainty? Instead, “hard-coded behaviors will give way to behaviors expressed as high-level objectives such as ‘optimize this utility function’” [4]. And what reason is there to believe that we could master the quality of a system in which implicit if-then-else-style adaptivity leads to far more possible system configurations than there are atoms in the universe? Without a complex reverse engineering effort, one would not even know the system’s configuration space.

© Fraunhofer IKS

Prof. Dr.-Ing. habil. Mario Trapp: "We have to acknowledge that we cannot predict a system’s context and that we don’t have all necessary data available at design time."

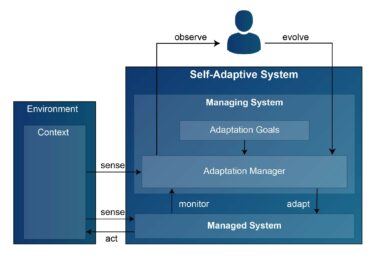

Therefore, there is one major design principle, as shown in Figure 1: A self-adaptive system always separates its actual functionality from its adaptation behavior into a managed system and a managing system, respectively. Otherwise, you will neither achieve the necessary flexibility nor, even more importantly, be able to master quality assurance and thus safety.
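To make the separation concrete, here is a minimal Python sketch. All names (`PathPlanner`, `AdaptationManager`, the `visibility_m` context value and its 50 m threshold) are illustrative assumptions and do not come from Figure 1. The managed system contains only domain functionality plus a reconfiguration interface, while every adaptation decision lives in the managing system.

```python
from dataclasses import dataclass


@dataclass
class PathPlanner:
    """Managed system: domain functionality only, no adaptation logic."""
    max_speed_kmh: float = 50.0

    def plan(self, distance_km: float) -> float:
        """Return the travel time in hours at the configured speed."""
        return distance_km / self.max_speed_kmh

    def reconfigure(self, max_speed_kmh: float) -> None:
        """Interface through which the managing system adapts this component."""
        self.max_speed_kmh = max_speed_kmh


class AdaptationManager:
    """Managing system: owns all adaptation decisions."""

    def __init__(self, managed: PathPlanner) -> None:
        self.managed = managed

    def adapt(self, visibility_m: float) -> None:
        # Hypothetical policy: slow down when visibility is poor.
        target = 30.0 if visibility_m < 50.0 else 50.0
        if target != self.managed.max_speed_kmh:
            self.managed.reconfigure(target)


planner = PathPlanner()
manager = AdaptationManager(planner)
manager.adapt(visibility_m=20.0)
print(planner.max_speed_kmh)
```

Because `PathPlanner` never inspects the context itself, the adaptation policy can be replaced or evolved without touching the functional code.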

Moreover, Figure 1 shows a further important aspect of self-adaptive systems. We have to acknowledge that even a self-adaptive system will not be able to resolve every situation, at least not in an optimal way. There will always be unknown unknowns that the system cannot handle autonomously. That’s why we need an additional macro-cycle, which includes a human-in-the-loop. Whenever the system fails to find an optimized adaptation (or any solution at all), or even as a permanent improvement cycle, we can gather information about the adaptation performance and feed it back to engineers, who then evolve the adaptation goals and the adaptation manager.

This is very much in line with the popular idea of DevOps or continuous deployment, or whatever you would like to call it. But it has two important advantages: First, an adaptive system already tries to adapt itself as autonomously as possible, thus reducing the effort for human engineers. Second, if it fails, it can tell the engineers at a high semantic level in which situation it failed and for which reasons. This enables the engineers to understand the issue much more quickly and deeply than a mere data dump would, such as a video stream of the camera-based object detection that failed. Hence, engineers can provide better improvements in less time, at lower cost, and at higher quality.
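What such a high-level report could look like is sketched below. The structure and every field name (`AdaptationFailureReport`, `unmet_goal`, `rejected`) are hypothetical and serve only to contrast a semantic description with a raw data dump.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptationFailureReport:
    """Semantic description of a situation the system could not resolve."""
    context: dict
    unmet_goal: str
    rejected: dict = field(default_factory=dict)  # configuration -> reason

    def summary(self) -> str:
        reasons = "; ".join(f"{name}: {why}" for name, why in self.rejected.items())
        return (
            f"Could not satisfy '{self.unmet_goal}' "
            f"in context {self.context}. Rejected: {reasons}"
        )


report = AdaptationFailureReport(
    context={"visibility_m": 15, "road": "tunnel exit"},
    unmet_goal="detect pedestrians within 40 m",
    rejected={
        "camera_only": "glare exceeds the modeled operating range",
        "camera_radar": "radar self-test failed",
    },
)
print(report.summary())
```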

© Fraunhofer IKS

Figure 1: Basic Architecture of self-adaptive systems based upon the concepts introduced in [3].

The adaptation management cycle

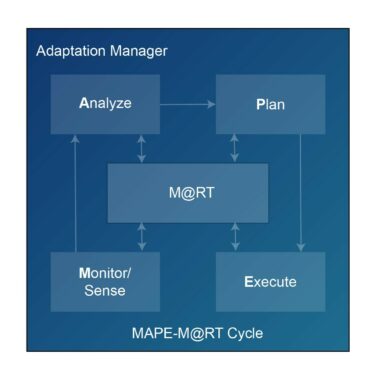

Nonetheless, the primary goal of a self-adaptive system is to adapt autonomously to as many situations as possible, i.e., without human interaction. To this end, the adaptation manager realizes a kind of closed-loop control as shown in Figure 2: the manager monitors the current state of the managed system and senses its external context in order to analyze whether it is necessary or reasonable to adapt the managed system and to derive possible configurations. If the managing system concludes that it is reasonable to adapt the managed system in order to (better) fulfill its adaptation goals, it plans the adaptation and finally executes it. By continuously monitoring the managed system, the adaptation manager can adjust the adaptation and even learn how to better adapt the managed system.

All of these steps require formalized knowledge that enables the system to become aware of its goals, its context, and its own state, and to reason about possible ways to optimize its goal achievement in the given situation. This way of realizing the adaptation management is referred to as a MAPE-K cycle [4]: monitor, analyze, plan, and execute over shared knowledge. This approach helps us to shift activities that used to be performed by engineers at design time into runtime, to be performed by the system itself. As the managing system optimizes the managed system at runtime, it can resolve many uncertainties based on data collected at runtime. Instead of finding a general solution for every conceivable or inconceivable context, it is sufficient to find an appropriate solution for the given situation, one at a time.
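The four phases and their shared knowledge can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the perception component, its two modes, and the 50 m visibility threshold are invented for the example and are not part of [4].

```python
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """The K in MAPE-K: goals, context, and state shared by all four phases."""
    min_camera_visibility_m: float = 50.0  # adaptation goal (assumed threshold)
    visibility_m: float = 100.0            # sensed external context
    active_mode: str = "camera_only"       # monitored state of the managed system
    log: list = field(default_factory=list)


class Perception:
    """Managed system (hypothetical): object detection with two modes."""

    def __init__(self) -> None:
        self.mode = "camera_only"


class AdaptationManager:
    """Managing system: one pass through monitor, analyze, plan, execute."""

    def __init__(self, managed: Perception, knowledge: Knowledge) -> None:
        self.managed = managed
        self.k = knowledge

    def monitor(self, visibility_m: float) -> None:
        self.k.visibility_m = visibility_m
        self.k.active_mode = self.managed.mode

    def analyze(self) -> bool:
        """Return True if the active mode no longer fits the context."""
        return self.k.active_mode != self._best_mode()

    def plan(self) -> str:
        """Choose the target mode for the current context."""
        return self._best_mode()

    def execute(self, mode: str) -> None:
        self.managed.mode = mode
        self.k.log.append((self.k.visibility_m, mode))

    def step(self, visibility_m: float) -> None:
        self.monitor(visibility_m)
        if self.analyze():
            self.execute(self.plan())

    def _best_mode(self) -> str:
        if self.k.visibility_m < self.k.min_camera_visibility_m:
            return "camera_radar"
        return "camera_only"


perception = Perception()
manager = AdaptationManager(perception, Knowledge())
for visibility in (120.0, 30.0, 25.0, 80.0):
    manager.step(visibility)
print(manager.k.log)
```

The loop reconfigures the managed system only when the analysis finds a mismatch, so the log records two adaptations for the four sensed values.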

Prof. Dr.-Ing. habil. Mario Trapp, Executive Director of the Fraunhofer Institute for Cognitive Systems IKS: "Self-Adaptivity is the foundation of any resilient system."

This type of optimization is comparable to the optimizations that we as engineers used to do at design time. Hence, it seems reasonable to define the required knowledge based on concepts similar to the design models that we use as engineers. That idea of expanding concepts of model-based design into runtime is called models@run.time (M@RT). Such a model is defined as “a causally connected self-representation of the associated system that emphasizes the structure, behavior, or goals of the system from a problem space perspective” [5]. That means we enable the system to reason about its context, its own state, etc. in terms of requirements, architecture, context models, etc., i.e., in the same way as we as engineers would.

To this end, the original idea of the MAPE-K cycle was refined to a MAPE-M@RT cycle [6] by using models@run.time as an appropriate representation of knowledge. Particularly for resilience, this is an important ingredient, as we will see in the next part of this series: it enables us to use “safety models @ run.time (SM@RT)” so that the system reasons about safety at runtime based on models such as safety cases, safety concepts, or safety analyses. This means that it uses the same principles and concepts as a safety engineer to draw conclusions about its safety. In combination with the deterministic and traceable nature of such models, we get an ideal foundation for assuring safety in spite of dynamic adaptation.
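The phrase “causally connected” can be illustrated with a small sketch: the model always reflects the running system, and a valid change to the model changes the system. The classes `Planner` and `PlannerModel` and the list of allowed algorithms are assumptions made for this example; real models@run.time are far richer (requirements, architecture, context, safety cases).

```python
class Planner:
    """Managed component (hypothetical) with a switchable algorithm."""

    def __init__(self) -> None:
        self.algorithm = "a_star"


class PlannerModel:
    """Runtime model that stays causally connected to a Planner."""

    ALLOWED = ("a_star", "rrt")  # architecture-level knowledge (assumed)

    def __init__(self, planner: Planner) -> None:
        self._planner = planner

    @property
    def algorithm(self) -> str:
        # System -> model: the model always reflects the running system.
        return self._planner.algorithm

    @algorithm.setter
    def algorithm(self, value: str) -> None:
        # Model -> system: a valid change to the model reconfigures the system.
        if value not in self.ALLOWED:
            raise ValueError(f"{value!r} is not part of the modeled architecture")
        self._planner.algorithm = value


planner = Planner()
model = PlannerModel(planner)
model.algorithm = "rrt"
print(planner.algorithm)
```

Because the setter rejects anything outside the modeled architecture, the managing system can only ever request configurations that the model knows how to reason about.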

© Fraunhofer IKS

Figure 2: Adaptation as a closed-loop control using a MAPE-K [4] / MAPE-M@RT [6] cycle

The concept of mode-management

In principle, the adaptation manager could adapt the managed system in arbitrary, highly flexible ways. Particularly for safety-relevant systems, however, we usually restrict a system’s adaptivity to reconfiguration. That means that the system’s components might have different modes. A path planning component, for example, might offer several alternative path planning algorithms, each with a set of parameters such as maximum speed, minimum distance, etc. As a related alternative, we might consider a robot using a behavior tree with variation points and parameters that are resolved at runtime to create the actual behavior step by step dynamically.

Either way, we restrict the adaptivity to resolving predefined variation points at runtime. We do not have enough information available at design time to resolve all variation points, and often there is no one-variant-fits-all solution anyway. At runtime, however, the necessary information is available, so that the managing system can resolve the inherent variability in such a way that it optimizes the managed system’s utility in a given context.
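As a rough sketch of what predefined variation points could look like, the following Python snippet declares the modes of a path planning component together with the allowed values of their parameters and enumerates the resulting configurations. The mode names and parameter values are invented for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Mode:
    """One mode of a component plus the allowed values of its parameters."""
    name: str
    parameters: dict  # parameter name -> tuple of allowed values


# Hypothetical variation points of a path planning component.
PATH_PLANNER_MODES = (
    Mode("a_star", {"max_speed_kmh": (30, 50), "min_distance_m": (2, 5)}),
    Mode("rrt", {"max_speed_kmh": (30,), "min_distance_m": (5, 10)}),
)


def configurations(modes):
    """Yield every (mode name, parameter assignment) the definitions allow."""
    for mode in modes:
        names = sorted(mode.parameters)
        for values in product(*(mode.parameters[n] for n in names)):
            yield mode.name, dict(zip(names, values))


configs = list(configurations(PATH_PLANNER_MODES))
print(len(configs))
```

Even this tiny definition yields six configurations for a single component, which hints at how quickly the space grows once several components are combined.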

Even though this might seem rather restrictive, it already opens up a huge configuration space allowing very fine-grained adaptations. And of course, as always, there is an engineering trade-off, in this case between flexibility on the one hand and complexity, and thus cost, on the other. Some of you might now object that mode-management does not sound very different from the if-then-else style. However, there are significant differences: First, in mode-management we “only” define which modes and parameters are allowed under which constraints. And the models provide all the information that the system needs to derive the influence of activating a specific mode on the managed system’s utility (and safety). Thus, these definitions span the valid adaptation space.

Within the mode definitions, however, we do not define rules for when to choose which modes with which parameter values. This task is part of the adaptation manager and is realized in a flexible way by optimizing utility at runtime. This leads to much higher flexibility than hard-coded rules (a) as it searches for system-level optima within the adaptation space at runtime, (b) as it can learn over time, and (c) as engineers can evolve the adaptation behavior at a central point of the architecture. Second, this provides us with an explicit definition of the adaptation space. Hence, we can assure that the system will never enter an inconsistent configuration and will not run into livelocks or deadlocks, to name but a few possible quality issues. Third, it is always a design goal to reduce the adaptation space to those configurations that have the highest influence on utility, as any additional configuration means additional complexity, additional implementation effort, and additional quality assurance effort. Any support for getting rid of unnecessary configurations right from the beginning is therefore an invaluable asset and a key to success.
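The difference to hard-coded rules can be sketched as follows: the mode definitions and constraints only span the valid adaptation space, and the choice within it is made by maximizing a utility function at runtime. The configurations, the constraints, and the toy utility below are assumptions chosen for illustration, not a recommended utility model.

```python
from typing import Optional

# Explicit adaptation space: every configuration the system may ever take.
ADAPTATION_SPACE = [
    {"algorithm": "a_star", "max_speed_kmh": 30},
    {"algorithm": "a_star", "max_speed_kmh": 50},
    {"algorithm": "rrt", "max_speed_kmh": 30},
]

# Assumed relative compute cost per algorithm, used only for illustration.
COMPUTE_COST = {"a_star": 0.0, "rrt": 5.0}


def is_valid(config: dict, context: dict) -> bool:
    """Constraints that define which configurations are allowed in a context."""
    if not context["sensors_ok"]:
        return False
    if config["max_speed_kmh"] > 30 and context["visibility_m"] < 50:
        return False
    return True


def utility(config: dict) -> float:
    """Toy utility: faster is better, compute cost is penalized."""
    return config["max_speed_kmh"] - COMPUTE_COST[config["algorithm"]]


def select_configuration(context: dict) -> Optional[dict]:
    """Return the valid configuration with the highest utility, or None."""
    candidates = [c for c in ADAPTATION_SPACE if is_valid(c, context)]
    if not candidates:
        return None  # nothing valid: a case for the human-in-the-loop cycle
    return max(candidates, key=utility)


print(select_configuration({"sensors_ok": True, "visibility_m": 20}))
print(select_configuration({"sensors_ok": True, "visibility_m": 100}))
print(select_configuration({"sensors_ok": False, "visibility_m": 100}))
```

Note that no rule states "in fog, use mode X". Changing the utility function or a constraint at this one central place changes the behavior in every context, and the explicit list makes the whole space available for quality assurance.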

Summary

Self-adaptivity is the foundation of any resilient system. There are four basic principles that you should consider whenever you develop an adaptive system: First, in order to get the necessary flexibility whilst at the same time having a chance to master the resulting complexity, it is crucial to use an architecture that separates the domain functionality in a managed system from the adaptation behavior in a managing system. Second, it is reasonable to realize the adaptation management as a closed-loop control. To this end, I recommend using models at runtime (M@RT) as a knowledge base enabling the systems to reason about their context, their own state, and their goal achievement, and thus to adapt and optimize themselves. Third, it is often completely sufficient and even advisable to reduce the adaptivity problem to a mode-management problem, which still provides sufficient flexibility whilst at the same time providing a sound basis for quality assurance. Fourth, we have to acknowledge that adaptive systems, too, have limitations and will encounter situations that they can’t handle. Therefore, it is important to design an architecture that includes an evolution loop with a human-in-the-loop right from the beginning.

References

[1] W. James, The principles of psychology. 1890.

[2] S. Grossberg, Conscious mind, resonant brain: How each brain makes a mind. Oxford University Press, 2021.

[3] D. Weyns, An introduction to self-adaptive systems: A contemporary software engineering perspective. Wiley, 2020.

[4] J. O. Kephart and D. M. Chess, “The vision of autonomic computing”, Computer, vol. 36, no. 1, pp. 41–50, 2003, doi: 10.1109/mc.2003.1160055.

[5] G. Blair, N. Bencomo, and R. B. France, “Models@ run.time”, Computer, vol. 42, no. 10, pp. 22–27, 2009, doi: 10.1109/mc.2009.326.

[6] B. H. Cheng et al., “Using models at runtime to address assurance for self-adaptive systems”, in Models@run.time, Springer, 2014, pp. 101–136.

Read the fourth part here: The Story of Resilience, Part 4 – Adaptive Safety Control – why it's the icing on the cake