
The Story of Resilience, Part 4

Adaptive Safety Control – why it's the icing on the cake

Safety is a property of a system and its environment. That's why predicting a system's context is so important to safety engineers. However, we must acknowledge that it is impossible to sufficiently predict and specify a system's context when it must operate outside of a controlled environment. In the previous parts of this series, we've seen how we can use self-adaptivity to build systems for uncertain, dynamic contexts. In this fourth and final part, we add the missing ingredient by taking safety engineering to the next level.

© iStock/lindsay imagery


We define resilience as "optimizing utility whilst preserving safety in uncertain contexts". With self-adaptive architectures, we already have a solid foundation for "optimizing utility in uncertain contexts". The key challenge, however, lies in the short subclause "whilst preserving safety". To meet this challenge, we must sacrifice a sacred paradigm and accept that the safety control of intelligent functionality requires intelligent safety mechanisms. In other words, functional intelligence requires safety intelligence. Within the scope of this blog series, the latter means the ability to adapt to uncertain contexts in order to operate in an open, dynamic environment. Here we go: welcome to adaptive safety control.

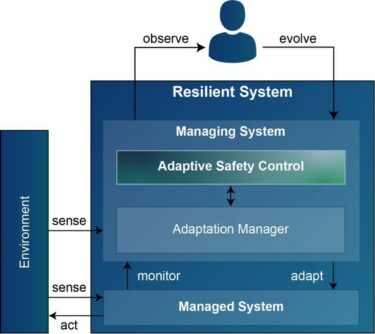

Adaptive Safety Control is not magic. As shown in Figure 1, we start from the architecture of a self-adaptive system as introduced in the previous part of this series. While the adaptation manager is responsible for the utility-focused adaptivity of the system, we add an adaptive safety control that ensures that the residual risk of the managed system always remains below the acceptable risk. In practice, it is not always necessary or reasonable to implement it as a separate element, but separation of concerns is advisable here as well.

Figure 1: Extending a self-adaptive system (based on [1]) with Adaptive Safety Control to yield a resilient architecture

As we all know, risk depends on the context. It depends on exposure, controllability, and severity, which may be completely different in different situations. Moreover, the mechanisms used to control safety may differ from situation to situation. In conventional safety engineering, we consider failures occurring in different situations, assess the risk of all resulting hazardous events, and the worst case then determines the rigor, i.e., the integrity level required to mitigate the causing failure. At runtime, however, we do not need to consider every possible or impossible operational situation; we can focus on the current one. We can assess the risk of the current situation, adapt the safety concept to sufficiently mitigate that risk in the given situation, and finally adapt the system architecture to implement that safety concept. Considering the concrete case instead of the worst case opens up the freedom we need to optimize utility without violating safety.
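A small sketch illustrates the difference between worst-case reasoning and reasoning about the current situation. It assumes ISO 26262-style classes for severity (S1 to S3), exposure (E1 to E4), and controllability (C1 to C3). For these classes, the standard's ASIL determination table happens to coincide with a simple sum rule. The two situations and their classifications are invented for illustration and are not taken from any real hazard analysis.

```python
from dataclasses import dataclass

# For S1-S3, E1-E4 and C1-C3, the ISO 26262 ASIL table equals this sum rule;
# any sum of 6 or less yields QM (no ASIL).
ASIL_BY_SUM = {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}
ORDER = ["QM", "ASIL A", "ASIL B", "ASIL C", "ASIL D"]


@dataclass(frozen=True)
class Situation:
    name: str
    severity: int         # S1..S3
    exposure: int         # E1..E4
    controllability: int  # C1..C3


def asil(s: Situation) -> str:
    """Integrity level for one hazardous event in one situation."""
    return ASIL_BY_SUM.get(s.severity + s.exposure + s.controllability, "QM")


def design_time_level(situations: list[Situation]) -> str:
    """Conventional approach: the worst case over all situations decides."""
    return max((asil(s) for s in situations), key=ORDER.index)


def runtime_level(current: Situation) -> str:
    """Runtime view: only the situation the system is actually in counts."""
    return asil(current)


# Hypothetical classifications of one hazardous failure in two situations.
situations = [
    Situation("highway, dense traffic", severity=3, exposure=4, controllability=3),
    Situation("depot, walking speed", severity=1, exposure=4, controllability=1),
]

print(design_time_level(situations))  # ASIL D
print(runtime_level(situations[1]))   # QM
```

The classification table remains an engineering artifact that is fixed at development time. The system merely looks up the row for the situation it is currently in.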

Be SM@RT: Safety Models at Runtime

To have any chance of assuring such an approach, this kind of runtime reasoning must be based on safety models at runtime (SM@RT). This leads to the actual question: What can such safety models look like? The answer is far from easy, since fundamentally different approaches exist. If you want to explore the topic in more detail and start browsing the state of research, you’ll initially reap more confusion than insight. You’ll find as many different terms as approaches. At one end of the spectrum are approaches that monitor physical properties, such as the Responsibility-Sensitive Safety (RSS) approach [2]. At the other end are sophisticated approaches that shift established safety models, such as risk models and safety analysis models, into runtime [3][4]. And you’ll find countless shades of gray in between. For this reason, I would like to first take a step back to understand the big picture.

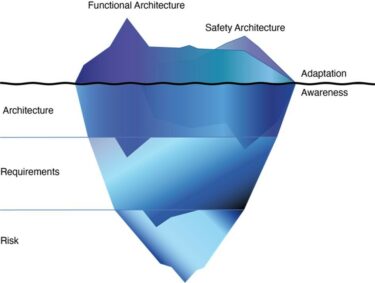

Let's start by roughly recapitulating the basic, product-focused steps of safety engineering. We start with a hazard analysis and risk assessment and derive safety goals, which are refined into safety requirements in a safety concept. Then we build an architecture that implements those requirements. Adaptive safety control must reflect these basic steps as well. We can use the iceberg model shown in Figure 2 to classify the available approaches. The visible part of the iceberg represents the adaptations at the architectural level. This is the final step that implements an adaptation, and it is what users or other systems can actually experience. As mentioned in the previous part of this series, adaptation means that the system uses different components, sets components to different configurations (e.g., to switch between different algorithms), and/or adjusts parameter values to fine-tune component behavior. These adaptations can affect the functional architecture (the main functionality) and/or the safety architecture (the safety mechanisms, such as safeguards). To this end, the managed system's architecture must provide an interface through which the managing system can configure it. It must also provide an interface that enables the managing system to monitor the managed system's current state.

Figure 2: Classification Scheme for Adaptive Safety Control Approaches

The real core, the essential substance of adaptation, however, lies beneath the surface of the water. Just as a developer cannot implement a system safely without first assessing risks, deriving requirements and specifying a (safety) architecture, the system cannot adapt itself without first performing analogous analyses.

Architecture-Level Awareness

At a minimum, this requires awareness at the architecture level. Adaptation is impossible without the system at least knowing which components can be used under which conditions in which configuration and with which parameters, so that the overall system adopts a valid overall configuration for the current context. In this case, the risk analysis and the derivation of the safety concept are carried out purely at development time and are not touched again at runtime. However, instead of a single architecture, several variants are defined in the sense of mode management, as discussed in the previous part. The conditions under which each variant is to be selected are specified as part of the architecture model, which is the basis for the system’s analysis at runtime. This has the great advantage that the risk analysis and the safety concept, essential parts of the safety engineering process, remain the responsibility of human safety managers.
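The following minimal sketch shows this kind of mode management. The variants and their selection conditions are fixed in an architecture model at development time, and the system only evaluates them at runtime. The variant names, the context fields, and the 80 km/h threshold are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Context:
    yaw_sensor_calibrated: bool
    speed_kmh: float


@dataclass(frozen=True)
class Variant:
    name: str
    condition: Callable[[Context], bool]  # fixed at development time


# Hypothetical architecture model, ordered from highest utility to most
# degraded. Every variant is covered by the human-made safety concept; at
# runtime the system only chooses among them and never invents new ones.
VARIANTS = (
    Variant("full_function", lambda c: c.yaw_sensor_calibrated),
    Variant("estimator_degraded", lambda c: c.speed_kmh <= 80.0),
    Variant("minimal_risk_maneuver", lambda c: True),  # always-valid fallback
)


def select_variant(context: Context) -> str:
    """Runtime analysis: the first variant whose condition holds wins."""
    for variant in VARIANTS:
        if variant.condition(context):
            return variant.name
    raise RuntimeError("architecture model lacks a fallback variant")


print(select_variant(Context(yaw_sensor_calibrated=False, speed_kmh=50.0)))  # estimator_degraded
```

Ordering the variants by utility and ending with an unconditional fallback guarantees that a valid configuration always exists.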

Even when limited to the architectural level, this awareness already offers huge potential. For example, if a yaw rate sensor in an automotive advanced driver assistance system loses its calibration due to a temperature drop in the car's environment (e.g., when the car enters a cold, foggy valley), it must be recalibrated. Until then, the configuration is changed to replace the sensor with an estimator. The estimated yaw rate is of lower quality, so the dependent components must adapt accordingly, which can trigger a chain reaction. They can adapt by changing their configuration (e.g., switching to a different algorithm) and/or by adjusting the parameters of these algorithms. Another example of this kind of graceful degradation is an ML-based vision system that detects high uncertainty or an out-of-distribution input. As a consequence, the system must adapt to use different sensors, adapt the fusion algorithms, adapt the planning algorithm, etc.
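The chain reaction in the yaw rate example can be sketched as quality propagation along a chain of dependent components. In this minimal sketch, each configuration states the input quality it needs and the output quality it then provides. The component names, configurations, and the 0 to 3 quality scale are invented for illustration.

```python
# Hypothetical quality scale: 3 = best, 0 = unusable. For each component,
# configurations are listed best-first as
# (name, minimum input quality required, output quality provided).
PIPELINE = [
    ("trajectory_prediction",
     [("model_based", 3, 3), ("conservative", 2, 2), ("constant_velocity", 1, 1)]),
    ("lane_keeping",
     [("dynamic", 3, 3), ("comfort_limited", 2, 2), ("hands_on_required", 1, 1)]),
]

# Quality of the yaw rate signal depending on its source (assumed values).
YAW_RATE_QUALITY = {"sensor": 3, "estimator": 2, "unavailable": 0}


def propagate(source: str) -> dict[str, str]:
    """Let each downstream component adapt to the quality of its input."""
    quality = YAW_RATE_QUALITY[source]
    chosen: dict[str, str] = {}
    for component, configurations in PIPELINE:
        for name, min_input, output in configurations:
            if quality >= min_input:
                chosen[component] = name
                quality = output  # what the next component will receive
                break
        else:  # no configuration accepts this input quality
            chosen[component] = "deactivated"
            quality = 0
    return chosen


print(propagate("sensor"))
print(propagate("estimator"))
```

Switching the yaw rate source from `"sensor"` to `"estimator"` moves both downstream components to their next-best configuration without any component-specific special case.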

Prof. Dr. Mario Trapp: "Adaptation is impossible without the system at least knowing which components can be used under which conditions in which configuration and with which parameters, so that the overall system adopts a valid overall configuration for the current context."

Another valuable low-hanging fruit of adaptive safety control at the architectural level is the implementation of context-aware safeguards. Safeguards check invariants that must hold for the system. Approaches such as the Responsibility-Sensitive Safety (RSS) approach [2] for automated driving are often called "dynamic safety assurance" or the like. Even so, they are still conventional safeguards without any ability to adapt to the context. In fact, such safeguards are a more sophisticated variant of the light barriers used to safeguard machines. And just like light barriers, approaches such as RSS are too inflexible, because the invariants the safeguards check depend on the current environmental conditions, i.e., on the context.

That's why approaches like RSS don't work well in open-context scenarios. For example, the maximum deceleration, an important monitoring parameter of RSS, should take different values on dry roads, on wet roads with puddles in heavy rain, and on snow. This lets the monitor avoid overly conservative worst-case assumptions without becoming overly optimistic. That's why there are extensions such as risk-aware RSS [5] and alternatives to RSS such as fuzzy safety surrogate metrics [6]. Often, however, these approaches do not explicitly separate the managed system (the safeguard) from the managing system (the adaptation logic). Some additional work is therefore needed to obtain an adaptive safety control that avoids the drawbacks of implicit adaptation mentioned in the previous parts of this series. Nonetheless, this way of adapting a safeguard can be realized at the architectural level.
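The following minimal sketch makes that separation explicit. The managed system is a safeguard implementing the longitudinal safe-distance rule of RSS as defined in [2]. A separate managing function selects the safeguard's parameters by road condition. The parameter sets and the fallback are hypothetical placeholders, not validated values, and lateral rules and other RSS details are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RssParameters:
    response_time_s: float   # rho
    max_accel: float         # a_max,accel of the rear vehicle during rho [m/s^2]
    min_brake: float         # a_min,brake the rear vehicle guarantees [m/s^2]
    max_brake_front: float   # a_max,brake the front vehicle may apply [m/s^2]


def rss_min_distance(v_rear: float, v_front: float, p: RssParameters) -> float:
    """Managed system: longitudinal safe distance of RSS [2], speeds in m/s."""
    rho = p.response_time_s
    v_after_response = v_rear + rho * p.max_accel
    d = (v_rear * rho
         + 0.5 * p.max_accel * rho ** 2
         + v_after_response ** 2 / (2 * p.min_brake)
         - v_front ** 2 / (2 * p.max_brake_front))
    return max(d, 0.0)


def is_safe(gap_m: float, v_rear: float, v_front: float, p: RssParameters) -> bool:
    return gap_m >= rss_min_distance(v_rear, v_front, p)


# Managing system: engineer-defined mapping from road condition to safeguard
# parameters. All numbers are placeholders, NOT validated values.
PARAMETERS_BY_ROAD = {
    "dry": RssParameters(0.5, 2.0, 4.0, 8.0),
    "wet": RssParameters(0.5, 2.0, 2.5, 5.0),
    "snow": RssParameters(0.5, 2.0, 1.5, 3.0),
}
# Context-free worst case (weakest own braking, hardest front braking), i.e.,
# what a non-adaptive safeguard would have to assume everywhere.
FALLBACK = RssParameters(0.5, 2.0, 1.5, 8.0)


def adapt_safeguard(road_condition: str) -> RssParameters:
    """Unrecognized conditions fall back to the worst case."""
    return PARAMETERS_BY_ROAD.get(road_condition, FALLBACK)


print(is_safe(50.0, 20.0, 20.0, adapt_safeguard("dry")))  # True
print(is_safe(50.0, 20.0, 20.0, adapt_safeguard("wet")))  # False
```

Consider a 50 m gap with both vehicles at 20 m/s. With these placeholder values, the gap passes the check on a dry road (minimum about 40.4 m) but is flagged on a wet road (about 58.5 m). The context-free fallback would demand about 132 m.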

All these use cases can already be realized at the architecture level without shifting too much responsibility from humans to the system. At the same time, such adaptation approaches are comparatively easy to implement. A common way to realize this kind of awareness at the architectural level is to shift modular architectural models, such as contract-based models, to runtime [3]. To this end, we can specify a contract for each possible configuration of a component. The conditions that must be satisfied to adopt that configuration are defined as assumptions in the contract. Using the guarantees specified in the contract, we can define the impact of adopting a configuration on the component's output. Sophisticated contracts are not limited to assumptions and guarantees about the component's inputs and outputs; they can also model assumptions about the environment. This makes it possible to specify that certain configurations are only valid under certain environmental conditions, such as dry roads or a maximum speed.
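Contract-based selection of this kind can be sketched as follows. Each configuration of a component carries a contract whose assumption covers both the input quality and the environment, and whose guarantee states the resulting output quality. At runtime, the configuration with the strongest guarantee among those whose assumptions hold is adopted. This is a minimal sketch; the configurations, thresholds, and quality values are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Environment:
    road: str          # e.g., "dry" or "wet"
    speed_kmh: float


@dataclass(frozen=True)
class Contract:
    configuration: str
    # Assumption over the input quality (0..1) and the environment.
    assumption: Callable[[float, Environment], bool]
    # Guarantee: output quality (0..1) provided whenever the assumption holds.
    output_quality: float


# Hypothetical contracts for one component, one per configuration.
CONTRACTS = (
    Contract("high_precision",
             lambda q, env: q >= 0.9 and env.road == "dry" and env.speed_kmh <= 100.0, 0.95),
    Contract("robust", lambda q, env: q >= 0.6 and env.speed_kmh <= 130.0, 0.8),
    Contract("degraded", lambda q, env: True, 0.3),
)


def select_configuration(input_quality: float, env: Environment) -> Contract:
    """Among contracts whose assumptions hold, take the strongest guarantee."""
    valid = [c for c in CONTRACTS if c.assumption(input_quality, env)]
    return max(valid, key=lambda c: c.output_quality)


print(select_configuration(0.95, Environment("wet", 80.0)).configuration)  # robust
```

Feeding the selected contract's `output_quality` into the assumptions of the next component's contracts is one way to compute the chain reaction described earlier.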

Requirements-Level Awareness

In some cases, however, it is not sufficient to limit the analysis to the architecture; a deeper awareness at the level of safety requirements is needed. It is sometimes difficult to determine exactly where the architecture level ends and the requirements level begins, since component specifications are also a type of requirement. But as a simple rule of thumb: As soon as the system adapts elements of the requirements in your safety concept at runtime, a corresponding model at the requirements level must also be available to the system at runtime.

Taking the previous example of context-aware safeguards, the monitored invariants are nothing more than formalized requirements that are checked at runtime. For example, the requirement to avoid a collision with the vehicle ahead is refined into a requirement to maintain a minimum distance that depends on various parameters. Approaches like RSS formalize such requirements so that they can be checked at runtime. Strictly speaking, the adaptation of a safeguard would therefore have to be preceded by an adaptation of the requirement it checks. As long as the requirements are this close to the architecture, it is often valid to skip this step and specify the adaptation at the architecture level. However, it must be shown during development which parameter values of the safeguards are safe in which situations. It is therefore advisable to explicitly consider the requirements and the validity of their adaptation, at least during development.
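The order of steps described here, adapting the requirement first and only then deriving the safeguard, can be sketched as follows. Runtime adaptation is confined to parameter ranges that were justified during development. As a simplification, this sketch uses a time-gap requirement instead of the full RSS formula. The situations and ranges are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DistanceRequirement:
    """Refined requirement: keep at least `min_time_gap_s` to the vehicle ahead."""
    min_time_gap_s: float


# Result of development-time verification (hypothetical numbers): for each
# situation, the range of parameter values that was shown to be safe.
VALIDATED_RANGES = {
    "dry_highway": (1.0, 3.0),
    "wet_highway": (1.8, 4.0),
}


def adapt_requirement(situation: str, proposed_gap_s: float) -> DistanceRequirement:
    """Adapt the requirement first, but only within the validated envelope."""
    if situation not in VALIDATED_RANGES:
        raise ValueError(f"no validated parameter range for {situation!r}")
    low, high = VALIDATED_RANGES[situation]
    return DistanceRequirement(min(max(proposed_gap_s, low), high))


def safeguard_threshold_m(req: DistanceRequirement, speed_mps: float) -> float:
    """Then derive the architecture-level safeguard from the requirement."""
    return req.min_time_gap_s * speed_mps


req = adapt_requirement("wet_highway", proposed_gap_s=1.2)  # clamped to 1.8 s
print(safeguard_threshold_m(req, speed_mps=25.0))
```

An unknown situation raises an error rather than guessing. A real managing system would have to map this to a fallback that is itself covered by the safety concept.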


Let's take another example. If we have to design a safety concept for a people mover that must maneuver in crowded areas, we might want to specify completely different safety concepts as alternatives. While the vehicle is moving at more than 5 km/h, safety concept A is active: the vehicle stops as soon as pedestrians get too close. In a crowded area, however, this would mean that the vehicle would never get going again. Therefore, safety concept B is activated when the vehicle is at a standstill. Under this concept, the system actively monitors the attention of pedestrians. Without getting too close to them, the vehicle slowly moves forward into gaps and checks whether people are moving out of the way, just as a human driver would. Safety concepts A and B are obviously completely different approaches that require different system components and configurations. Adapting to one of the concepts and adjusting parameters such as minimum distance or maximum speed therefore requires explicit reasoning at the requirements level.
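Switching between the two people-mover safety concepts can be sketched as a selection at the requirements level. Only the 5 km/h threshold and the described behaviors come from the text. The component names, distance values, and the standstill tolerance are hypothetical. The text also does not say what happens between standstill and 5 km/h, so the sketch assumes the active concept is kept there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyConcept:
    name: str
    behavior: str
    components: tuple[str, ...]  # hypothetical component names
    min_distance_m: float        # hypothetical parameter value


CONCEPT_A = SafetyConcept("A", "stop as soon as pedestrians get too close",
                          ("distance_monitor", "emergency_stop"), 2.0)
CONCEPT_B = SafetyConcept("B", "creep into gaps while monitoring pedestrian attention",
                          ("attention_monitor", "gap_planner"), 0.5)

SWITCH_SPEED_KMH = 5.0  # from the text
STANDSTILL_KMH = 0.1    # assumed tolerance for "standstill"


def select_concept(speed_kmh: float, active: SafetyConcept) -> SafetyConcept:
    if speed_kmh > SWITCH_SPEED_KMH:
        return CONCEPT_A
    if speed_kmh < STANDSTILL_KMH:
        return CONCEPT_B
    # Assumption: between standstill and 5 km/h the text leaves the choice
    # open; here the active concept is kept to avoid toggling back and forth.
    return active
```

Keeping the active concept in the intermediate speed band acts as hysteresis. The vehicle starts from a standstill under concept B and only hands over to concept A once it exceeds 5 km/h.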

An explicit analysis at the requirements level has the additional advantage of enabling a layered architecture. It decouples the requirements from the architecture, so that the adaptive safety concept can be implemented by different architectural variants, giving more freedom at the architectural level. In an adaptive system, this even means that the system first adapts the requirements and, in a second step, searches for the architectural configuration that best satisfies them. This makes the system more flexible: we can easily add or change a hardware or software component (configuration), and the adaptation automatically benefits from the newly available options without any change to the requirements layer.

Risk Awareness

The further we climb down the requirements tree to its roots, the more aware and the more flexible the system becomes, and the more responsibility is shifted from human engineers to the system. At the same time, it becomes less likely that we will be able to assure the safety of the system. Once we’ve reached the top-level safety goals, we’re already touching the level of risk, which is the ultimate input for any safety concept. But even though many approaches are called "dynamic risk analysis," "dynamic risk management," or "dynamic risk assessment," to name a few, they do not actually allow the system to reason at the level of risk.

Taking the dynamic safety management approach described in [4] as an example, we dynamically evaluate risk parameters such as exposure, controllability, and severity. However, this is nothing more than an approach to adapting top-level safety requirements based on risk-level parameters.

We define parameters of the operational design domain that the car uses at runtime to check whether or not it is in a certain operational situation. If situations can be excluded, the system can also exclude any top-level safety goals that are only relevant in those situations. Consider, for example, a requirement like "the car must not accelerate more than <x> m/s² for more than <y> s". Here we do not re-evaluate controllability or severity at runtime to determine <x> and <y>. Instead, we define mathematical rules for calculating the requirement's parameters depending on the current context.
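The following minimal sketch reflects this division of labor. ODD checks exclude situations and thereby deactivate the safety goals that only matter there. Engineer-defined rules compute <x> and <y> from the context, and a monitor checks the acceleration signal against them. The goal names, the rule, and its numbers are hypothetical placeholders. The system evaluates these rules but does not reason about controllability or severity itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyGoal:
    name: str
    relevant_situations: frozenset[str]


def active_goals(goals: list[SafetyGoal], possible_situations: set[str]) -> list[SafetyGoal]:
    """Drop goals whose situations have all been excluded by the ODD checks."""
    return [g for g in goals if g.relevant_situations & possible_situations]


def acceleration_limits(speed_kmh: float, wet_road: bool) -> tuple[float, float]:
    """Engineer-defined rule for <x> [m/s^2] and <y> [s] (placeholder numbers)."""
    x = 3.0 if speed_kmh < 50.0 else 2.0
    if wet_road:
        x *= 0.7
    y = 0.5
    return x, y


def violates(accel_mps2: list[float], dt_s: float, x: float, y: float) -> bool:
    """True if acceleration stays above x for longer than y seconds."""
    samples_above = 0
    for a in accel_mps2:
        samples_above = samples_above + 1 if a > x else 0
        if samples_above * dt_s > y:
            return True
    return False


x, y = acceleration_limits(speed_kmh=80.0, wet_road=False)
print(violates([2.5] * 10, dt_s=0.1, x=x, y=y))  # True: 1 s above 2.0 m/s^2
```

Counting samples instead of summing time steps avoids accumulating floating-point error in the duration check.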

"The system does not reason about risk, we reason about risk and define rules that the system will follow."

Executive Director of the Fraunhofer Institute for Cognitive Systems IKS

Of course, this is based on considering aspects such as controllability and severity. But the big difference is that we as engineers define these rules, not the system. We decide that these rules lead to acceptably safe behavior, i.e., that implementing these rules (the overall adaptation) leads to a residual risk below the acceptable risk. The system does not reason about risk; we reason about risk and define rules that the system will follow. The same is true for kinematics-based models, such as predictive risk estimation based on collision probabilities [7].
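A collision probability used as a runtime metric can be sketched as follows. Note that this is not the method of [7]. It swaps in a deliberately simple model that assumes the predicted gap to the other road user is normally distributed. The threshold value is hypothetical. The point is where the safety argument lives: in the engineer-chosen threshold, not in the computation.

```python
import math

# Engineer-defined threshold. The argument that staying below it keeps the
# residual risk acceptable is made at development time, not by the system.
MAX_COLLISION_PROBABILITY = 1e-3


def collision_probability(predicted_gap_m: float, gap_std_m: float) -> float:
    """P(gap < 0) if the predicted gap is normally distributed (assumption)."""
    z = predicted_gap_m / gap_std_m
    return 0.5 * (1.0 - math.erf(z / math.sqrt(2.0)))


def monitor(predicted_gap_m: float, gap_std_m: float) -> str:
    """A runtime monitor: compares a metric against a human-chosen limit."""
    p = collision_probability(predicted_gap_m, gap_std_m)
    return "ok" if p <= MAX_COLLISION_PROBABILITY else "intervene"


print(monitor(predicted_gap_m=10.0, gap_std_m=2.0))  # ok
print(monitor(predicted_gap_m=2.0, gap_std_m=2.0))   # intervene
```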

While it is of course correct to call any combination of probability of occurrence and severity a risk, such a quantity is still nothing more than a metric used in a runtime monitor. Such approaches do not reason about risk in the sense of hazard analysis and risk assessment, i.e., in the sense of assessing whether the system is safe enough. They calculate collision probabilities, but it is we as engineers who have reasoned that following these mathematical models leads to an acceptable risk, to a safe enough system.

As philosophical as this distinction may seem, there is a big difference between having a system reason about what is an acceptable risk and having a system follow human-defined rules that result in an acceptable risk. There is an even bigger difference between a system deciding whether a risk is acceptable and a human deciding. By these standards, no approach available today actually gives the system awareness at the risk level. Today, adaptive safety control moves right up to the interface with risk, but it always stays on the side of requirements. And that is good.

Summary

Today's systems must operate in an open context. We must acknowledge that we can't anticipate every conceivable or inconceivable situation. Instead, we must build resilient systems: systems that are aware of their environment, their own state, and their goals, and that adapt accordingly. A key component of resilience is assuring safety. To this end, we add adaptive safety control as an essential component of a resilient architecture.

In fact, there are several different approaches, and because there's no commonly accepted taxonomy, it's easy to get confused when entering the field. The iceberg model provides a simple entry point for classifying them. Ultimately, we must assure the safety of resilient systems. It is therefore essential that decisions made by the system follow the same principles as decisions made by human engineers. For this reason, it is advisable to make models such as architecture models and safety concept models available at runtime. This leads to the idea of Safety Models at Runtime (SM@RT). In principle, any use of models is possible. But as a basic rule, the underlying analysis must always include the architectural level. This solid foundation can then be extended with an analysis at the safety requirements level. Risk is an essential input for adapting high-level requirements. Even so, reasoning about risk, and especially deciding what is an acceptable risk and whether a system is safe enough, should remain with human engineers.

Overall, resilience offers great potential for systems that optimize utility while preserving safety, even when operating in uncertain, open contexts. But designing such systems in an ad hoc manner will create more problems than it solves. Systematic engineering will therefore be key to developing resilient systems, and thus to realizing the potential of cognitive systems.

References

[1] D. Weyns, An Introduction to Self-Adaptive Systems: A Contemporary Software Engineering Perspective. Wiley, 2020.

[2] S. Shalev-Shwartz, S. Shammah, and A. Shashua, "On a formal model of safe and scalable self-driving cars," arXiv preprint arXiv:1708.06374, 2017.

[3] M. Trapp and D. Schneider, "Safety assurance of open adaptive systems – a survey," in Models@run.time, Springer, Cham, 2014, pp. 279–318.

[4] M. Trapp, D. Schneider, and G. Weiss, "Towards safety-awareness and dynamic safety management," in 2018 14th European Dependable Computing Conference (EDCC), 2018, pp. 107–111.

[5] F. Oboril and K.-U. Scholl, "Risk-aware safety layer for AV behavior planning," in 2020 IEEE Intelligent Vehicles Symposium (IV), 2020, pp. 1922–1928.

[6] A. Salvi, G. Weiss, M. Trapp, F. Oboril, and C. Buerkle, "Fuzzy interpretation of operational design domains in autonomous driving," in 2022 IEEE Intelligent Vehicles Symposium (IV), 2022, pp. 1261–1267.

[7] J. Eggert, "Predictive risk estimation for intelligent ADAS functions," in 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), 2014, pp. 711–718. doi: 10.1109/ITSC.2014.6957773.