
Uncertainty in autonomous systems, part 2

Where errors can creep in

If uncertainties in an autonomous system are not dealt with, they impair the system’s functionality and can lead to safety risks. In part 1 of our series on uncertainty, we presented a system for classifying uncertainties. In part 2, we will look at specific sources of uncertainty that have been noted in the relevant literature.

© iStock/Wicki58

Identifying all of the uncertainties that could impair an autonomous system’s ability to make decisions is an important step in the development of that system. The classification system presented in part 1 of this series can be useful here, as it includes many elements that could otherwise be easily overlooked.

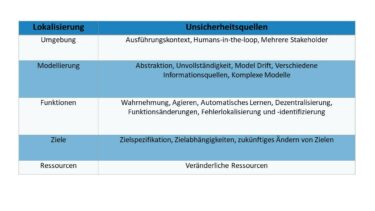

In addition to taxonomies for uncertainty, researchers have also looked extensively at specific sources of uncertainty. These studies give a more detailed picture of how uncertainty impairs the functionality of autonomous systems. Table 2 lists a collection of sources of uncertainty, grouped by their position in the system. We look at each group in more detail below.

© Fraunhofer IKS

Table 2: Sources of uncertainty, grouped by position in the system

Environment

When we talk about uncertainty in the environment, we are not referring to how the system perceives its environment, but to how familiar the system’s developers are with the environment in which it will be deployed. For many complex systems, the deployment context has too many variables to be described completely. If the system works with humans in the loop, an important factor is the unpredictability of human behavior. Uncertainty in the environment can also arise if the context is highly heterogeneous and shaped by multiple stakeholders. This can happen, for example, when robots from different manufacturers need to work together to complete a task.

Modeling

Models are essential for enabling autonomous systems to achieve their tasks, because real-world processes are too complex to be handled in full detail. Representing the real world as a model allows technical systems to make decisions despite this complexity. However, this deliberate abstraction is itself a hidden source of uncertainty, as the model will not behave in precisely the same way as the real world. If a process is not understood in sufficient detail, the resulting model may be incomplete and lack important details. Another problem emerges over a system’s life cycle: if the model is not updated regularly, model drift can mean that it no longer accurately represents real circumstances. It can also be difficult to reconcile models that draw on different sources of information or modeling approaches, such as the discrete and continuous models used in software development. Taken together, these factors can make models so complex that they are difficult to understand and verify.
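
Of these problems, model drift is one that can at least be watched for at runtime. The Python sketch below is an illustration under stated assumptions rather than a method from the sources discussed here: it assumes the model produces numeric predictions that can later be compared with observed values, and it reports possible drift when the mean absolute error over a sliding window exceeds a threshold. The class `DriftMonitor` and its parameters `window_size` and `threshold` are hypothetical.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical sketch: flags possible model drift from recent prediction errors.

    Compares a model's predictions with real observations over a sliding
    window and reports drift when the mean absolute error exceeds a threshold.
    """

    def __init__(self, window_size: int, threshold: float) -> None:
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        # Only the most recent `window_size` absolute errors are kept.
        self.residuals = deque(maxlen=window_size)
        self.threshold = threshold

    def update(self, predicted: float, observed: float) -> bool:
        """Record one prediction/observation pair; return True if drift is suspected."""
        self.residuals.append(abs(predicted - observed))
        # Do not judge drift until the window is full.
        if len(self.residuals) < self.residuals.maxlen:
            return False
        mean_error = sum(self.residuals) / len(self.residuals)
        return mean_error > self.threshold


if __name__ == "__main__":
    monitor = DriftMonitor(window_size=3, threshold=0.5)
    # The model assumes a constant value of 10.0, but reality slowly shifts upward.
    for observed in [10.1, 9.9, 10.0, 10.4, 10.8, 11.2]:
        print(observed, monitor.update(predicted=10.0, observed=observed))
```

In the example, the model keeps predicting 10.0 while the observed values rise, so only the last full window, with a mean error of about 0.8, crosses the threshold of 0.5. Suitable window sizes and thresholds would depend on the application.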

Functions

The functions of an autonomous system can be affected by many sources of uncertainty. Both perception and action functions can have uncertain effects and side effects, particularly if they are based on machine learning. In decentralized architectures, where multiple components are combined to perform a function, there is also a risk of unintended emergent behavior. Changes to functions through software updates can introduce new uncertainties into the system as well. Finally, hardware and software failures can impair the system’s ability to make decisions. Localizing and identifying such errors promptly gives more certainty about what the system is currently able to achieve.
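
Localizing failures can be illustrated with a simple heartbeat scheme. In this minimal sketch, which assumes that every component periodically reports that it is alive, a component counts as failed once its last report is older than a timeout, and a function counts as available only if all components it depends on are healthy. The class `HealthMonitor`, the component names and the function names are hypothetical.

```python
import time
from typing import Dict, List, Optional


class HealthMonitor:
    """Hypothetical heartbeat monitor for localizing failed components.

    A component is considered healthy only if its last heartbeat is at most
    `timeout` seconds old; components that never reported count as failed.
    """

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self.last_seen: Dict[str, float] = {}

    def heartbeat(self, component: str, now: Optional[float] = None) -> None:
        """Record that `component` reported itself alive at time `now`."""
        self.last_seen[component] = time.monotonic() if now is None else now

    def is_healthy(self, component: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        seen = self.last_seen.get(component)
        return seen is not None and now - seen <= self.timeout

    def available_functions(
        self, dependencies: Dict[str, List[str]], now: Optional[float] = None
    ) -> List[str]:
        """Return the functions whose required components are all healthy."""
        now = time.monotonic() if now is None else now
        return sorted(
            function
            for function, components in dependencies.items()
            if all(self.is_healthy(c, now) for c in components)
        )


if __name__ == "__main__":
    monitor = HealthMonitor(timeout=0.5)
    monitor.heartbeat("camera", now=0.0)
    monitor.heartbeat("lidar", now=0.0)
    monitor.heartbeat("camera", now=1.0)  # the lidar has stopped reporting
    dependencies = {
        "obstacle_detection": ["camera", "lidar"],
        "lane_keeping": ["camera"],
    }
    print(monitor.available_functions(dependencies, now=1.2))  # ['lane_keeping']
```

Making the mapping from components to functions explicit is what lets the monitor report which functions remain usable, rather than only which parts have failed.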

Goals

A defining characteristic of autonomous systems is that they work independently towards goals that are set in advance. To ensure that the system behaves as expected, the goals must be specified accurately and precisely. Interactions between multiple goals are a further source of uncertainty: for example, unrecognized dependencies between goals can have unexpected effects on how they are prioritized, as can later changes to goals when existing ones are no longer relevant.
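
Unrecognized dependencies cannot, by definition, be checked automatically, but once dependencies between goals are written down, some of their effects on prioritization can be. The following hypothetical sketch flags a priority inversion: a goal that depends on a prerequisite goal with a lower priority, which a scheduler might therefore postpone. The goal names, the numeric priority scale (higher means more important) and the function `find_priority_inversions` are assumptions for illustration.

```python
from typing import Dict, List, Tuple


def find_priority_inversions(
    priorities: Dict[str, int], depends_on: Dict[str, List[str]]
) -> List[Tuple[str, str]]:
    """Return (goal, prerequisite) pairs where a goal depends on a lower-priority goal.

    Hypothetical check: higher numbers mean higher priority. Only direct,
    explicitly declared dependencies are examined.
    """
    inversions = []
    for goal, prerequisites in depends_on.items():
        for prerequisite in prerequisites:
            # A prerequisite ranked below its dependent goal may be scheduled too late.
            if priorities[prerequisite] < priorities[goal]:
                inversions.append((goal, prerequisite))
    return sorted(inversions)


if __name__ == "__main__":
    priorities = {"avoid_collisions": 5, "deliver_parcel": 3, "recharge_battery": 1}
    depends_on = {"deliver_parcel": ["recharge_battery"]}
    print(find_priority_inversions(priorities, depends_on))
    # [('deliver_parcel', 'recharge_battery')]
```

The check only considers direct dependencies; indirect ones would require traversing the whole dependency graph.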

Resources

Just as the goals of an autonomous system may change, so can the resources available to it. Changes in the availability or utilization of individual resources, such as processing capacity, can affect the system’s quality of service. Complex systems are particularly affected, because they cannot be synchronized in real time.
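
One common way of coping with fluctuating resources is graceful degradation: the system switches to a lower but still acceptable service level when less capacity is available. The sketch below assumes that processing capacity can be expressed as a fraction between 0.0 and 1.0 and that each service level has a fixed minimum requirement; the level names, thresholds and the `safe_stop` fallback are hypothetical.

```python
from typing import List, Tuple

# Hypothetical service levels, ordered from highest to lowest quality, each with
# the minimum fraction of processing capacity it needs.
SERVICE_LEVELS: List[Tuple[str, float]] = [
    ("full", 0.8),
    ("reduced", 0.5),
    ("minimal", 0.2),
]


def select_service_level(available_capacity: float) -> str:
    """Return the best service level that fits the available processing capacity.

    `available_capacity` is the fraction (0.0 to 1.0) of processing capacity the
    system can currently use. If not even the minimal level fits, fall back to a
    hypothetical "safe_stop" mode.
    """
    if not 0.0 <= available_capacity <= 1.0:
        raise ValueError("available_capacity must be between 0.0 and 1.0")
    for name, required in SERVICE_LEVELS:
        if available_capacity >= required:
            return name
    return "safe_stop"


if __name__ == "__main__":
    for capacity in [0.9, 0.6, 0.3, 0.1]:
        print(capacity, select_service_level(capacity))
```

A real system would typically also add hysteresis, so that small fluctuations around a threshold do not make it switch back and forth between levels.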

Uncertainty has become a major focus of research in recent years. In practice, too, it is becoming a more frequent issue for our industrial partners, where the uncertainties that arise often affect system functionality. Sources of uncertainty related to perception have a particularly significant impact. But, as we have seen in both parts of this series, other aspects of uncertainty are also important. The Fraunhofer Institute for Cognitive Systems IKS is working towards a comprehensive system for uncertainty management that examines all uncertainty factors in detail.