Uncertainty in autonomous systems, part 1

Not just a question of perception

Cognitive systems are often affected by the problem of uncertainty, particularly if they use machine learning to perceive their environment. However, a system’s sensors are not the only source of uncertainty. Over the life cycle of any highly complex system, a large number of decisions are made that are vulnerable to the effects of uncertain knowledge. Part 1 of our series on uncertainty presents a classification system that helps to identify these uncertainties.

The decisions that people or autonomous systems make are based on the knowledge available to them at the time. With complete information, optimal decisions are possible, at least in principle. In the vast majority of cases, however, that knowledge is incomplete, or the facts can only be estimated. Such situations are said to be affected by “uncertain knowledge.” Uncertainty is defined as follows:

“Any deviation from the unachievable ideal of completely deterministic knowledge of the relevant system.” [1]

The authors of the article that proposed this definition belong to a community of researchers who use models to support decision-making. Politicians, for example, call on them when assessing laws with wide-ranging consequences. Uncertainty is just as important an issue for autonomous systems. Safety-critical systems in particular have to make decisions that significantly affect their environment and can even put people’s lives in danger.

Identifying uncertainty

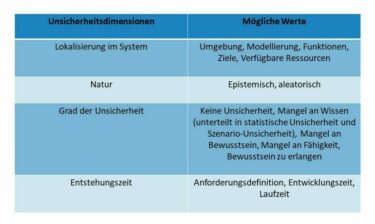

As such, it is crucial when developing an autonomous system to identify every type of uncertainty that could impair the ability of the system to make decisions. To help with this, various researchers have developed a taxonomy that classifies uncertainties according to several dimensions. This classification system is summarized in Table 1. There are four dimensions that are frequently mentioned in the literature, which are explained below.

© Fraunhofer IKS

Table 1: Uncertainty classification system

Position in system

This dimension describes where in the system the uncertainty appears. There are five possible locations:

- Environmental uncertainty exists where the characteristics of a system’s deployment environment are not fully known. For example, one barrier to safe autonomous driving is the countless edge cases that systems have difficulty perceiving, such as regional variations in road markings.

- Autonomous systems often base their decisions on models because the real situation cannot be replicated down to the smallest detail. However, the choice of modeling method introduces model uncertainty, which is often hidden and can be significant, for example if the level of abstraction is too high.

- In addition to the environment and the modeling method, the functions of the autonomous system itself are subject to functional uncertainty. Apart from the perception issues mentioned earlier, the actions the system takes can have consequences that are difficult to predict, or they may fail with a certain probability.

- The goals of the autonomous system matter just as much as the grounds on which it makes decisions. Goal uncertainty, that is, uncertainty about what the system is supposed to achieve, can prevent it from behaving as expected.

- Last but not least, the system needs certain resources in order to process a decision. Resource uncertainty has a similarly negative effect on the system’s ability to make decisions if individual resources are unavailable, for example because of random hardware failures.
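To make the position dimension concrete, the following Python sketch shows one way a development team might record identified uncertainties and check which of the five locations have not been examined yet. It is a minimal illustration under stated assumptions, not a Fraunhofer IKS tool: the names `Position`, `Uncertainty` and `uncovered_positions` are hypothetical, and the catalog entries are simply the examples from the list above.

```python
from dataclasses import dataclass
from enum import Enum


class Position(Enum):
    """Where in the system an uncertainty appears (the five locations above)."""
    ENVIRONMENT = "environment"
    MODEL = "model"
    FUNCTION = "function"
    GOAL = "goal"
    RESOURCE = "resource"


@dataclass(frozen=True)
class Uncertainty:
    """One identified uncertainty, tagged with its position in the system (hypothetical type)."""
    description: str
    position: Position


# Illustrative catalog built from the examples in the text.
catalog = [
    Uncertainty("regional variations in road markings", Position.ENVIRONMENT),
    Uncertainty("level of abstraction of the model too high", Position.MODEL),
    Uncertainty("action fails with a certain probability", Position.FUNCTION),
    Uncertainty("random hardware failure makes a resource unavailable", Position.RESOURCE),
]


def uncovered_positions(entries):
    """Return the positions for which no uncertainty has been identified yet."""
    covered = {u.position for u in entries}
    return [p for p in Position if p not in covered]


print([p.value for p in uncovered_positions(catalog)])  # ['goal']
```

Here the check points out that no goal uncertainty has been recorded, which is exactly the kind of gap the taxonomy is meant to reveal.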

Type of uncertainty

The literature distinguishes between two key types of uncertainty: aleatoric and epistemic. Aleatoric uncertainty (from the Latin alea, meaning “dice”) arises from unpredictable, inherently random processes in nature. This includes the uncertainty that results from human behavior. Epistemic uncertainty, in contrast, results from a lack of knowledge. This type of uncertainty can therefore, in principle, be reduced by gathering the missing knowledge.
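The difference between the two types can be illustrated with a coin of unknown bias p. Even if p were known exactly, the outcome of the next toss would remain random (aleatoric uncertainty); the uncertainty about p itself, however, shrinks as more tosses are observed (epistemic uncertainty). The sketch below assumes a Beta-Bernoulli model with a uniform prior, a standard textbook choice rather than a method from this article; the function names are hypothetical.

```python
def posterior(heads: int, tosses: int, prior_a: float = 1.0, prior_b: float = 1.0):
    """Beta(a, b) posterior over the coin's unknown bias p (uniform prior by default)."""
    return prior_a + heads, prior_b + (tosses - heads)


def epistemic_variance(a: float, b: float) -> float:
    """Var[p] under Beta(a, b): uncertainty about the bias itself (lack of knowledge)."""
    return a * b / ((a + b) ** 2 * (a + b + 1))


def aleatoric_variance(a: float, b: float) -> float:
    """E[p(1 - p)] under Beta(a, b): randomness of a single toss even if p were known."""
    return a * b / ((a + b) * (a + b + 1))


# Observe more and more tosses, half of them heads.
for tosses in (10, 100, 1000):
    a, b = posterior(heads=tosses // 2, tosses=tosses)
    print(f"{tosses:5d} tosses: epistemic={epistemic_variance(a, b):.4f} "
          f"aleatoric={aleatoric_variance(a, b):.4f}")
```

Between 10 and 1,000 tosses the epistemic term drops from about 0.019 to about 0.00025, while the aleatoric term stays close to 0.25: more data removes the lack of knowledge but not the randomness of the toss. By the law of total variance, the two terms add up to the total variance m(1 - m) of the next toss, where m = a / (a + b).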

Degree of uncertainty

Not all of the information available to an autonomous system can be treated the same way. Importantly, uncertainty is not a black-and-white matter of known versus unknown. Instead, its degree can be rated on a scale that ranges from none, through lack of knowledge (where the system is aware of the gap) and lack of awareness, to the inability to gain awareness (see Table 1). Some authors further subdivide lack of knowledge: statistical uncertainty can be expressed using statistical methods such as probability theory, whereas scenario uncertainty can only be described by listing the possible outcomes, without assigning probabilities to them.
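The degree scale and the split within lack of knowledge can be sketched as data types. In this hypothetical Python example, statistical uncertainty carries probabilities, so a planner can compute an expected cost, whereas scenario uncertainty only lists outcomes, so the planner can at best bound the cost by the worst listed case. The pedestrian outcomes and cost values are invented for illustration, and the names `Degree`, `is_aware`, `StatisticalUncertainty`, `ScenarioUncertainty` and `assess` are assumptions, not part of any established library.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Union


class Degree(IntEnum):
    """Ordered scale for the degree of uncertainty: a higher value means less is known."""
    NONE = 0
    LACK_OF_KNOWLEDGE = 1
    LACK_OF_AWARENESS = 2
    INABILITY_TO_GAIN_AWARENESS = 3


def is_aware(degree: Degree) -> bool:
    """True if the system knows what it does and does not know (NONE or LACK_OF_KNOWLEDGE)."""
    return degree <= Degree.LACK_OF_KNOWLEDGE


@dataclass
class StatisticalUncertainty:
    """Lack of knowledge quantified with probabilities over the possible outcomes."""
    probabilities: dict[str, float]


@dataclass
class ScenarioUncertainty:
    """Lack of knowledge that can only be described by listing the possible outcomes."""
    outcomes: list[str]


# Hypothetical cost of each outcome for a planner (illustrative numbers only).
COST = {"waits": 0.0, "crosses": 10.0, "turns back": 1.0}


def assess(u: Union[StatisticalUncertainty, ScenarioUncertainty]) -> float:
    if isinstance(u, StatisticalUncertainty):
        # Probabilities are available, so the expected cost can be computed.
        return sum(p * COST[o] for o, p in u.probabilities.items())
    # Scenarios carry no probabilities: only the worst listed case can be used.
    return max(COST[o] for o in u.outcomes)


print(assess(StatisticalUncertainty({"waits": 0.9, "crosses": 0.1})))  # 1.0
print(assess(ScenarioUncertainty(["waits", "crosses", "turns back"])))  # 10.0
```

Uncertainty at `LACK_OF_AWARENESS` or above cannot be represented this way at all, because the possible outcomes are not known in the first place.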

Timing of emergence

Finally, uncertainty does not only emerge once the system is in operation. It can appear at any point in the software life cycle and, if not remedied, affect later phases. For example, if the system and its deployment environment are insufficiently understood when the requirements are defined, the requirements will be incorrect. Programming errors during development reduce confidence that the system will work correctly. Both ultimately impair the autonomous system’s ability to make decisions during operation.
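This propagation across the life cycle can be sketched as follows, assuming a simplified life cycle with five sequential phases; the phase names and the function `affected_phases` are hypothetical. An uncertainty that is never remedied is carried through to operation, while one that is remedied in a later phase stops affecting anything after that phase.

```python
from enum import IntEnum
from typing import List, Optional


class Phase(IntEnum):
    """Simplified software life cycle in chronological order (assumed for illustration)."""
    REQUIREMENTS = 1
    DESIGN = 2
    IMPLEMENTATION = 3
    VERIFICATION = 4
    OPERATION = 5


def affected_phases(emerges_in: Phase, remedied_in: Optional[Phase] = None) -> List[Phase]:
    """Phases affected by an uncertainty that emerges in `emerges_in`.

    If it is never remedied, it propagates all the way into operation.
    If it is remedied in some phase, later phases are no longer affected.
    """
    end = Phase.OPERATION if remedied_in is None else remedied_in
    if end < emerges_in:
        raise ValueError("an uncertainty cannot be remedied before it emerges")
    return [p for p in Phase if emerges_in <= p <= end]


# A misunderstood deployment environment during requirements definition, never remedied:
print([p.name for p in affected_phases(Phase.REQUIREMENTS)])
# A programming error that is found and fixed during verification:
print([p.name for p in affected_phases(Phase.IMPLEMENTATION, Phase.VERIFICATION)])
```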

This classification system provides a detailed overview of the many uncertainties that can affect autonomous systems. Work by the Fraunhofer Institute for Cognitive Systems IKS and its industrial partners has shown that it is also applicable and useful in practice. For example, our researchers used it to determine which tests were needed to confirm the resilience of a logistics robot. A comprehensive understanding of uncertainty is therefore an essential foundation for protecting autonomous systems against incorrect behavior. For this reason, uncertainty management is a core research field for Fraunhofer IKS.

[1] W. E. Walker et al., “Defining Uncertainty: A Conceptual Basis for Uncertainty Management in Model-Based Decision Support,” 2003.

Part 2 of this series focuses on the sources of uncertainty.