
Safety Engineering

Generative AI as a gap filler

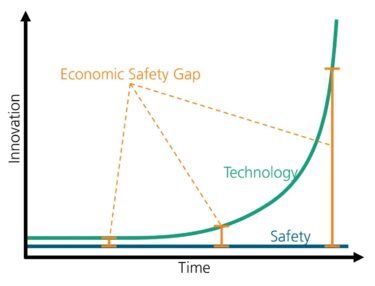

Artificial intelligence opens up new economic opportunities for companies. In safety-critical systems, however, integrating AI raises significant safety concerns. The result is a gap between economic potential and safety: the economic safety gap. Generative artificial intelligence (Generative AI, GenAI) can help close it.

© iStock/Lucyna Koch

Safety engineering is now an indispensable part of product development. New technologies are revolutionizing the possibilities available to us, but they also pose major challenges to proven safety methods. The so-called “economic safety gap” arises when new technologies (especially AI-based systems) are developed faster than existing safety methods can evaluate, safeguard, and certify them.

Conference Paper, 2025: “Mind the Economic Safety Gap” (Publica.fraunhofer.de)

This growing gap between technological progress and safety certification is creating a potentially dangerous economic shortfall. Innovative technical solutions cannot be deployed because the analyses recommended in existing standards and regulations cannot be applied to them, or can be applied only with great effort. This effort drives up development costs and makes many potential applications uneconomical. Resolving this dilemma requires innovative safety engineering methods that reduce this effort and its cost.

Generative AI as a solution?

One promising solution is generative artificial intelligence (GenAI), a technology that can independently generate new content, such as text or designs, based on large amounts of training data. Well-known examples include systems such as ChatGPT, which process natural-language input and generate new, context-specific content.

In the context of safety engineering, GenAI can help to accelerate and partially automate safety-related processes. A prototypical GenAI-supported safety assistant could, for example, perform the following tasks:

- Hazard analysis and risk assessment: Automation of hazard identification and assessment of associated risks.

- Derivation of safety requirements: Efficient generation of requirements based on system architecture and hazards.

- Planning safety-related validation and verification measures: Support in planning tests to demonstrate compliance with defined safety requirements.
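
To make the risk-assessment task in the list above more concrete, here is a minimal sketch under an assumed example domain: automotive hazard analysis and risk assessment in the style of ISO 26262, where each hazard is rated for severity (S0 to S3), exposure (E0 to E4), and controllability (C0 to C3). The division of labor is also an assumption, not something the article specifies: a GenAI assistant might propose the ratings, while the resulting risk class is computed deterministically so a reviewer can check it. The function name `asil` and the example hazards and ratings are hypothetical.

```python
# Minimal sketch: ISO 26262-style ASIL determination (assumed example domain).
# A GenAI assistant could propose the S/E/C ratings; the classification itself
# stays deterministic so that a reviewer can check it.

ASIL_BY_SUM = {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}


def asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S (0-3), E (0-4) and C (0-3) ratings to a risk class.

    Any class rated 0 means no ASIL is assigned (QM). Otherwise the sum
    S + E + C reproduces the ISO 26262-3 risk graph: 7 -> A, 8 -> B,
    9 -> C, 10 -> D, and anything lower -> QM.
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("rating out of range")
    if 0 in (severity, exposure, controllability):
        return "QM"
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


# Hypothetical hazards with illustrative ratings, not a real assessment.
hazards = [
    ("Unintended full braking on the motorway", 3, 4, 2),
    ("Delayed parking-assist warning", 1, 3, 2),
]
for name, s, e, c in hazards:
    print(f"{name}: {asil(s, e, c)}")
# Unintended full braking on the motorway: ASIL C
# Delayed parking-assist warning: QM
```

The sum rule is a compact way to reproduce the standard's risk graph; a production tool would more likely encode the table explicitly so that auditors can compare it cell by cell.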

By utilizing these technologies, safety engineers can save valuable resources and improve the quality of their safety cases. This allows them to focus more on complex applications, which are increasingly in demand today.

Reliability as a challenge

Despite these promising approaches, several research challenges remain. Safety analyses form the basis for developing safety-critical systems and must therefore be carried out thoroughly and reliably. If GenAI is to be used for safety analysis, the technology needs further improvement. This involves, first, developing measures that make large language models (LLMs) more reliable and, second, investigating how such models can be fine-tuned with domain-specific data. The two approaches complement each other and together can yield a software tool specifically designed to meet the requirements of safety engineering. Close collaboration between AI experts and safety specialists is needed to refine the technology further and to build trust in its results.
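
The text leaves open which reliability measures are meant. One simple measure, assumed here for illustration, is to ask the model for structured JSON output and check every entry against a fixed schema before it reaches a human reviewer, so that malformed or incomplete hazard entries are rejected rather than silently accepted. The field names, the severity scale, and the function `validate_hazards` are hypothetical, and the LLM call itself is omitted.

```python
import json

# Hypothetical schema for one hazard entry returned by an LLM.
REQUIRED_FIELDS = {"hazard": str, "cause": str, "severity": int}
SEVERITY_RANGE = range(0, 4)  # assumed S0..S3 scale


def _has_type(value, typ) -> bool:
    # bool is a subclass of int in Python, so reject it explicitly for int fields.
    return isinstance(value, typ) and not (typ is int and isinstance(value, bool))


def validate_hazards(raw: str) -> tuple[list[dict], list[str]]:
    """Parse raw model output and split it into accepted entries and error messages."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [], [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, list):
        return [], ["expected a JSON list of hazard entries"]

    accepted, errors = [], []
    for i, entry in enumerate(data):
        if not isinstance(entry, dict):
            errors.append(f"entry {i}: not a JSON object")
            continue
        problems = [
            f"missing or mistyped field '{name}'"
            for name, typ in REQUIRED_FIELDS.items()
            if not _has_type(entry.get(name), typ)
        ]
        # Value checks only run once all types are known to be correct.
        if not problems:
            if entry["severity"] not in SEVERITY_RANGE:
                problems.append(f"severity {entry['severity']} out of range")
            if not entry["hazard"].strip():
                problems.append("empty hazard description")
        if problems:
            errors.extend(f"entry {i}: {p}" for p in problems)
        else:
            accepted.append(entry)
    return accepted, errors


# Example output as an LLM might return it (hypothetical).
raw = (
    '[{"hazard": "Unintended braking", "cause": "Ghost object from radar", "severity": 3},'
    ' {"hazard": "", "cause": "unknown", "severity": 5}]'
)
accepted, errors = validate_hazards(raw)
print(len(accepted), errors)
```

A schema check of this kind catches only formal defects; whether an accepted hazard is plausible still has to be judged by a safety engineer.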

One promising approach is to develop customized retrieval-augmented generation (RAG) systems, which efficiently retrieve relevant information and make it available for automated analysis. Initial results show that such approaches can significantly improve the quality of the identified hazards and safety requirements.
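
The article does not describe how its customized RAG systems work. The general RAG pattern is to retrieve the passages most relevant to a question from a document collection and place them in the prompt, so the model answers from project-specific sources rather than from its training data alone. The sketch below shows only the retrieval and prompt-assembly steps, with deliberate simplifications: it replaces the learned embeddings typical of RAG systems with plain bag-of-words cosine similarity, omits the LLM call, and uses a hypothetical three-document corpus.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; no stemming or stop-word removal.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc_id: cosine(q, Counter(tokenize(documents[doc_id]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below and cite sources in brackets.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical project documents an assistant might index.
DOCS = {
    "item-def": "The emergency braking function brakes the vehicle when an obstacle is detected.",
    "field-report": "False obstacle detections from radar reflections caused unintended braking.",
    "hmi-spec": "The dashboard shows a warning icon in yellow.",
}
query = "Which hazards can the emergency braking function cause when obstacle detection is wrong?"
print(build_prompt(query, DOCS))
```

Replacing `retrieve` with an embedding-based search would change only the scoring; the prompt-assembly step stays the same.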

While technological capabilities are growing exponentially, safety engineering is progressing only slowly: the widening gap between the two poses an economic safety risk.

When the economic safety gap shrinks

Generative AI can provide targeted support for safety engineering, for example through automated risk analyses and the derivation of safety requirements from system architectures and data. This can significantly speed up safety checks and narrow the economic safety gap: innovations can be implemented more quickly without compromising safety standards. It remains crucial, however, that the results are reliable, traceable, and continuously validated, so that the safety of technological applications can be raised to a new level.
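
The demand that results stay traceable and continuously validated can be made partly checkable. The following is a minimal sketch, assuming a simple data model that does not come from the article: each safety requirement records which hazards it mitigates and which verification measures are planned for it. A gap check, rerun whenever the assistant regenerates its output, then lists unmitigated hazards, links to unknown hazards, and requirements without any verification measure. All identifiers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    hazard_ids: set[str]  # hazards this safety requirement mitigates
    verification: list[str] = field(default_factory=list)  # planned tests or reviews


def trace_gaps(hazard_ids: set[str], requirements: list[Requirement]) -> dict[str, list[str]]:
    """Report traceability gaps between hazards, requirements and verification."""
    covered = set().union(*(r.hazard_ids for r in requirements))
    return {
        # Hazards that no requirement mitigates.
        "unmitigated_hazards": sorted(hazard_ids - covered),
        # Requirements pointing at hazards that are not in the analysis.
        "unknown_hazard_refs": sorted(covered - hazard_ids),
        # Requirements with no planned verification measure.
        "unverified_requirements": sorted(r.req_id for r in requirements if not r.verification),
    }


# Hypothetical output of one assistant run.
hazards = {"H1", "H2", "H3"}
requirements = [
    Requirement("SR-1", {"H1"}, ["TC-1"]),
    Requirement("SR-2", {"H2", "H4"}),
]
print(trace_gaps(hazards, requirements))
```

Such a check does not judge whether a requirement is adequate; it only makes missing links visible, which is where human review would then concentrate.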

Safe Intelligence Day 2026

GenAI will also be the focus of Safe Intelligence Day 2026, which will take place on February 5, 2026, at Fraunhofer IKS in Munich. Learn more about the added value LLMs can bring to various applications. The event also offers the opportunity to exchange ideas with other participants and scientists from Fraunhofer IKS about opportunities, challenges, and possible solutions.

In view of the constantly evolving technological landscape, it is therefore essential that safety engineering not only keeps pace, but also proactively develops new methods and tools to ensure the safety of future technologies. Only in this way can the economic safety gap be closed and the innovative strength of safety-critical applications be secured.