
Concept-based Models

How visual concepts help to understand an AI’s decision

Modern AI models have demonstrated remarkable capabilities in computer vision tasks such as image classification. Their decision-making process, however, remains largely opaque (the so-called black box problem). This is particularly problematic for safety-critical applications such as medical imaging or autonomous driving. Fraunhofer IKS is exploring ways to make this black box more transparent.


The use of AI in autonomous vehicles or in medical technology usually requires some level of interpretability. Beyond meeting safety requirements, interpretability can also help to reveal and address model insufficiencies during development and deployment. For example, a radiologist could use the explanation of an AI's decision to judge how trustworthy its suggested diagnosis is.

However, understanding the decision-making process of modern deep neural networks (DNNs) is challenging. A common approach in computer vision is to produce saliency maps, which highlight the image regions relevant to a prediction. Such explainability methods, though, can be unreliable and are often difficult to interpret by visual inspection [1]. In recent years, there have been growing efforts to explain models in a human-interpretable way using high-level visual concepts (e.g. [2]–[5]).

What are visual concepts?

Concepts are high-level, human-interpretable features in an image that are relevant to the classification task. For traffic sign classification, for example, the shape of a sign, its color, or the symbols on it could be defined as concepts (see Figure 1).

© Fraunhofer IKS

Figure 1: Examples of concept annotations for different traffic signs. (The images are part of the GTSRB dataset [6].)

Concept-based models aim to predict such high-level concepts in an input image and then use these concepts to classify the image. Concept Bottleneck Models [2], one example of this type of model, consist of a concept model and a task model. The concept model, usually a convolutional neural network (CNN), predicts the concepts from the input image. The task model, e.g. a simple neural network such as a multi-layer perceptron (MLP), classifies the image based on the output of the concept model.

For traffic sign classification, the concept model would be trained to detect concepts like the shape, color, or numbers on the sign. The task model would then classify the traffic sign based on the detected concepts (see Figure 2).

© Fraunhofer IKS

Figure 2: Classification process for concept-based models.
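The two-stage process in Figure 2 can be sketched in a few lines of framework-free Python. This is a minimal illustration under several assumptions: the concept model is a stub that turns precomputed logits into probabilities (a real one would be a CNN operating on pixels), the concept and class names are hypothetical, and the task model is a single linear layer with hand-picked weights rather than a trained MLP.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical concept and class names, for illustration only.
CONCEPTS = ["round", "triangular", "red_border", "number_30"]
CLASSES = ["speed_limit_30", "yield", "other"]

# Task model: one linear layer from concept probabilities to class scores.
# The weights are hand-picked for this sketch, not learned.
TASK_WEIGHTS = [
    [2.0, -2.0, 2.0, 3.0],   # speed_limit_30
    [-2.0, 3.0, 2.0, -2.0],  # yield
    [0.0, 0.0, 0.0, 0.0],    # other (constant score of 0)
]
TASK_BIAS = [-3.0, -2.0, 0.0]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def concept_model(concept_logits: List[float]) -> Dict[str, float]:
    """Stand-in for the CNN: converts one logit per concept into the
    probability that the concept is present in the image."""
    return {name: sigmoid(z) for name, z in zip(CONCEPTS, concept_logits)}


def task_model(concepts: Dict[str, float]) -> str:
    """Classifies using only the concept probabilities (the 'bottleneck')."""
    c = [concepts[name] for name in CONCEPTS]
    scores = [sum(w * x for w, x in zip(row, c)) + b
              for row, b in zip(TASK_WEIGHTS, TASK_BIAS)]
    return CLASSES[max(range(len(CLASSES)), key=scores.__getitem__)]


def predict(concept_logits: List[float]) -> Tuple[Dict[str, float], str]:
    concepts = concept_model(concept_logits)
    return concepts, task_model(concepts)


concepts, label = predict([4.0, -4.0, 4.0, 4.0])
print(label)  # speed_limit_30
```

Because the task model sees nothing but the concept dictionary, that intermediate result doubles as the explanation: it shows which concepts were detected and, through the weights, how each one pushed the class scores.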

Training the concept model requires concept annotations in the form of binary labels (concept "present" or "not present") for each defined concept and each image. For complex concept-class relations, the task model can be trained on data. In simpler cases, it can instead be modeled by a domain expert as a set of decision rules, without any training. For example, one rule could be "If concepts A, B, and C are present, classify the image as X."
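An expert-defined task model of the kind just described might look like the following sketch. The rules, the concept names, and the 0.5 threshold used to turn concept probabilities into "present"/"not present" decisions are all assumptions for illustration.

```python
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

# Hypothetical expert rules: if all listed concepts are present, predict the class.
RULES: List[Tuple[FrozenSet[str], str]] = [
    (frozenset({"round", "red_border", "number_30"}), "speed_limit_30"),
    (frozenset({"triangular", "red_border", "points_down"}), "yield"),
]


def present_concepts(probs: Dict[str, float], threshold: float = 0.5) -> Set[str]:
    """Turns concept-model probabilities into binary 'present' decisions."""
    return {name for name, p in probs.items() if p >= threshold}


def rule_based_task_model(probs: Dict[str, float]) -> Optional[str]:
    """Returns the class of the first rule whose concepts are all present,
    or None if no rule applies."""
    present = present_concepts(probs)
    for required, label in RULES:
        if required <= present:  # subset test: every required concept is present
            return label
    return None


print(rule_based_task_model(
    {"round": 0.97, "red_border": 0.91, "number_30": 0.88, "triangular": 0.02}
))  # speed_limit_30
```

Returning `None` when no rule fires is one possible design choice: it lets the system hand the image to a human instead of forcing a guess.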

Benefits of concept-based models

Concept-based models increase interpretability by making the decision-making process more transparent, while achieving performance comparable to standard image classification models [2]. They show which concepts are detected in the image and which of these concepts, in which combination, are relevant for the class prediction. This level of interpretability can help to reveal insufficiencies in the model's decision-making process and provide the transparency called for by regulation such as the proposed EU AI Act [7].

The intermediate concept layer not only offers interpretability, but also allows for intervention during deployment: any change made to the concept predictions propagates to the class prediction. A domain expert could therefore check and correct potentially wrong concept predictions on challenging images, which in turn could prevent a misclassification by the task model.
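A minimal sketch of such an intervention, assuming a hypothetical rule-based task model and made-up concept outputs: the expert's verified values simply overwrite the model's concept predictions before the task model runs again.

```python
from typing import Dict

THRESHOLD = 0.5


def task_model(c: Dict[str, float]) -> str:
    """Hypothetical rule-based task model for two speed-limit signs."""
    is_round_red = c["round"] >= THRESHOLD and c["red_border"] >= THRESHOLD
    if is_round_red and c["number_30"] >= THRESHOLD:
        return "speed_limit_30"
    if is_round_red and c["number_50"] >= THRESHOLD:
        return "speed_limit_50"
    return "unknown"


def intervene(predicted: Dict[str, float], corrections: Dict[str, float]) -> Dict[str, float]:
    """Returns a copy of the concept predictions in which the expert's
    verified values (1.0 = present, 0.0 = absent) replace the model's."""
    corrected = dict(predicted)
    corrected.update(corrections)
    return corrected


# Hypothetical output on a hard image: the model is unsure about the digits.
predicted = {"round": 0.95, "red_border": 0.90, "number_30": 0.60, "number_50": 0.55}
print(task_model(predicted))  # speed_limit_30

# The expert inspects the sign, sees a 50, and overrides the two digit concepts.
corrected = intervene(predicted, {"number_30": 0.0, "number_50": 1.0})
print(task_model(corrected))  # speed_limit_50
```

The task model itself is untouched; only its input changes, which is what makes this kind of correction possible without retraining.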

Current Challenges

Although these benefits are promising, a few challenges still need to be overcome before concept-based models can be used in a safety-critical context.

A central challenge for explainability methods is evaluating the explanation itself, i.e. verifying that the explanation correctly represents the decision-making process of the AI model. For concept-based models, the difficulty lies in providing evidence that the concept predictions truly represent the corresponding concepts in the image. A concept prediction might encode more information than just the presence of the concept, or might be based on other, correlated concepts or features in the image. Consequently, any explanation built on such faulty concept predictions will be inaccurate.

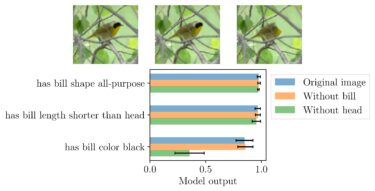

Figure 3: Output of a concept model for images where the bill or the whole head of the bird is removed. [8]

Figure 3 illustrates this problem. It shows the output of a model trained on bird concepts for three images: the original image and two manipulated versions, one without the bill and one without the head of the bird. We would expect the predictions for concepts describing the bill to change from “present” to “absent” (model output < 0.5) once this part is no longer visible. Instead, these concepts are still predicted with high confidence even though the bill, or even the whole head, has been removed from the image. This issue usually goes undetected by classical metrics such as test accuracy (the fraction of correct predictions) when they are evaluated on data with properties similar to the training data. Recently, however, additional evaluation methods for concept models have been proposed that can help to address this challenge (e.g. [8], [9]).
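The removal experiment from Figure 3 can be turned into a simple automated check. The sketch below assumes that concept outputs for the original and the manipulated image are already available (producing the manipulated image, e.g. by masking the part, is out of scope). The concept names and numbers are hypothetical and are not the values shown in Figure 3.

```python
from typing import Dict, Iterable, List


def concepts_surviving_removal(
    original: Dict[str, float],
    manipulated: Dict[str, float],
    removed_part_concepts: Iterable[str],
    threshold: float = 0.5,
) -> List[str]:
    """Returns the concepts that describe the removed image region, were
    predicted as present on the original image, and are *still* predicted
    as present (output >= threshold) after the region was removed."""
    return [
        c for c in removed_part_concepts
        if original[c] >= threshold and manipulated[c] >= threshold
    ]


# Hypothetical outputs for bill-related concepts before and after removing the bill.
original = {"bill_shape_dagger": 0.93, "bill_color_black": 0.88, "bill_length_short": 0.71}
no_bill = {"bill_shape_dagger": 0.90, "bill_color_black": 0.34, "bill_length_short": 0.69}

print(concepts_surviving_removal(original, no_bill, original.keys()))
# ['bill_shape_dagger', 'bill_length_short']
```

Any concept returned here is a warning sign that its prediction relies on something other than the visible part it is supposed to describe.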

This blog post is based on the paper "Concept Correlation and Its Effects on Concept-Based Models" by Lena Heidemann, Maureen Monnet, and Karsten Roscher.

The recent publication "Concept Correlation and Its Effects on Concept-Based Models" [8] investigates the challenge that concept correlations pose for concept-based models. A concept model can exploit correlations in the dataset and predict certain concepts solely from the presence of correlated concepts, while still achieving high test accuracy. This can lead to misclassifications in rare scenarios in which the correlated concepts appear independently of each other. Especially in safety-critical applications such as medical imaging, each concept prediction should be based on the presence of that particular concept in the image. Our analysis shows that this problem exists for many applications of concept models.
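A first diagnostic is to look for strongly correlated concepts in the annotations themselves, since those pairs are candidates for this kind of shortcut. The sketch below assumes binary per-image annotations and uses the phi coefficient (the Pearson correlation of two binary variables) with an arbitrary threshold of 0.8. It is an illustration, not necessarily the analysis carried out in [8], and the concept names and data are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def phi_coefficient(a: List[int], b: List[int]) -> float:
    """Pearson correlation of two binary (0/1) annotation vectors."""
    n = len(a)
    n11 = sum(1 for x, y in zip(a, b) if x and y)  # both concepts present
    na, nb = sum(a), sum(b)                        # each concept present
    denominator = math.sqrt(na * (n - na) * nb * (n - nb))
    # A concept that is always (or never) present has no defined correlation.
    return (n * n11 - na * nb) / denominator if denominator else 0.0


def correlated_pairs(
    annotations: Dict[str, List[int]], threshold: float = 0.8
) -> List[Tuple[str, str, float]]:
    """Lists concept pairs whose annotations have |phi| >= threshold."""
    pairs = []
    for c1, c2 in combinations(sorted(annotations), 2):
        phi = phi_coefficient(annotations[c1], annotations[c2])
        if abs(phi) >= threshold:
            pairs.append((c1, c2, phi))
    return pairs


# Hypothetical annotations for six images (1 = concept present).
annotations = {
    "red_bill":  [1, 1, 0, 0, 1, 0],
    "red_head":  [1, 1, 0, 0, 1, 0],
    "long_tail": [1, 0, 1, 0, 0, 1],
}
print(correlated_pairs(annotations))  # [('red_bill', 'red_head', 1.0)]
```

A high correlation in the annotations does not prove that the model takes the shortcut; it only points to concept pairs worth testing, for instance on images in which just one of the two concepts is visible.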

Additionally, we present simple solution approaches, such as loss weighting, which show promising initial results. For a more technical and in-depth description of the problem and potential solutions, please see the paper [8]. Meanwhile, the team at Fraunhofer IKS continues to work on addressing these challenges and making computer vision models more interpretable.
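To give an idea of what loss weighting on the concept level can look like, here is a generic per-concept weighted binary cross-entropy. This is an assumption-laden sketch, not the specific weighting scheme from [8]: how the weights are chosen (e.g. larger for concepts the model tends to infer from correlated ones) is left to the caller.

```python
import math
from typing import Sequence


def weighted_concept_bce(
    probs: Sequence[float],
    targets: Sequence[int],
    weights: Sequence[float],
    eps: float = 1e-7,
) -> float:
    """Binary cross-entropy over the concepts of one image, where each
    concept's term is scaled by its weight. Returns the mean over concepts."""
    total = 0.0
    for p, t, w in zip(probs, targets, weights):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -w * (t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


# Up-weighting the second (poorly predicted) concept increases its share of the loss.
uniform = weighted_concept_bce([0.9, 0.4], [1, 1], [1.0, 1.0])
weighted = weighted_concept_bce([0.9, 0.4], [1, 1], [1.0, 3.0])
print(uniform < weighted)  # True
```

With all weights set to 1, the function reduces to the ordinary mean binary cross-entropy used to train concept models on binary annotations.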

[1] J. Adebayo, J. Gilmer, M. Muelly, I. Goodfellow, M. Hardt, and B. Kim, ‘Sanity Checks for Saliency Maps’, in Advances in Neural Information Processing Systems, 2018. Available: https://proceedings.neurips.cc...

[2] P. W. Koh et al., ‘Concept Bottleneck Models’, in Proceedings of the 37th International Conference on Machine Learning, 2020, pp. 5338–5348. Available: https://proceedings.mlr.press/...

[3] Z. Chen, Y. Bei, and C. Rudin, ‘Concept whitening for interpretable image recognition’, Nature Machine Intelligence, vol. 2, no. 12, 2020, doi: 10.1038/s42256-020-00265-z.

[4] M. Losch, M. Fritz, and B. Schiele, ‘Interpretability Beyond Classification Output: Semantic Bottleneck Networks’, arXiv:1907.10882, 2019, doi: 10.48550/arXiv.1907.10882.

[5] C. Li, M. Z. Zia, Q.-H. Tran, X. Yu, G. D. Hager, and M. Chandraker, ‘Deep Supervision with Intermediate Concepts’, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 8, pp. 1828–1843, 2019, doi: 10.1109/TPAMI.2018.2863285.

[6] J. Stallkamp, M. Schlipsing, J. Salmen, and C. Igel, ‘The German Traffic Sign Recognition Benchmark: A multi-class classification competition’, in The 2011 International Joint Conference on Neural Networks, 2011, pp. 1453–1460.

[7] European Commission, ‘Proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts’, COM/2021/206 final, 2021.

[8] L. Heidemann, M. Monnet, and K. Roscher, ‘Concept Correlation and Its Effects on Concept-Based Models’, in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 4780–4788. Available: https://openaccess.thecvf.com/...

[9] M. E. Zarlenga et al., ‘Towards Robust Metrics for Concept Representation Evaluation’, arXiv:2301.10367, 2023, doi: 10.48550/arXiv.2301.10367.

This project was funded by the Bavarian State Ministry of Economic Affairs, Regional Development and Energy as part of the project support for the thematic development of the Institute for Cognitive Systems.