How constraint-aware models can make industrial operations safe and efficient

Achieving safety with AI systems requires a comprehensive and collaborative approach, including technical, ethical, and regulatory considerations. A central aspect is designing AI systems that comply with safety requirements defined in constraints.

© iStock/Nils Knopf

Defining safety requirements as constraints is useful in many applications. Constraints keep the system operating safely by setting limits on parameters such as temperature, pressure, or distance. For instance, in petrochemical plants, the primary goal is to produce as much product as possible (e.g., petrol) while minimizing costs such as energy, yet parameters like temperature and pressure must stay within bounds to guarantee safety. The same holds for other safety-critical applications, such as power systems, robotics, and avionics.
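To make the idea of bounded parameters concrete, here is a minimal sketch of how such limits could be written down and checked. The variable names and the numeric limits are hypothetical and chosen only for illustration; they are not taken from any real plant.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Bound:
    """Closed interval [low, high] that a process variable must stay within."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical limits, for illustration only.
SAFETY_BOUNDS = {
    "temperature_c": Bound(low=20.0, high=350.0),
    "pressure_bar": Bound(low=1.0, high=25.0),
}


def violated_constraints(state: dict[str, float]) -> list[str]:
    """Return the names of all variables whose value lies outside its bound."""
    return [
        name
        for name, bound in SAFETY_BOUNDS.items()
        if not bound.contains(state[name])
    ]
```

A state is considered safe when `violated_constraints` returns an empty list. Real safety constraints are often more involved than independent intervals, for example when they couple several variables.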

In industrial applications, this problem is mostly solved with control theory, with Model Predictive Control (MPC) being one of the most popular methods. However, AI opens the door to new levels of automation. Classical controllers do not scale well with the huge data throughput of the highly integrated production chains expected in the fourth industrial revolution. Machine Learning (ML)-based systems, by contrast, offer high flexibility: traditional methods rely heavily on manual tuning, adjustment, and integration, whereas fitting and adjusting ML models can be integrated into an automated pipeline.

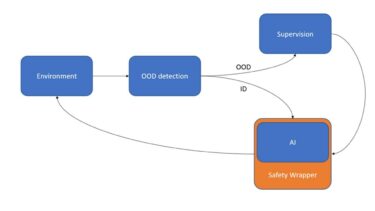

However, obtaining AI systems that respect a set of constraints is not trivial, since the algorithms used for training ML models are not designed for constrained optimization. With that in mind, here at Fraunhofer IKS we have proposed a framework that improves the constraint satisfaction of AI systems. The framework, shown in the figure, adds two elements to the closed-loop control: a safety wrapper and an out-of-distribution detection that triggers external supervision in unknown situations. Both elements are detailed below.

The framework uses a safety wrapper and an out-of-distribution detection that triggers external supervision in unknown situations.

Safety wrapper

The first aspect of our framework is ensuring that the AI system respects the system-level constraints. To do so in the least intrusive way, a safety wrapper is added around the AI system to check that its decisions conform to the safety requirements. This extra layer of protection is a crucial element of the framework and is designed to enhance the safety and reliability of the AI system.

For dynamic systems, where the constraints are usually defined as continuous functions, the safety wrapper can be built around a dynamic model that predicts the impact of a chosen action, i.e., it predicts the next state given the current state and that action. If the prediction indicates that the action will lead to an unsafe outcome, constrained optimization can be used to find alternative actions that lie inside the safe region. For discrete-event systems, on the other hand, the safety wrapper can be designed using a formal specification language, such as linear temporal logic.
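The continuous case can be illustrated with a minimal sketch. It assumes a hypothetical scalar linear model `x_next = a * x + b * u` and simple interval constraints on state and action; the function names, the model, and its coefficients are illustrative assumptions, not the implementation used in the framework. For this special case the constrained optimization (find the action closest to the ML proposal that keeps the predicted state safe) has a closed-form solution, so no solver is needed.

```python
def predict_next_state(x: float, u: float, a: float = 0.9, b: float = 0.5) -> float:
    """Hypothetical one-step dynamic model: x_next = a * x + b * u."""
    return a * x + b * u


def safe_action(
    x: float,
    u_ml: float,
    x_min: float,
    x_max: float,
    u_min: float,
    u_max: float,
    a: float = 0.9,
    b: float = 0.5,
) -> float:
    """Return the action closest to u_ml whose predicted next state is safe.

    Solves  min_u (u - u_ml)^2
            s.t.  x_min <= a * x + b * u <= x_max  and  u_min <= u <= u_max.
    For a scalar linear model the feasible set is an interval, so the
    minimiser is u_ml clipped to that interval.
    """
    if b == 0:
        raise ValueError("the action has no effect on the state (b == 0)")

    # Translate the state bounds into bounds on the action.
    lo = (x_min - a * x) / b
    hi = (x_max - a * x) / b
    if b < 0:
        lo, hi = hi, lo

    # Intersect with the actuator limits.
    lo = max(lo, u_min)
    hi = min(hi, u_max)
    if lo > hi:
        raise RuntimeError("no action keeps the predicted state inside the safe region")

    # A safe proposal passes through unchanged; an unsafe one is projected.
    return min(max(u_ml, lo), hi)
```

For example, with `x = 10.0` and safe region `[0, 10]`, the proposal `u_ml = 5.0` would push the predicted state above the upper bound, so `safe_action(10.0, 5.0, 0.0, 10.0, -5.0, 5.0)` returns a smaller action instead. With nonlinear models or coupled constraints, the clipping step would be replaced by a numerical constrained optimizer.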

External monitoring through out-of-distribution (OOD) detection

Constraint satisfaction is not the only safety concern to address before deploying an AI system. AI approaches are chosen with the expectation that they can solve complex problems in open-ended environments. That is only possible if the system still performs safely in situations outside what the ML model experienced during training, or outside the operational domain considered when designing the safety wrapper. However, ML models are known to generalize poorly beyond their training data and may make inaccurate predictions when inputs differ, sometimes only subtly, from that data. Such inputs are known as out-of-distribution (OOD) samples and result from distributional shifts in the data.

To add another layer of safety to the framework, a monitor responsible for OOD detection is included. When it detects an OOD input, it triggers an external supervisor that either instructs the ML controller on how to guide the system through the unexpected situation or takes control itself and steers the system to a known safe region. The framework is agnostic to the supervisor's nature, which may be a rule-based system, a controller designed with control theory, a safe stop, or even a human supervisor.
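The handover between ML controller and supervisor can be sketched as follows. The post does not prescribe a particular OOD detection method, so this example uses a simple per-feature z-score test as a stand-in; the class `ZScoreMonitor`, the function `control_step`, and the threshold value are hypothetical names and choices made for illustration.

```python
from statistics import mean, stdev


class ZScoreMonitor:
    """Flags inputs that lie far from the training data, feature by feature."""

    def __init__(self, training_inputs, threshold=3.0):
        # Transpose the list of samples into one tuple of values per feature.
        columns = list(zip(*training_inputs))
        self.means = [mean(column) for column in columns]
        self.stds = [stdev(column) for column in columns]
        self.threshold = threshold

    def is_ood(self, x):
        """True if any feature deviates more than `threshold` standard deviations."""
        return any(
            abs(value - m) > self.threshold * s
            for value, m, s in zip(x, self.means, self.stds)
        )


def control_step(x, monitor, ml_controller, supervisor):
    """Pick the action source for the current input x.

    In-distribution inputs are handled by the ML controller; OOD inputs
    are handed over to the external supervisor.
    """
    if monitor.is_ood(x):
        return supervisor(x)
    return ml_controller(x)
```

Here `ml_controller` and `supervisor` are plain callables, which reflects the framework's agnosticism about the supervisor: a rule-based fallback, a classical controller, or a function that requests a safe stop would all fit. In the full framework, the ML controller's action would additionally pass through the safety wrapper.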

Conclusion

Achieving safe AI systems remains a significant challenge that requires continuous research and development efforts. This blog post presented one of the safety frameworks developed here at Fraunhofer IKS. It includes two levels of safety verification: a safety wrapper that makes the ML controller respect safety constraints, and an OOD detector that triggers external supervision when facing unknown circumstances.