
Determining uncertainty, part 1

Uncertainties we can rely on

Complex machine learning (ML) methods present researchers with a problem: Eliminating errors before autonomous systems go into operation, and reliably detecting errors at runtime, are laborious and challenging tasks. At present, this severely restricts the use of machine learning in safety-critical systems. New approaches are needed if these areas are to benefit from the advantages of ML in the future.

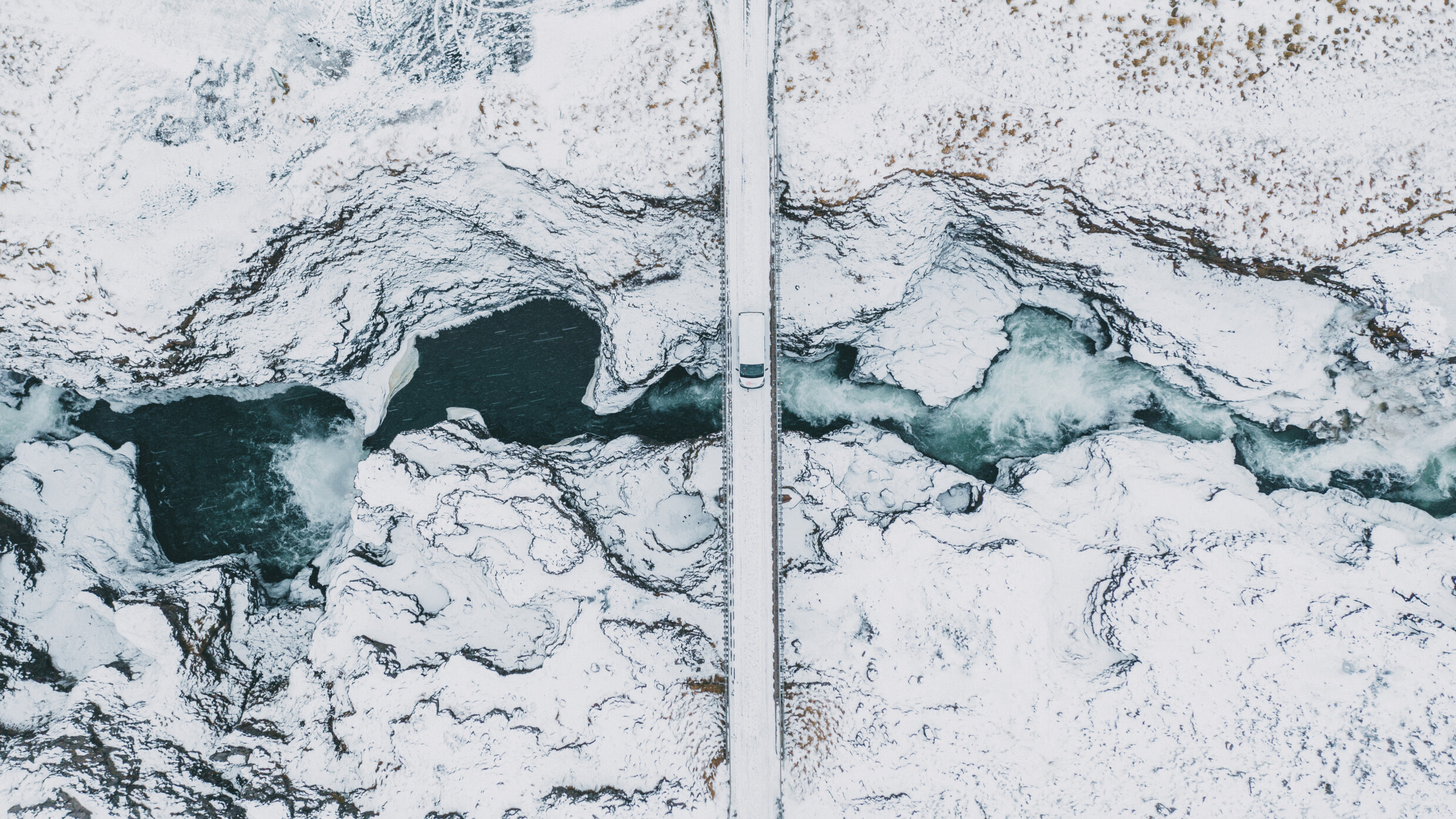

© iStock.com/Oleh_Slobodeniuk

Before artificial intelligence (AI) can be used in safety-critical applications such as self-driving cars or the automated diagnosis of clinical images in medicine, it must be made safe. This requires reliable uncertainty values for the predictions these systems make. Let’s take an example: An autonomous vehicle detects an object on the road with one of its sensors and has a fraction of a second to decide whether it is a person or something else. In this case, the uncertainty values indicate how reliable the AI’s current predictions are. Other components of the overall safety system then use these values as inputs when calculating the current safety status.

© Fraunhofer IKS

AI-based 2D object recognition with uncertainty values

Determining these uncertainty values is a challenging task. Many ML models, especially neural networks, can be viewed as highly complex nested functions. In classification, for example, such a function assigns a category (label) to an image. During training, the parameters are adjusted so that the confidence values for the correct categories increase. These values serve roughly as a measure of how certain the model is of a statement. However, confidence values often lie between 0 and 1 and add up to 1, but they still cannot simply be interpreted as probabilities.
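
Confidence values like these are typically produced by a softmax function applied to the network's raw outputs (logits). The minimal sketch below uses hypothetical logits for three illustrative classes. It shows why the values look like probabilities: they lie between 0 and 1 and sum to 1. It also shows that scaling the logits changes the confidence without changing the predicted class. Nothing in the function itself ties the numbers to how often the prediction is actually correct.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Map raw network outputs (logits) to values in [0, 1] that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() and leaves the result unchanged.
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# Hypothetical logits for the classes "person", "bicycle" and "other".
logits = np.array([4.0, 1.0, 0.5])
conf = softmax(logits)
# Multiplying the logits by 3 keeps the same top class but inflates its confidence.
conf_scaled = softmax(3.0 * logits)

print(conf.round(3), conf.sum())
print(conf_scaled.round(3), conf_scaled.sum())
```

Both outputs satisfy the formal properties of a probability distribution. Which of them, if either, matches the model's real error rate cannot be read off the numbers alone.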

Neural networks perform very well, yet they often assign very high confidence values to false statements and can be poorly calibrated. A poorly calibrated model is one in which the average confidence value does not match the actual accuracy of its predictions. In a well-calibrated model, by contrast, an average confidence of 70 percent means that about 70 percent of its predictions are correct. Some changes in the design and training of neural networks have significantly improved the general performance of these models. However, they have also made the models less well calibrated: Their confidence values tend to be too high (overconfident).
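
Calibration in this sense can be measured. A widely used metric is the expected calibration error (ECE). Predictions are grouped into bins by confidence, and the gap between average confidence and accuracy in each bin is averaged, weighted by the number of predictions in that bin. The sketch below is one straightforward implementation with equal-width bins. The bin count and the toy data are assumptions for illustration, not values from Fraunhofer IKS.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """Weighted mean |average confidence - accuracy| over equal-width confidence bins."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)  # 1.0 if the prediction was right, else 0.0
    # Bin k covers [k/n_bins, (k+1)/n_bins); a confidence of exactly 1.0 goes in the last bin.
    bin_ids = np.minimum((confidences * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bin_ids == b
        if in_bin.any():
            gap = abs(confidences[in_bin].mean() - correct[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the fraction of samples in this bin
    return ece

# Toy example: 10 predictions, 7 of them correct.
correct = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
overconfident = expected_calibration_error([0.9] * 10, correct)
calibrated = expected_calibration_error([0.7] * 10, correct)
print(f"overconfident: {overconfident:.2f}, calibrated: {calibrated:.2f}")
```

The overconfident toy model is 90 percent confident but right only 70 percent of the time, so it gets an ECE of about 0.2. The model whose confidence matches its accuracy gets an ECE of about 0.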

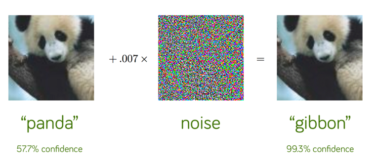

Machine learning can be outsmarted

It is also well known that tiny, targeted changes to the input can be used to outsmart many ML models. With such “adversarial attacks”, models can systematically be made to predict a desired category with a very high confidence value. This works even if that category is very different from the true one.
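
The panda example credited in this article comes from Goodfellow, Shlens and Szegedy (2014), who introduced the fast gradient sign method (FGSM). It shifts each input value by a small amount ε in the direction that increases the model's loss. The sketch below applies FGSM to a plain logistic-regression classifier rather than a neural network, so the gradient can be written by hand. The weights, the input and ε are hypothetical. Because the perturbation is aligned with the sign of every weight, its effects add up across many input dimensions. This holds even though each individual value changes by only 1 percent of its range.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_logistic(x, y, w, b, epsilon):
    """Fast gradient sign method against a binary logistic-regression model.

    x: input vector, y: true label (0 or 1), (w, b): model weights and bias.
    """
    p = sigmoid(w @ x + b)   # predicted probability of class 1
    grad_x = (p - y) * w     # gradient of the binary cross-entropy loss w.r.t. x
    return x + epsilon * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 1000                                  # e.g. a small flattened image
w = rng.normal(size=d)                    # hypothetical trained weights
x = rng.uniform(0.1, 0.9, size=d)         # hypothetical input with "pixel" values in [0, 1]
b = 3.0 - w @ x                           # bias chosen so the clean input gets logit +3 (class 1)

x_adv = fgsm_logistic(x, y=1, w=w, b=b, epsilon=0.01)
x_adv = np.clip(x_adv, 0.0, 1.0)          # keep values in the valid range (inactive here)

p_clean = sigmoid(w @ x + b)
p_adv = sigmoid(w @ x_adv + b)
print(f"clean: P(class 1) = {p_clean:.3f}, adversarial: P(class 1) = {p_adv:.3f}")
print("largest change to any input value:", np.abs(x_adv - x).max())
```

With 1,000 inputs, the logit shifts by ε times the sum of the absolute weights. That shift is far larger than the clean margin of 3, so the predicted class flips.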

Another reason for the frequently unreliable confidence values is that models are trained on datasets that do not represent a full description of every possible state of reality. If a model has only been trained on images of dogs and domestic cats, it will be no surprise if it classifies a jaguar as a domestic cat and assigns the classification a very high confidence value. This is because the model is not familiar with the concept of a jaguar and the jaguar looks much more like a domestic cat than a dog.

© Goodfellow, I. J., Shlens, J., & Szegedy, C. (2014).

AI: Minimal image noise turns a panda into a gibbon

Fraunhofer IKS is making AI more self-critical

A further problem is that many established machine learning methods learn a single fixed value for each parameter instead of a distribution over possible values. This is not ideal, because there is no reason to assume that a single rigid model is the best description of the patterns and relationships in the data. Relying on one such model increases the risk of it being misled.
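
One common way to move from a single parameter set to a distribution is to keep several plausible models and compare their predictions. These could be members of an ensemble or weight samples from an approximate posterior. The sketch below shows a widely used entropy-based decomposition, which is not necessarily the approach used at Fraunhofer IKS:

- the entropy of the averaged prediction measures total uncertainty;
- the average entropy of the individual models measures the uncertainty each model already sees;
- the difference between the two (the mutual information) measures how much the models disagree.

The model outputs are hypothetical numbers for a two-class problem.

```python
import numpy as np

def entropy(p: np.ndarray) -> np.ndarray:
    """Shannon entropy (in nats) along the last axis."""
    return -np.sum(p * np.log(np.clip(p, 1e-12, 1.0)), axis=-1)

def decompose_uncertainty(member_probs):
    """member_probs: array of shape (n_models, n_classes), one softmax output per model."""
    member_probs = np.asarray(member_probs, dtype=float)
    mean_probs = member_probs.mean(axis=0)      # prediction of the combined model
    total = entropy(mean_probs)                 # total predictive uncertainty
    expected = entropy(member_probs).mean()     # average uncertainty of each single model
    mutual_info = total - expected              # disagreement between the models
    return mean_probs, total, expected, mutual_info

# All models agree, so there is no disagreement-based (epistemic) uncertainty.
_, _, _, mi_agree = decompose_uncertainty([[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]])
# The models disagree strongly; any single one of them would hide this.
_, total, _, mi_disagree = decompose_uncertainty([[0.95, 0.05], [0.05, 0.95], [0.5, 0.5]])
print(f"agreement: MI = {mi_agree:.3f}, disagreement: MI = {mi_disagree:.3f}")
```

In the second case, two of the three models are individually quite confident. Only the comparison between them reveals that the combined prediction should not be trusted.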

In autonomous driving, the problems described above can have fatal consequences. For example, a person in a costume or in an unusual pose might not be recognized as a human, which could lead to an accident.

To prevent such errors, we at Fraunhofer IKS are developing methods that assign higher uncertainty values to decisions about inputs that differ greatly from the concepts learned from the training dataset. This allows the model to indicate more reliably when its predictions are uncertain.
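
One published family of techniques for this goal measures how far an input lies from the training data in the network's feature space. It is shown here only as an illustration, not as the Fraunhofer IKS method. The sketch below fits one mean per class and a shared covariance matrix to training features. It then scores a new input by its smallest Mahalanobis distance to any class mean. A large score signals an unfamiliar input, such as the jaguar shown to a dog-and-cat model, even when the softmax confidence is high. The class `MahalanobisScorer`, the two-dimensional toy features and the ridge term are hypothetical. In practice, the features would come from an inner layer of a trained network.

```python
import numpy as np

class MahalanobisScorer:
    """Hypothetical helper: scores how unfamiliar a feature vector is relative to the training classes."""

    def __init__(self, features: np.ndarray, labels: np.ndarray, ridge: float = 1e-6):
        self.classes = np.unique(labels)
        # One mean vector per class.
        self.means = np.stack([features[labels == c].mean(axis=0) for c in self.classes])
        # Shared covariance of the features after subtracting each sample's class mean.
        centred = features - self.means[np.searchsorted(self.classes, labels)]
        cov = centred.T @ centred / len(features)
        # The small ridge term keeps the covariance matrix invertible.
        self.precision = np.linalg.inv(cov + ridge * np.eye(cov.shape[0]))

    def score(self, x: np.ndarray) -> float:
        """Smallest squared Mahalanobis distance to any class mean (larger = more unfamiliar)."""
        diffs = self.means - x
        d2 = np.einsum("ij,jk,ik->i", diffs, self.precision, diffs)
        return float(d2.min())

# Toy training features for two classes, e.g. "dog" (0) and "domestic cat" (1).
rng = np.random.default_rng(0)
dogs = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(200, 2))
cats = rng.normal(loc=[3.0, 0.0], scale=0.5, size=(200, 2))
features = np.vstack([dogs, cats])
labels = np.array([0] * 200 + [1] * 200)

scorer = MahalanobisScorer(features, labels)
familiar = scorer.score(np.array([3.0, 0.1]))    # close to the "cat" cluster
unfamiliar = scorer.score(np.array([1.5, 6.0]))  # far from both clusters
print(f"familiar: {familiar:.2f}, unfamiliar: {unfamiliar:.2f}")
```

How the score is turned into a decision is left open here. One option would be a threshold chosen on validation data, with a fallback behavior for inputs above it.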

With its expertise in this area, Fraunhofer IKS is a member of the ADA Lovelace Center for Analytics, Data and Applications. This collaborative platform includes the Fraunhofer institutes IIS, IISB and IKS as well as Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and Ludwig-Maximilians-Universität München (LMU Munich).

Conference Paper, 2019

Managing Uncertainty of AI-based Perception for Autonomous Systems

Publica.fraunhofer.de

For information on Fraunhofer IKS's services, please visit our Dependable Artificial Intelligence website.