Verification of medical AI systems

What do regulations say about your medical diagnostics algorithm?

Regulations and standards for trustworthy AI are in place, and high-risk medical AI systems will be up for audits soon. But how exactly can we translate those high-level rules into technical measures for validating actual code and algorithms? Fraunhofer IKS’s AI verification framework provides a solution.

© iStock/Claudio Ventrella

Picture this: You, a skilled medical data scientist, have developed a medical diagnostics system. It classifies cancerous cell types in medical images with high precision. As usual, you have evaluated it on test data and analyzed its failure modes. Now it is time to package it as a software tool and deliver it to customers. But here comes the big question: Have you covered all the bases before real-world use, where people’s lives hang in the balance?

Regulations and standards ensure safe and trustworthy AI development

AI safety and trustworthiness have always been in the spotlight, and with the unveiling of the EU AI Act[1], they are now more than ever. This landmark regulation provides a solid foundation for ensuring that every aspect of AI development is meticulously addressed.

Returning to the medical AI system example, let’s look at Article 9 (Risk management system), paragraph 2, of the EU AI Act. It mandates a risk management system for high-risk AI systems, comprising the following steps (paraphrased):

- Identification and analysis of the known and foreseeable risks;

- Estimation and evaluation of the risks that may emerge during use in accordance with the intended purpose and under conditions of reasonably foreseeable misuse;

- Evaluation of risks based on the post-market monitoring system data;

- Adoption of suitable risk management measures.
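The four steps can be pictured as operations on a risk register. The Python sketch below is a hypothetical illustration, not something prescribed by the AI Act: the `Risk` fields, the 1 to 5 severity and probability scales, the severity × probability score and the rule that every risk at or above a threshold needs at least one mitigation are all assumptions borrowed from common risk-management practice.

```python
from dataclasses import dataclass, field


# Hypothetical risk record; field names and scales are illustrative assumptions.
@dataclass
class Risk:
    description: str
    context: str      # "intended use", "foreseeable misuse" or "post-market"
    severity: int     # assumed scale: 1 (negligible) .. 5 (catastrophic)
    probability: int  # assumed scale: 1 (rare) .. 5 (frequent)
    mitigations: list[str] = field(default_factory=list)

    @property
    def score(self) -> int:
        # Severity x probability: a common convention, not mandated by Article 9.
        return self.severity * self.probability


def open_risks(register: list[Risk], threshold: int) -> list[Risk]:
    """Return risks at or above the threshold that still lack a mitigation (step d)."""
    return [r for r in register if r.score >= threshold and not r.mitigations]


register = [
    # Steps a and b: risks identified for intended use and foreseeable misuse.
    Risk("Rare cell type misclassified", "intended use", 4, 2,
         ["Flag low-confidence predictions for physician review"]),
    Risk("Images from an unvalidated scanner", "foreseeable misuse", 4, 3),
    # Step c: a risk surfaced by post-market monitoring data.
    Risk("Accuracy drift at a new clinic", "post-market", 3, 2),
]

for risk in open_risks(register, threshold=8):
    print(f"Needs a risk management measure: {risk.description} (score {risk.score})")
```

With a threshold of 8, only the scanner risk is reported: the rare-cell-type risk already has a mitigation, and the drift risk scores below the threshold.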

This is excellent! You now have a reference guideline to ensure trustworthy AI development, amenable to future certification.

Requirements or interpretation of requirements?

However, the journey doesn’t end here. You must now interpret each requirement in the context of your use case: the medical diagnostics system. Moreover, the arguments you provide must be transparent, coherent, and solid enough to convince your customers and certification bodies.

Let’s consider step 2 for the medical image classifier: What exactly is its intended purpose? How will physicians use it? What are potential misuse scenarios? For every intended use and potential misuse, you need to explore and document all risks that may emerge. This requires a great deal of time, effort, and multidisciplinary collaboration.
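A simple way to keep this analysis systematic is to list every intended-use and misuse scenario explicitly and check that each one has at least one documented risk. In the hypothetical sketch below, the scenarios, the risks and the `undocumented_scenarios` helper are invented for illustration; in practice they would come out of the multidisciplinary analysis described above.

```python
# Hypothetical scenarios for the cell-type classifier; replace with your own analysis.
scenarios = {
    "intended use": [
        "Physician reviews suggested cell types before diagnosis",
    ],
    "foreseeable misuse": [
        "Output used as a final diagnosis without physician review",
        "Images acquired with an unvalidated staining protocol",
    ],
}

# Documented risks, keyed by the scenario they belong to (also hypothetical).
documented_risks = {
    "Physician reviews suggested cell types before diagnosis": [
        "Automation bias: physician over-trusts confident but wrong predictions",
    ],
    "Output used as a final diagnosis without physician review": [
        "Missed cancer diagnosis due to an uncorrected false negative",
    ],
}


def undocumented_scenarios(scenarios: dict[str, list[str]],
                           risks: dict[str, list[str]]) -> list[str]:
    """Return every scenario that has no documented risk yet."""
    return [
        scenario
        for group in scenarios.values()
        for scenario in group
        if not risks.get(scenario)
    ]


print(undocumented_scenarios(scenarios, documented_risks))
```

Here the check reports the staining-protocol scenario, since no risk has been documented for it yet.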

And that was just the tip of the iceberg! Imagine applying this level of scrutiny to every EU AI Act requirement in a bigger project involving multiple companies and more complex AI. Now think about the management hurdles: Who is responsible for meeting which requirement? How can we draw a crystal-clear roadmap for safe and trustworthy AI development involving many stakeholders?

Fraunhofer IKS’s solution: AI verification tree

Imagine a tree made of safety claims about an AI system. At the root sit the overarching EU AI Act articles. One main branch carries the statement “AI system satisfies article 9.2”. This branch splits into four sub-branches, one for each of the items a, b, c and d of article 9.2. Each sub-branch divides further, and so on, until we arrive at the “leaves” of the tree, which represent hands-on technical tests and code checks. The verification tree covers several AI and application categories, each represented by a new branch in the tree.
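In code, such a tree can be pictured as a recursive data type. The minimal Python sketch below is an illustration only, not the data model of the Fraunhofer IKS tool: each node holds a claim, an inner claim holds when all of its sub-claims hold, and a leaf holds when its technical check passes. The claim texts, the check functions and the 0.95 accuracy threshold are hypothetical placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ClaimNode:
    """A safety claim; inner nodes are decomposed into sub-claims."""
    claim: str
    children: list[ClaimNode] = field(default_factory=list)
    # Only leaves carry a check: a technical test or code check returning True/False.
    check: Optional[Callable[[], bool]] = None

    def holds(self) -> bool:
        if not self.children:
            # A leaf without a check has no evidence yet, so its claim is unsupported.
            return self.check() if self.check is not None else False
        # An inner claim holds only if every sub-claim holds.
        return all(child.holds() for child in self.children)


def accuracy_meets_target() -> bool:
    measured_accuracy = 0.97  # placeholder for a value computed on held-out test data
    return measured_accuracy >= 0.95  # assumed acceptance threshold


def out_of_scope_images_rejected() -> bool:
    return True  # placeholder for a real out-of-distribution input test


article_9_2 = ClaimNode("AI system satisfies article 9.2", [
    ClaimNode("9.2(a): known and foreseeable risks are identified", [
        ClaimNode("Failure modes of the classifier are analysed",
                  check=lambda: True),  # placeholder for a documented analysis
    ]),
    ClaimNode("9.2(b): risks under intended use and misuse are evaluated", [
        ClaimNode("Accuracy on test data meets the target", check=accuracy_meets_target),
        ClaimNode("Out-of-scope images are rejected", check=out_of_scope_images_rejected),
    ]),
    ClaimNode("9.2(c): post-market monitoring data is evaluated"),  # no evidence yet
    ClaimNode("9.2(d): risk management measures are adopted"),    # no evidence yet
])
root = ClaimNode("EU AI Act", [article_9_2])

print(root.holds())
```

Because items (c) and (d) have no evidence attached yet, the root claim does not hold and the script prints `False`.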

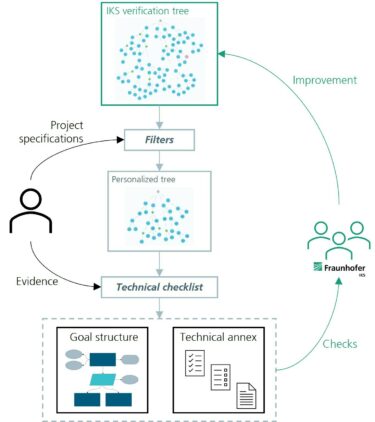

Our approach is coined as “AI verification tree”, a project started at Fraunhofer Institute for Cognitive Systems IKS in 2023. The process of utilizing the tree is illustrated in Figure 1. Here is how it works:

© Fraunhofer IKS

The verification tree facilitates the complex process of AI verification. Users describe their project, receive a customized checklist, and provide technical evidence to substantiate claims.

A user begins by entering the project specifications. This information is used to filter the tree, pruning it down to the branches relevant to the project. From the pruned tree, a checklist is generated that outlines all the evidence needed to substantiate the statements in the tree. The user then provides technical evidence to fulfill each item. Once the process is complete, documents are generated automatically, phrased in accepted safety-assurance language. They are categorized by the stakeholder responsible for each claim and by the stage of the AI lifecycle in which the claim is addressed.
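The workflow can also be sketched in a few lines of Python. This is a hypothetical illustration, not the IKS implementation. It assumes that each node is tagged with the categories it applies to (an empty tag set means it applies to every project) and that each leaf names a responsible stakeholder and an AI lifecycle stage; all names, tags and claims are made up. Pruning keeps only nodes whose categories are part of the project specification, the checklist consists of the remaining leaves, and the grouping mirrors how the generated documents are categorized.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    claim: str
    categories: frozenset[str] = frozenset()  # empty set: applies to every project
    children: list[Node] = field(default_factory=list)
    stakeholder: Optional[str] = None  # set on leaves only
    stage: Optional[str] = None        # AI lifecycle stage, set on leaves only


def prune(node: Node, project: set[str]) -> Optional[Node]:
    """Keep only branches whose categories are all part of the project specification."""
    if not node.categories <= project:
        return None
    kept = [p for p in (prune(child, project) for child in node.children) if p is not None]
    if node.children and not kept:
        return None  # every sub-claim was irrelevant, so drop this claim as well
    return Node(node.claim, node.categories, kept, node.stakeholder, node.stage)


def checklist(node: Node) -> list[Node]:
    """Collect the leaves: each one is an item that needs technical evidence."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in checklist(child)]


def group_items(items: list[Node]) -> dict[tuple[Optional[str], Optional[str]], list[str]]:
    """Group checklist items by responsible stakeholder and lifecycle stage."""
    groups: dict[tuple[Optional[str], Optional[str]], list[str]] = defaultdict(list)
    for item in items:
        groups[(item.stakeholder, item.stage)].append(item.claim)
    return dict(groups)


# Hypothetical source tree with application-specific branches.
tree = Node("AI system satisfies article 9.2", children=[
    Node("Training data covers all target cell types", frozenset({"medical imaging"}),
         stakeholder="data provider", stage="data management"),
    Node("Classifier is robust to image perturbations", frozenset({"deep learning"}),
         stakeholder="model developer", stage="model verification"),
    Node("Perception works in adverse weather", frozenset({"automotive"}),
         stakeholder="model developer", stage="model verification"),
    Node("Post-market performance is monitored",
         stakeholder="operator", stage="operation"),
])

project = {"medical imaging", "deep learning"}  # hypothetical project specification
pruned = prune(tree, project)
for (stakeholder, stage), claims in group_items(checklist(pruned)).items():
    print(f"{stakeholder} / {stage}: {claims}")
```

For this project, the automotive claim is pruned away, and the three remaining checklist items are grouped under the data provider, the model developer and the operator.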

Developing a unifying verification process for diverse scenarios seems daunting, but we have devised a feasible development plan that builds on our “safeAI” expertise, which has proven successful in the automotive and rail industries. We use the feedback from each project to revise and improve the source verification tree. This feedback loop creates a dynamic knowledge base that grows more powerful with each use.

The verification tree is just one component of a larger picture: the verification framework, an ongoing project at Fraunhofer IKS. Among other things, this framework includes methods for quantifying uncertainty in AI system safety assurance, automated tests for deep learning models[2], and simulation tools for causal inference methods[3].

The framework is now up and running, but development has not stopped: the verification tree is continuously being extended and refined, with an emphasis on applications in the medical and healthcare domains.

[1] EU AI Act: see https://eur-lex.europa.eu/lega...

[2] Robuscope: see https://www.iks.fraunhofer.de/...

[3] PARCS: see https://github.com/FraunhoferI...

This work was funded by the Bavarian Ministry for Economic Affairs, Regional Development and Energy as part of a project to support the thematic development of the Institute for Cognitive Systems.