UN International Year of Quantum Science and Technology

Quantum Computing Comes Knocking on the Door of Business

The United Nations has declared 2025 the International Year of Quantum Science and Technology, marking 100 years since the beginnings of quantum mechanics. It is an occasion for a critical review and an optimistic preview of quantum computing technology, including from the point of view of business.

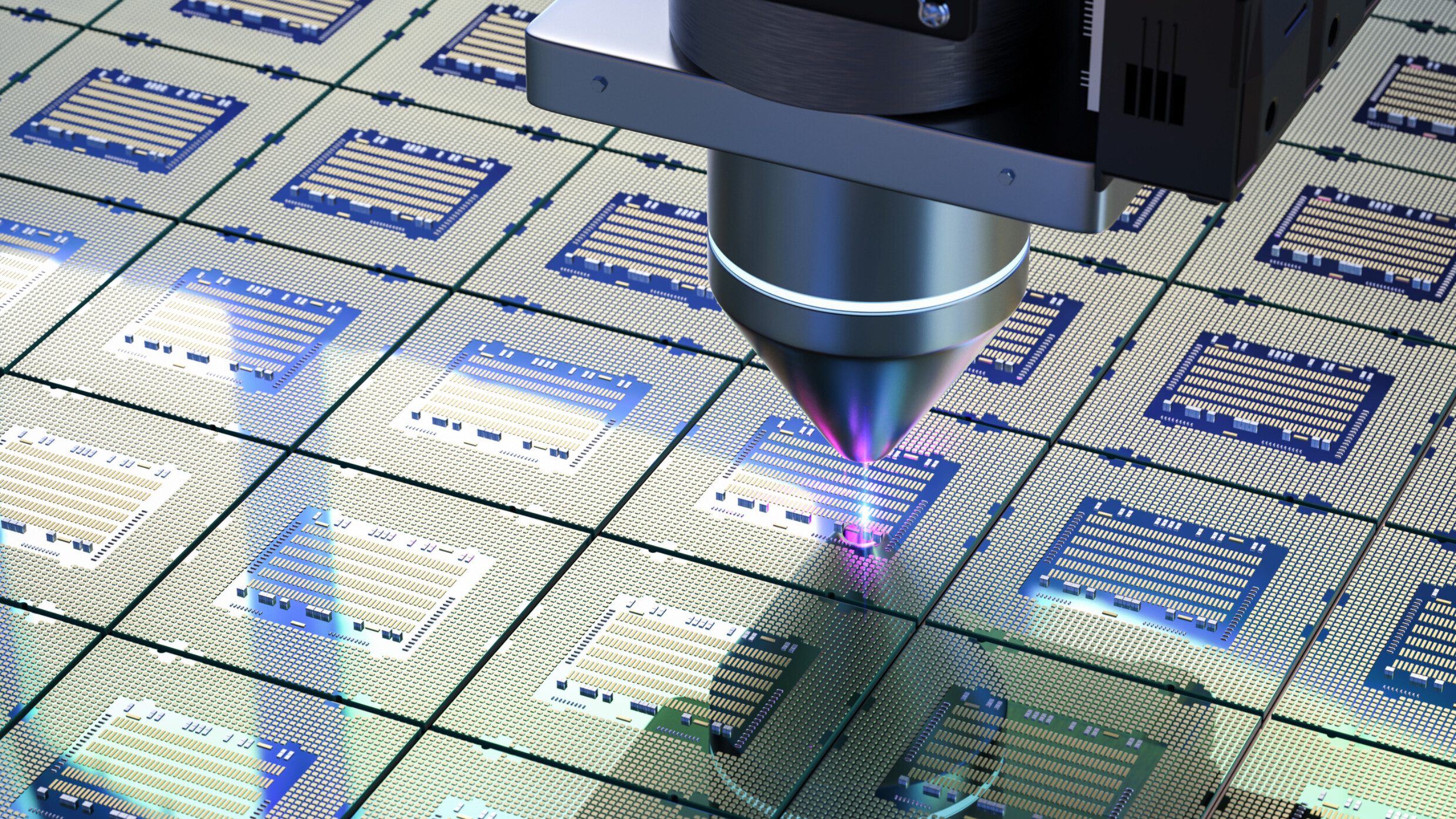

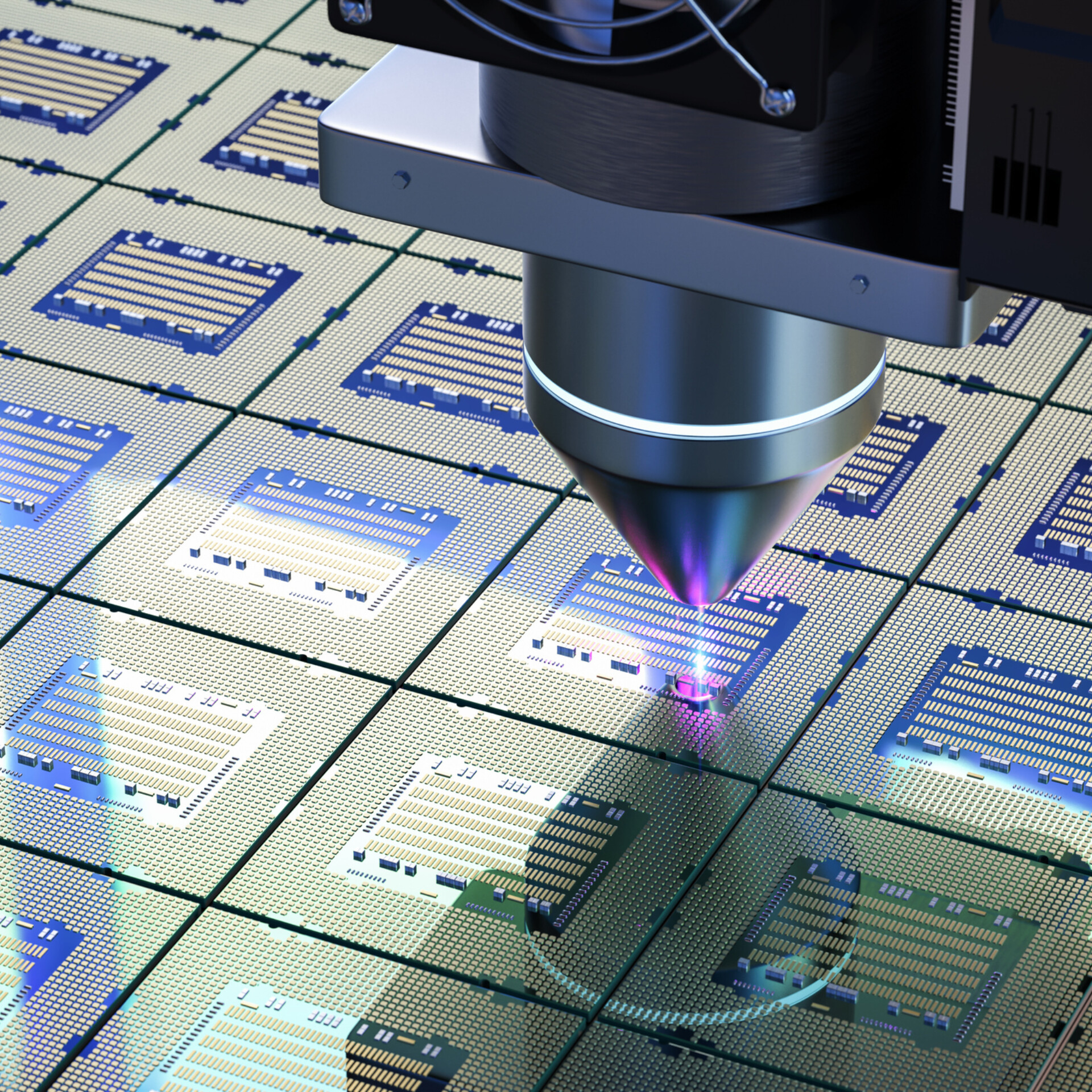

© iStock/Kittipong Jirasukhanont

In 1925, Werner Heisenberg developed the crucial approach that formed the basis of quantum mechanics. But Heisenberg did not develop these revolutionary theories all on his own. Rather, groundbreaking experiments in the preceding years had made quantum mechanics necessary as a new theory and a paradigm shift.

Those experiments had revealed findings that were impossible to reconcile with physics as it was then known and established. For example, in 1901, Max Planck recognised that black-body radiation is emitted only in so-called energy packets, that is, discrete portions of energy. In 1905, Albert Einstein explained the photoelectric effect, in which electrons are emitted from materials irradiated with light, by postulating that light itself consists of such energy packets (photons).

Subsequently, physics gained a much more precise understanding of atoms, in particular thanks to Niels Bohr, who between 1911 and 1918 developed a model of the hydrogen atom. In the years that followed, prominent physicists worked on explanations of the behaviour of atoms and particles, which were later confirmed experimentally. Wave–particle duality, whereby a quantum object such as an electron shows both wave-like and particle-like behaviour, proved fundamental to this understanding. The duality can be observed, for instance, in scattering experiments: unlike classical particles (such as pellets from a shotgun), quantum particles (such as electrons) fired at a double slit give rise to an interference pattern, that is, alternating lines of higher and lower intensity behind the slit.

A real revolution in everyday life

This ever more precise understanding of particles led to the first quantum revolution: it made possible a number of technological innovations on which our modern life depends. These include transistors and microprocessors, which are fundamental to computers. But our understanding of atoms and particles is also the basis of cameras, satellites, lasers and medical imaging.

It would take a few more decades until science gained a greater understanding of how atoms and particles can be manipulated, that is, controlled. At first, this only happened in laboratories, but in recent years we have seen more and more steps towards industrialising these findings and experiments. Thus, a second quantum revolution is on the way. This ability to control individual particles makes it possible to develop quantum sensors able to precisely measure very small magnetic fields. Such quantum sensors might find an application in future medical imaging systems that could be used to measure brain waves.

Yet another revolution – and we are right in the middle

Quantum computers are also part of the second quantum revolution. Here, individual atoms, ions or other particles are controlled so that they form qubits (quantum bits). A qubit requires a quantum mechanical system with two states, 0 and 1, that can be reliably prepared and easily distinguished. These states can be modified by various operations, so-called gates. Although quantum computers were proposed as early as the 1980s, it took several decades until it was possible to build them in laboratories, and still longer for them to become available to external users, for example via cloud access.
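To make the notions of qubit state and gate more concrete, here is a minimal sketch in plain Python. It is a classical simulation, not code for real quantum hardware, and the names `apply_gate` and `probabilities` are chosen purely for illustration. A qubit is represented by two complex amplitudes, and a gate by a 2×2 matrix.

```python
import math

# A qubit state is a pair of complex amplitudes (a0, a1) for the basis states 0 and 1.
ZERO = (1 + 0j, 0 + 0j)

# Gates are 2x2 unitary matrices, written here as nested tuples.
S = 1 / math.sqrt(2)
HADAMARD = ((S, S), (S, -S))   # turns state 0 into an equal superposition of 0 and 1
PAULI_X = ((0, 1), (1, 0))     # flips 0 <-> 1, the quantum analogue of a NOT gate


def apply_gate(gate, state):
    """Multiply the 2x2 gate matrix with the 2-component state vector."""
    return (
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    )


def probabilities(state):
    """Born rule: the probability of each measurement outcome is |amplitude|^2."""
    return (abs(state[0]) ** 2, abs(state[1]) ** 2)


flipped = apply_gate(PAULI_X, ZERO)          # state 1
superposed = apply_gate(HADAMARD, ZERO)      # equal superposition of 0 and 1
restored = apply_gate(HADAMARD, superposed)  # amplitudes interfere, giving state 0 again
```

Measuring `superposed` would yield 0 or 1 with equal probability, whereas a second Hadamard gate lets the amplitudes interfere so that `restored` is back in state 0.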

Quantum computers make use of the principles of quantum mechanics, in particular the superposition of quantum mechanical states, the entanglement of states, and interference effects, to speed up certain algorithms compared to their classical counterparts. It must be noted, however, that this “speed-up” initially relates “only” to the number of computation steps: a quantum computer should require significantly fewer steps to perform certain computations than a conventional classical computer, or even the most powerful supercomputer in the world. In recent years, these theoretical expectations have been backed by a number of groundbreaking experiments. In 2019, for example, Google claimed so-called “quantum supremacy” with its 53-qubit Sycamore quantum chip for a specific computation that took only 200 seconds on Sycamore, whereas the supercomputers available at the time were estimated to need 10,000 years.
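Entanglement can be illustrated with a small classical simulation of two qubits. The following sketch, again in plain Python with illustrative function names that are not taken from any library, prepares a so-called Bell state by applying a Hadamard gate to the first qubit and then a controlled-NOT gate.

```python
import math


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state.

    Amplitudes are ordered as |00>, |01>, |10>, |11>.
    """
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return (s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11))


def cnot(state):
    """Flip the second qubit when the first qubit is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return (a00, a01, a11, a10)


start = (1.0, 0.0, 0.0, 0.0)            # both qubits in state 0
bell = cnot(hadamard_on_first(start))    # (|00> + |11>) / sqrt(2)
probs = [abs(a) ** 2 for a in bell]      # outcome probabilities for 00, 01, 10, 11
```

In the resulting state only the outcomes 00 and 11 have non-zero probability: measuring one qubit fixes the result for the other, which is the hallmark of entanglement.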

While that claim caused controversy at the time, and better classical algorithms were subsequently proposed, the experiment spawned massive investments into building quantum computers, with the expectation that they would lead to disruptive change in industry very shortly. The most prominent example of such an expected disruption is probably Shor’s algorithm, an efficient quantum algorithm for finding the prime factors of large numbers.

Once this algorithm can in fact be applied to large numbers, common encryption methods in use today will be at risk, as they depend on the difficulty of factorising large numbers. But Shor’s algorithm presupposes quantum computers that can execute very long algorithms with a large number of qubits and many operations. This is not the case yet, as current quantum computers are still too small and too prone to errors.
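The link between factoring and quantum computing becomes clearer when the classical part of Shor’s algorithm is written out. Factoring a number n can be reduced to finding the order r of a randomly chosen number a modulo n, that is, the smallest r with a^r ≡ 1 (mod n). The sketch below is a purely classical illustration: the helper `find_order` searches for r by brute force, which is exactly the step a quantum computer would be expected to speed up. The function names are illustrative only.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Requires gcd(a, n) == 1. In Shor's algorithm this is the step
    that would be handed to the quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order(n, a):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None if this choice of a does not work
    and another value of a should be tried.
    """
    common = gcd(a, n)
    if common != 1:
        return (common, n // common)  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                   # odd order: retry with another a
    half = pow(a, r // 2, n)          # a square root of 1 modulo n
    if half == n - 1:
        return None                   # trivial square root: retry with another a
    p = gcd(half - 1, n)
    return (p, n // p)


factors_of_15 = factor_via_order(15, 7)
```

For tiny numbers such as 15 the brute-force search is instantaneous. For numbers with hundreds of digits it becomes infeasible on classical hardware, which is why the encryption methods mentioned above are currently considered secure.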

Will quantum computers find their way into practical applications?

Besides Shor’s algorithm, quantum computers are expected to help solve combinatorial optimisation problems in industry more efficiently (relevant, for example, to route planning in logistics), to simulate materials more precisely and quickly (relevant, for example, to drug development), and to significantly accelerate machine learning. Here, quantum computing represents a completely new computing paradigm.

In recent years, thanks to the availability of the first quantum computers, small and noisy though they are, more and more prospective applications of quantum computing have been studied and analysed. Of primary interest is in which areas quantum computers may actually lead to greater precision or faster computations. These analyses showed that quantum computers are not likely to be generally “better” than classical computers; rather, quantum computers will in future assist classical computers, with the two performing computations jointly. This is similar to how, today, a graphics processor supports the central processing unit in certain computations (as in video games).

Classical and quantum computers working hand in hand

In recent years, with quantum computers still small and prone to faults, so-called variational algorithms in particular have been regarded as promising, as they require few resources of a quantum computer. Variational algorithms rely on an interplay between classical and quantum computers: for example, the classical computer updates the parameters of the computation that runs on the quantum computer.
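The interplay between the two machines can be sketched as a simple loop. In the following toy example the “quantum computer” is replaced by an exactly simulated one-qubit circuit, a rotation by an angle `theta` followed by a measurement, and the classical side runs plain gradient descent on `theta`. The so-called parameter-shift rule obtains the gradient from two further circuit evaluations. All names and values (`quantum_expectation`, the learning rate, the number of steps) are assumptions made for illustration and are not taken from any specific project.

```python
import math


def quantum_expectation(theta):
    """Stand-in for the quantum computer.

    Prepares the one-qubit state Ry(theta)|0> and returns the expectation
    value <Z> = P(0) - P(1). For this circuit the exact value is cos(theta).
    """
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_gradient(theta):
    """Gradient of <Z> from two extra circuit evaluations at theta +/- pi/2."""
    plus = quantum_expectation(theta + math.pi / 2)
    minus = quantum_expectation(theta - math.pi / 2)
    return 0.5 * (plus - minus)


def minimise(theta=0.3, learning_rate=0.4, steps=100):
    """Classical outer loop: gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


best_theta, best_energy = minimise()
```

Every iteration of the loop requires several calls to the quantum device. On real hardware each of these calls is itself a noisy estimate obtained from many repeated runs.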

Some of the most recent studies, however, have shown that the idea of directly obtaining a quantum advantage by using variational quantum algorithms is a little too simple. This is primarily because even quantum algorithms with few quantum gates are quite susceptible to the noise of current quantum hardware. Moreover, to obtain sufficiently good results, variational quantum algorithms have to be executed a great many times, that is, with a large number of “shots”. Together, these two aspects mean that variational quantum algorithms are generally neither “better” nor “faster” than computations on classical computers. In addition, various studies have shown that quantum computers have relatively low gate speeds, which means that while a quantum computer may require fewer computation steps, it will be rather slow in taking those steps.
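Why so many shots are needed follows from simple statistics: each shot yields only a single 0 or 1, so an expectation value has to be estimated as an average, and the statistical error of that average shrinks only with the square root of the number of shots. The sketch below simulates this with Python’s random number generator. The probability `p_zero = 0.8` is a hypothetical value chosen for illustration, and hardware noise is ignored entirely.

```python
import math
import random


def sample_expectation(p_zero, shots, rng):
    """Estimate <Z> = P(0) - P(1) from a finite number of simulated measurements."""
    zeros = sum(1 for _ in range(shots) if rng.random() < p_zero)
    return (2 * zeros - shots) / shots


def standard_error(p_zero, shots):
    """Statistical error of the estimate; it shrinks only as 1/sqrt(shots)."""
    return 2 * math.sqrt(p_zero * (1 - p_zero) / shots)


rng = random.Random(0)  # fixed seed so that the run is repeatable
p_zero = 0.8            # hypothetical state with exact <Z> = 0.6
for shots in (100, 10_000):
    estimate = sample_expectation(p_zero, shots, rng)
    print(shots, round(estimate, 4), round(standard_error(p_zero, shots), 4))
```

Gaining one additional decimal digit of precision therefore costs roughly a hundred times as many shots, and this cost is incurred in every iteration of a variational algorithm.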

Step by step into medium-sized enterprises

What is to be done? All these findings from recent years suggest that quantum computers must be applied to industrial problems in a very targeted manner, by deliberately choosing quantum algorithms that do not require too many iterations with classical computers. Furthermore, the computation steps on classical and quantum computers must be viewed as a complete package and optimised jointly, taking into account the quantum hardware used, which in turn should be adapted to the specific application.

This co-design of hardware, software, and algorithms is being studied by the Fraunhofer Institute for Cognitive Systems IKS in its internationally pioneering Bench-QC (application-driven benchmarking of quantum computers) project. In the recently completed QuaST (quantum-enabling services and tools for industrial applications) project, a consortium led by Fraunhofer IKS developed the prototype of an abstraction layer. It is designed so that industrial users, and medium-sized enterprises in particular, do not need a deep understanding of the subtleties of quantum computing but instead receive a guide to solving their industrial optimisation problem with quantum support.

Fraunhofer IKS will present this solution, packaged in a software tool, the QuaST Decision Tree, at TRANSFORM in March 2025 and at World of Quantum in June 2025.

Yet all these studies have also shown that quantum computing hardware still needs significant further development before quantum computers yield an actual economic benefit. Specifically, this will require so-called error-corrected qubits, which make quantum computers less susceptible to faults and noise and thus able to perform longer computations. In the past year, various universities and companies have made significant progress towards implementing such error-corrected qubits, which gives reason to hope that fault-tolerant quantum computers will indeed be available in a few years’ time. One example is the recently published experiment with Google’s Willow chip.

From an application point of view, therefore, the prospects for 2025 are encouraging overall. Alongside a better understanding of which applications are relevant for quantum computing and which are not, continuous and quite rapid progress is being made on quantum computing hardware. Business and science thus have good reason to expect that the second quantum revolution will find specific applications in the coming years. Your pizza delivery, however, is still more likely to be sent on its way by a classical computer than by a quantum computer.