A quick look at safe reinforcement learning

Reinforcement learning (RL) is one of the three main machine learning (ML) paradigms. Unlike the other two, supervised and unsupervised learning, RL learns through direct interaction with its surroundings. A brief introduction follows.

© iStock.com/nedomacki

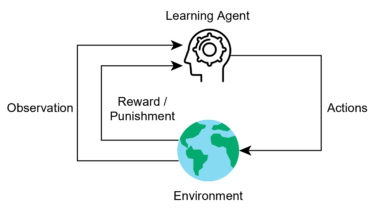

In the reinforcement learning framework, an agent observes the environment and chooses the action it deems appropriate for the situation it observes. The environment then returns a feedback signal, called a reward, that attaches a value to this action. The reward allows the agent to determine whether its decision was good and should be repeated in similar situations, or whether it was a bad decision with an undesirable outcome that ought to be avoided.

© Fraunhofer IKS

The components of the reinforcement learning framework

Take, for example, a mouse trying to find a piece of cheese at the far end of a maze. If this were an RL problem, the observation would be what the mouse sees, the action would be its choice of direction, and the reward would be the snack at the end of the labyrinth.
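The mouse example can be written down as a minimal agent-environment loop. The sketch below is purely illustrative: the maze is reduced to a hypothetical one-dimensional corridor, and the names `step`, `run_episode`, `random_policy` and `always_right` are assumptions made for this example, not part of any library.

```python
import random

# Hypothetical 1-D "maze": a corridor of cells 0..4 with the cheese in the last cell.
CHEESE = 4
ACTIONS = (-1, +1)  # step left or step right


def step(position, action):
    """Environment: apply the action and return (observation, reward, done)."""
    new_position = min(max(position + action, 0), CHEESE)
    reward = 1.0 if new_position == CHEESE else 0.0
    return new_position, reward, new_position == CHEESE


def run_episode(policy, max_steps=100):
    """Run one agent-environment loop and return the total reward collected."""
    observation, total_reward = 0, 0.0
    for _ in range(max_steps):
        action = policy(observation)                            # agent decides
        observation, reward, done = step(observation, action)   # environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(observation):
    """A mouse that wanders without using what it sees."""
    return random.choice(ACTIONS)


def always_right(observation):
    """A mouse that has already learned where the cheese is."""
    return +1
```

Calling `run_episode(always_right)` returns `1.0`, because the mouse walks straight to the cheese. With `random_policy`, the result depends on chance.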

This concept is not new, but it has seen something of a revival in recent years because it can solve problems with too many conceivable states to enumerate every possibility. One such scenario is an artificial intelligence (AI) playing an Atari game. Another made headlines when an AI defeated professionals at the game of Go [1].

How to make reinforcement learning safe

Although RL has achieved good results in complex applications, it is certainly not the go-to method for safety-critical applications. For one, the AI has to apply the learned policy safely, which is difficult to guarantee for every situation. Furthermore, the agent has to explore unfamiliar states to learn from an environment. The usual exploration strategies rely on the agent occasionally choosing random actions. This is a simple but effective way of compelling the agent to experience novel situations while following a policy, but the random action is taken even if it is clearly unsafe in the given setting. Learning in real-world safety-critical systems would therefore require an exploration algorithm that ensures safety, which is why this type of learning is largely confined to controlled scenarios and simulation software.
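A widely used strategy of this kind is epsilon-greedy exploration. The following is a minimal sketch, assuming the agent keeps a value estimate per action in a dictionary. The function name and the example actions are hypothetical.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-valued one.

    q_values maps each action to the agent's current value estimate.
    """
    actions = list(q_values)
    if rng.random() < epsilon:
        return rng.choice(actions)          # explore: ignores value and safety alike
    return max(actions, key=q_values.get)   # exploit: follow the current policy
```

With `q_values = {"brake": 1.0, "accelerate": -5.0}` and `epsilon = 0.1`, the agent still picks a uniformly random action in roughly one step out of ten, so it will sometimes accelerate. Nothing in the rule itself knows whether that is dangerous.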

To close this gap, some safe RL methods have been proposed. They feature algorithms that factor various safety aspects into the equation. There are two categories of safe RL methods – safe optimization and safe exploration [2].

Safe optimization algorithms make safety aspects part of the policy itself. The optimization criteria can incorporate constraints to guarantee that policy parameters always remain within a defined safe space. These algorithms can also accommodate a measure of risk, where risk is usually defined as the likelihood of experiencing unsafe situations. It is important to note that these methods do not necessarily enforce safety during training and often assume that the agent will enter unsafe states before learning a safe policy.
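As a rough illustration of a risk-constrained criterion, the sketch below picks the best-performing policy among those whose estimated risk stays under a threshold. It is a deliberately simplified stand-in for the constrained optimization methods surveyed in [2], not one of them. The episode records, the policy names and the `max_risk` threshold are all assumptions made for this example.

```python
def estimate_risk(episodes):
    """Risk as the fraction of episodes that visited an unsafe state."""
    return sum(1 for ep in episodes if ep["unsafe"]) / len(episodes)


def mean_return(episodes):
    """Average total reward over the recorded episodes."""
    return sum(ep["return"] for ep in episodes) / len(episodes)


def select_policy(rollouts, max_risk):
    """Return the name of the highest-return policy whose risk is within max_risk.

    rollouts maps a policy name to a list of episode records. Returns None
    when no candidate satisfies the constraint.
    """
    feasible = {
        name: mean_return(eps)
        for name, eps in rollouts.items()
        if estimate_risk(eps) <= max_risk
    }
    return max(feasible, key=feasible.get) if feasible else None


# Hypothetical rollout data for two candidate policies.
rollouts = {
    "aggressive": [{"return": 10.0, "unsafe": True}, {"return": 12.0, "unsafe": False}],
    "cautious": [{"return": 7.0, "unsafe": False}, {"return": 8.0, "unsafe": False}],
}
```

Here `select_policy(rollouts, max_risk=0.1)` returns `"cautious"`, whereas a looser `max_risk=0.5` admits `"aggressive"`. Note that the risk estimate comes from episodes in which unsafe states were actually visited, which mirrors the caveat above about safety during training.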

Safe exploration is all about changing the exploratory process to prevent the agent from choosing unsafe actions during training. One option is to draw on external knowledge to guide the agent during its exploration. This could be an initial briefing specifying unsafe regions that the AI may not enter during exploration. Or it could be a human teacher or other decision-making entity advising the exploring agent.
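One simple way to picture exploration guided by external knowledge is to filter out forbidden actions before the agent chooses. The sketch below assumes a hypothetical predicate `is_unsafe(state, action)` that encodes the initial briefing or the teacher's advice. It is an illustration of the idea, not a specific published algorithm.

```python
import random


def safe_epsilon_greedy(state, q_values, epsilon, is_unsafe, rng=random):
    """Epsilon-greedy restricted to actions the external knowledge allows.

    is_unsafe(state, action) is a hypothetical predicate supplied up front,
    e.g. by a human teacher or a list of forbidden regions.
    """
    allowed = [a for a in q_values if not is_unsafe(state, a)]
    if not allowed:
        raise ValueError(f"no safe action available in state {state!r}")
    if rng.random() < epsilon:
        return rng.choice(allowed)             # explore among safe actions only
    return max(allowed, key=q_values.get)      # exploit among safe actions only


def steps_into_cell_zero(state, action):
    """Example knowledge: cell 0 of a corridor is an unsafe region."""
    return state + action == 0
```

In state `1`, the action `-1` would lead into the forbidden cell, so `safe_epsilon_greedy(1, {-1: 5.0, +1: 0.0}, 1.0, steps_into_cell_zero)` always returns `+1`, even though the agent is exploring at random and currently values `-1` more highly.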

Another way of safely exploring the environment is to use risk as a metric. In contrast to the risk-centered approach to optimization discussed above, this risk metric does not constrain the agent. Instead, it serves to guide the agent to avoid risky situations while learning.
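To make the difference between guiding and constraining concrete, the sketch below turns per-action risk estimates into exploration probabilities. Riskier actions become less likely but are never ruled out. The risk values, the `temperature` parameter and the softmax-style weighting are assumptions chosen for illustration.

```python
import math
import random


def risk_weighted_probs(risks, temperature=1.0):
    """Turn per-action risk estimates into exploration probabilities.

    Lower risk means higher probability, but every action keeps a nonzero
    chance, so the risk guides the agent without hard-constraining it.
    """
    weights = {a: math.exp(-r / temperature) for a, r in risks.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


def explore(risks, temperature=1.0, rng=random):
    """Sample one exploratory action according to the risk-weighted probabilities."""
    probs = risk_weighted_probs(risks, temperature)
    actions = list(probs)
    return rng.choices(actions, weights=[probs[a] for a in actions], k=1)[0]
```

A lower `temperature` makes the agent more risk-averse. A very high one approaches uniform random exploration.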

What is deep reinforcement learning?

A common solution to the complications of dealing with a large number of states is to build a function approximator into the agent. This workaround is necessary because large problems involve a vast number of state-action pairs, and it is simply not feasible for an agent to learn the value of every pair individually.
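The idea can be sketched with the simplest kind of approximator, a linear one. Instead of storing one value per state-action pair, the agent learns a handful of weights. The feature function below is a hypothetical hand-made example, the update is a standard semi-gradient Q-learning step, and the handling of terminal states is omitted for brevity.

```python
def features(state, action):
    """Hypothetical hand-made features for a position on a line and a step."""
    return [1.0, float(state), float(action), float(state * action)]


def q_value(weights, state, action):
    """Approximate Q(state, action) as a weighted sum of features."""
    return sum(w * x for w, x in zip(weights, features(state, action)))


def td_update(weights, state, action, reward, next_state, actions,
              alpha=0.01, gamma=0.9):
    """One semi-gradient Q-learning step; returns the updated weight list."""
    target = reward + gamma * max(q_value(weights, next_state, a) for a in actions)
    error = target - q_value(weights, state, action)
    return [w + alpha * error * x
            for w, x in zip(weights, features(state, action))]
```

Four weights cover any number of positions here, whereas a lookup table would need one entry per state-action pair. The price is that the estimates are only as good as the chosen features allow.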

Deep neural networks (DNNs) have served to solve high-dimensional machine learning problems, for example in computer vision applications. A DNN can do the same for RL when used as a function approximator that extracts features and learns how to control agents in problems of such magnitude. Combining classical RL with deep learning in this way is called deep reinforcement learning.

This combination of DNNs and RL lends itself to more use cases, but it also comes with the same caveats that the machine learning community has had to contend with when using deep models in safety-critical applications. Machine learning systems are vulnerable to adversarial attacks, and RL policies can be hacked in much the same way [4]. This is why agents have to be inherently robust to adversarial attacks.

Agents’ robustness is not the only stumbling block. Uncertainty estimation and explainability also need to be improved before RL models can be widely accepted as a safe decision-making alternative [5], [6].

Towards intelligent decision-making agents

The RL research community is working to solve these problems and transcend the limitations described above. The day is approaching fast when safe RL methods will give rise to intelligent decision-making agents that solve complex tasks in real-world applications by choosing only actions that do no harm or damage.

Safe RL is a focal point of research at the Fraunhofer Institute for Cognitive Systems IKS. First, we aim to develop uncertainty-aware agents that can estimate their own uncertainty accurately and make sound decisions in uncertain environments. Second, we are striving to train models that generalize and perform safely in environments other than the training scenarios, the idea being to ensure safety even when the agent has to make decisions in unfamiliar situations.

[1] https://www.theguardian.com/te...

[2] García, Javier, and Fernando Fernández. "A comprehensive survey on safe reinforcement learning." Journal of Machine Learning Research 16.1 (2015): 1437-1480.

[3] Alshiekh, Mohammed, et al. "Safe reinforcement learning via shielding." Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 32. No. 1. 2018.

[4] Gleave, Adam, et al. "Adversarial policies: Attacking deep reinforcement learning." arXiv preprint arXiv:1905.10615 (2019).

[5] Clements, William R., et al. "Estimating risk and uncertainty in deep reinforcement learning." arXiv preprint arXiv:1905.09638 (2019).

[6] Fischer, Marc, et al. "Online robustness training for deep reinforcement learning." arXiv preprint arXiv:1911.00887 (2019).

This activity is being funded by the Bavarian Ministry for Economic Affairs, Regional Development and Energy as part of a project to support the thematic development of the Institute for Cognitive Systems.