Artificial neural networks

Computer vision with less data has to be learned

Compared with the human brain, artificial neural networks consume much more power, even when completing simple discrimination tasks. Event-based vision offers a promising approach to solving this problem. Yet there are still a number of challenges to overcome.

© iStock.com/Alcuin

Computer vision (CV) is an extensive field of research that investigates how computers can extract meaningful information from digital images or videos in a way similar to human vision. The key technology used in this field is artificial neural networks (ANNs), which learn to solve a wide variety of tasks from sequences of images, an approach known as frame-based vision. Frame-based vision is already used with great success in industry, but its energy consumption is many times higher than that of the human brain: relatively simple tasks, such as distinguishing between a dog and a cat on the basis of pictures, require more than 200 W, whereas the human brain is able to handle far more complex tasks with just 20 W.

In recent years, a new field of research has emerged to address this issue: event-based vision, which takes cues from human biology. Read this blog post to learn more about event-based vision and how it can help solve CV tasks more efficiently.

Image-based vision

Widely used imaging cameras process incident light over a specific period of time in order to produce digital images. These images are then read by ANNs at a specific frequency in order to recognize patterns and solve various tasks. For example, these tasks may involve identifying different objects (object recognition), both detecting these objects in an image and localizing them (object detection), or drawing precise boundaries to delineate an object from its background (semantic segmentation).

Although considerable progress has been made compared to older, conventional image recognition methods, learning and completing these tasks consumes a significant amount of energy: image sequences contain a lot of redundancy, as the information from all the pixels has to be stored and processed for each image, even if only a small area changes. In real-time applications in particular, such as robotics or autonomous driving, the required computing power can make it difficult to process the continuously changing environment quickly and efficiently.

Vision based on event data

Event cameras

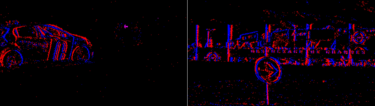

Computer vision based on event data is a new approach which, inspired by how human vision works, doesn’t suffer from the same problems. For example, the human eye is very sensitive to rapid changes, whereas the brain ignores stationary objects. Event cameras make use of this mechanism and measure the local temporal change in brightness at each pixel, independently of and asynchronously to all other pixels. Instead of a sequence of images with a constant frequency, the output is a stream of events in which each event encodes the time, location and sign of the brightness change (see Figure 1).
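To make the event format concrete, here is a minimal sketch in Python. It is not how a real sensor works internally: a real event camera reacts asynchronously per pixel, whereas this sketch approximates that by comparing sampled frames. The `Event` type, the function name `events_from_frames`, the use of log-brightness and the threshold value are all assumptions chosen for illustration.

```python
import math
from typing import NamedTuple


class Event(NamedTuple):
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = brightness increased, -1 = brightness decreased


def events_from_frames(frames, timestamps, threshold=0.2):
    """Emit an event whenever a pixel's log-brightness has changed by at
    least `threshold` since that pixel last fired (hypothetical model)."""
    # Per-pixel reference level, initialised from the first frame.
    ref = [[math.log(v + 1e-3) for v in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                level = math.log(value + 1e-3)
                diff = level - ref[y][x]
                if abs(diff) >= threshold:
                    events.append(Event(t, x, y, 1 if diff > 0 else -1))
                    ref[y][x] = level  # only this pixel's reference moves
    return events


# 2x2 scene in which only the top-left pixel changes: brighter, then darker.
frames = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[0.9, 0.5], [0.5, 0.5]],
    [[0.4, 0.5], [0.5, 0.5]],
]
events = events_from_frames(frames, timestamps=[0.0, 0.001, 0.002])
for event in events:
    print(event)
```

The three unchanged pixels produce no output at all, which is where the data reduction comes from. Because the timestamps here are inherited from the frames, the sketch cannot reproduce the microsecond resolution of a real sensor.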

The advantages of this form of information processing are very low latency in the microsecond range, very low power consumption averaging a few milliwatts, and a wide dynamic range (brightness differences of 140 decibels do not pose any issue) [1]. Conventional image cameras, by comparison, consume several watts on average, and their latency of over 100 microseconds is also significantly higher than that of event cameras.

© Fraunhofer IKS

Fig. 1: Part of a scene from the event-based dataset for autonomous driving, created by [5]. A stream of events over a time span of 100 milliseconds is visualized. Red or blue pixels indicate a local increase or decrease in brightness.

Spiking neural networks

The high energy efficiency of the human brain is primarily the result of its impulse-driven communication. These impulses are sparse and asynchronous, just like the events of an event camera. As the format of event data is fundamentally different from that of image data, alternative technologies are needed that can process event data efficiently and thereby exploit the considerable potential of event-based sensors.

One possibility is to use a new generation of ANNs called spiking neural networks (SNNs), which reproduce the structure and dynamics of biological neurons far more accurately than conventional ANNs. In general, ANNs are also inspired by the human brain, which consists of billions of neurons. However, they differ from biological networks in fundamental areas, such as information processing and their learning methodology. Information between two artificial neurons is forwarded immediately, without any consideration of time, in the form of real values (see Figure 2, left). Spiking neurons, on the other hand, communicate by means of impulses (spikes), which they receive and process asynchronously and independently of each other. Both the number of spikes over a given period of time and the exact timing of the spikes carry information (see Figure 2, right).

However, not all SNNs are the same. Depending on the degree of abstraction, the individual neuron models differ in terms of their complexity: examples include the Leaky Integrate-and-Fire (LIF), the Spike Response or the Hodgkin-Huxley model. The most commonly used neuron model is the LIF model with dynamics that are modeled by differential equations. As its input, the LIF neuron receives binary pulses over time which affect its internal membrane potential. If only a few pulses reach the neuron, the potential falls again. If enough pulses reach the neuron within a short period of time causing the potential to rise above a defined threshold, the neuron in turn emits a pulse which travels to subsequent neurons. These dynamics make SNNs inherently asynchronous and sparse, making them ideal event data processors.
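The behavior described above can be sketched in a few lines of Python. This is a simplified, discrete-time version of an LIF neuron, not the differential-equation form. The function name `simulate_lif` and the values for `weight`, `decay` and `threshold` are assumptions picked so that the effect is easy to see.

```python
def simulate_lif(input_spikes, weight=0.6, decay=0.8, threshold=1.0):
    """Discrete-time leaky integrate-and-fire neuron (illustrative values).

    Returns the output spike train and the membrane potential after each step.
    """
    potential = 0.0
    output, trace = [], []
    for spike_in in input_spikes:
        # Leak: the potential decays. Integrate: an input pulse raises it.
        potential = decay * potential + weight * spike_in
        if potential >= threshold:
            output.append(1)   # the neuron emits a pulse ...
            potential = 0.0    # ... and its potential is reset
        else:
            output.append(0)
        trace.append(potential)
    return output, trace


# Pulses spread out in time: the potential leaks away between them.
sparse_out, _ = simulate_lif([1, 0, 0, 0, 1, 0, 0, 0])
# Pulses close together: the potential crosses the threshold.
dense_out, _ = simulate_lif([1, 1, 1, 0, 0, 0, 0, 0])

print("sparse input ->", sparse_out)
print("dense input  ->", dense_out)
```

With these values, the widely spaced pulses never trigger an output, while two pulses in quick succession do. The output is itself a sparse binary sequence, which is what subsequent neurons receive.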

© Fraunhofer IKS

Fig. 2: Left: An artificial neuron, which forms a weighted sum of three real values and a bias value and applies a non-linear function (f) to the result.

Right: A spiking neuron, which receives three weighted pulse currents that contribute to an internal membrane potential. A pulse is generated when the potential exceeds a predefined threshold value.

Neuromorphic processors

Another component of computer vision based on event data is neuromorphic data processing on specialized hardware (neuromorphic processors). Like event cameras and SNNs, neuromorphic processors aim to come closer to the capabilities of the human brain by imitating its structure and function. One advantage offered by neuromorphic chips is that they enable events to be processed without an intermediate step. Thanks to their design, neurons and synapses (links between neurons) can be implemented directly on a chip to carry event-driven electrical signals of varying strengths.

Event-driven information processing means that only the sub-areas of the system influenced by an event are addressed. All other sub-areas are inactive and therefore do not consume any energy. This makes neuromorphic processors suitable hardware accelerators for SNNs and ideal data processors for event cameras, much as GPUs are for ANNs and image cameras.
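The idea that only the affected sub-areas do any work can be illustrated in software, although this is only an analogy and not a model of any particular chip. The sketch below compares a dense layer update with an event-driven one and counts operations as a rough stand-in for energy. The function names and the weight values are assumptions made for the example.

```python
def dense_update(weights, inputs):
    """Frame-style update: every input contributes a multiply-add to every
    output, whether or not it carries information."""
    ops = 0
    out = [0.0] * len(weights)
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            out[i] += w * inputs[j]
            ops += 1
    return out, ops


def event_driven_update(weights, active_inputs):
    """Event-style update: only synapses of inputs that spiked are touched."""
    ops = 0
    out = [0.0] * len(weights)
    for j in active_inputs:
        for i in range(len(weights)):
            out[i] += weights[i][j]  # a spike is binary, so no multiply
            ops += 1
    return out, ops


# Two output neurons, four inputs, only input 2 spikes in this time step.
weights = [[0.5, -0.25, 1.0, 0.75],
           [0.25, 0.5, -0.5, 1.0]]
spikes = [0, 0, 1, 0]
active = [j for j, s in enumerate(spikes) if s]

dense_out, dense_ops = dense_update(weights, spikes)
event_out, event_ops = event_driven_update(weights, active)
print(dense_out, dense_ops)
print(event_out, event_ops)
```

Both updates give the same result, but the event-driven version performs two operations instead of eight. The sparser the input, the larger this gap becomes.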

In summary, the term event-based vision covers an entire pipeline for the information processing of event-based data. Due to its energy efficiency and low latency, and when applied in its entirety, event-based vision can be used to run AI algorithms on edge devices instead of in the cloud, making intelligent systems faster and more secure.

A success story with obstacles

With all these advantages, however, the question arises as to why computer vision based on event data isn’t the talk of the tech world. Perhaps one reason is that there are no efficient and convincing methods for training SNNs to date. Because the pulse function is discontinuous, it is not differentiable, so the backpropagation algorithm, which has driven the success of ANNs, cannot be applied directly.
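The differentiability problem can be seen in a small Python sketch. The step function below has a slope of zero everywhere except at the threshold, where it jumps, so a gradient-based method gets no usable signal. One common workaround, shown here purely as an illustration and not as the method used by any specific project mentioned in this post, is to substitute the derivative of a smooth function during training. The sigmoid shape and the `slope` value are assumptions.

```python
import math


def spike(v, threshold=1.0):
    """Pulse function: 1 if the potential reaches the threshold, else 0."""
    return 1.0 if v >= threshold else 0.0


def numeric_gradient(v, eps=1e-4):
    """Finite-difference slope of the pulse function around v."""
    return (spike(v + eps) - spike(v - eps)) / (2 * eps)


def surrogate_gradient(v, threshold=1.0, slope=5.0):
    """Derivative of a sigmoid centered on the threshold, used as a smooth
    stand-in for the true derivative (illustrative choice)."""
    s = 1.0 / (1.0 + math.exp(-slope * (v - threshold)))
    return slope * s * (1.0 - s)


for v in (0.5, 0.9, 1.0, 1.1):
    print(f"v={v}: spike={spike(v)}, "
          f"true slope={numeric_gradient(v):.1f}, "
          f"surrogate={surrogate_gradient(v):.3f}")
```

The true slope is zero away from the threshold and blows up at it, whereas the stand-in is finite everywhere and largest near the threshold, which gives a learning algorithm something to follow.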

Three different approaches are currently available for training SNNs. One approach is to convert trained ANNs into SNNs, which is arguably the most successful in terms of performance. However, several time steps are required for the information processing, which significantly reduces energy efficiency.

Another approach is to use approximations of the backpropagation algorithm. Various projects have already achieved some success in the field of gesture recognition and pose determination, and also in more complex tasks such as object detection.

The most biologically plausible approach is unsupervised, local learning methods based on the principle of spike timing-dependent plasticity (STDP). However, as things currently stand, only very shallow SNNs can be trained in this way, and with limited accuracy.
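As a rough illustration of what "local" means for STDP, the following Python sketch implements a textbook pair-based rule: the change in a synapse's weight depends only on the timing of one input spike and one output spike at that synapse. The function name `stdp_delta`, the exponential shape and the constants `a_plus`, `a_minus` and `tau` are assumptions for the example and are not taken from the works cited here.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for a single pre/post spike pair (times in ms).

    Pre before post strengthens the synapse, post before pre weakens it,
    and the effect fades as the two spikes move further apart in time.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


weight = 0.5
for t_pre, t_post in [(10.0, 15.0), (30.0, 32.0), (60.0, 50.0)]:
    change = stdp_delta(t_pre, t_post)
    weight += change
    print(f"pre={t_pre} ms, post={t_post} ms -> change={change:+.5f}, "
          f"weight={weight:.5f}")
```

No labels and no global error signal are involved, which is why the rule counts as unsupervised and local. It also shows why stacking many layers is hard: nothing in the update tells a synapse how it contributed to the final output.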

Another issue is the lack of a uniform and user-friendly software framework. These days, ANNs are very easy to design and train, and established frameworks such as PyTorch or TensorFlow provide an extensive construction kit for this purpose. For SNNs, however, there is still no uniform framework that researchers use consistently. Examples of commonly used frameworks include Lava DL/SLAYER [2], SpikingJelly [3] and Norse [4], although these differ greatly in terms of the implemented neuron models, the learning algorithms used, user-friendliness and support for a hardware interface.

The limited availability of the hardware is also a major problem. Although there are some suppliers of event cameras (for example, Prophesee, iniVation, Samsung) and hardware implementations of neuromorphic chips (for example, Loihi/Loihi2, SpiNNaker, Akida), these products are either impossible to buy or their purchase price vastly exceeds that of conventional hardware. This makes it difficult to create the data sets that are required for learning a wide range of tasks. Some approaches convert images to event data instead, but the high temporal resolution is lost in the process. That being said, the first comprehensive data sets in the field of autonomous driving [5, 6] point to a positive trend.

In addition, SNNs currently tend to be trained and tested on GPUs, which is associated with high costs and makes the development of complex architectures difficult. Fraunhofer IKS is currently conducting research in partnership with other Fraunhofer institutes on the scaling of SNNs to efficiently implement highly complex tasks such as object detection for event data.

[1] Gallego, Guillermo et al. “Event-Based Vision: A Survey.” IEEE Transactions on Pattern Analysis and Machine Intelligence 44 (2022): 154-180.

[2] Lava DL (2021), GitHub Repository, https://github.com/lava-nc/lava-dl

[3] SpikingJelly (2020), GitHub Repository, https://github.com/fangwei123456/spikingjelly

[4] Pehle, Christian, and Pedersen, Jens Egholm, Norse - A deep learning library for spiking neural networks (2021), GitHub Repository, https://github.com/norse/norse

[5] Perot, E., Tournemire, P.D., Nitti, D.O., Masci, J., & Sironi, A. (2020). Learning to Detect Objects with a 1 Megapixel Event Camera. ArXiv, abs/2009.13436.

[6] Tournemire, P.D., Nitti, D.O., Perot, E., Migliore, D., & Sironi, A. (2020). A Large Scale Event-based Detection Dataset for Automotive. ArXiv, abs/2001.08499.