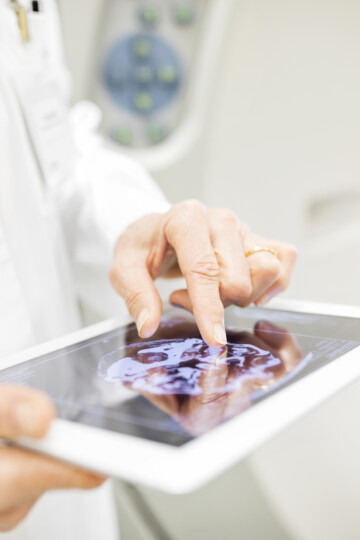

Interview with Mario Trapp and Florian Geissler

»We combine the strengths of GPT with those of established safety tools«

The new Fraunhofer IKS Safety Companion is an AI agent designed to support human developers with safety-critical tasks. It uses large language models (LLMs) to understand a given task and to identify and implement the appropriate safety concepts. In this interview, Mario Trapp, Executive Director of Fraunhofer IKS, and Florian Geissler, Senior Scientist at the institute, introduce the Safety Companion.