
The Story of Resilience, Part 2

The Complexity Challenge

Resilience is the key ingredient for the next generation of cognitive cyber-physical systems. After all, many visions of the future can only become reality if the systems become aware of themselves, their goals and their context, so that they can adapt to unknown and changing environments while always optimizing utility and preserving safety. But resilience comes at the price of increased complexity. In this article, part 2 of our series on resilience, we look at the nature of complexity to better understand where engineering has to start in order to manage it.


Did you miss the first part of our series? Read it here: Understanding Resilience

Most of today's cognitive cyber-physical systems must operate in highly dynamic, hard-to-predict environments, despite the inevitable uncertainties that this entails. The days when systems could be squeezed into the static, rigid, very constrained mold of a fully specified context are over. Therefore, most engineers intuitively feel that it would be futile to predict all contingencies statically at development time and to develop exactly one solution that works in all conceivable and inconceivable cases.

This is why the basic idea of resilience can already be found, mostly unknowingly and implicitly, in many systems today. However, resilience and the complexity that comes with it must be mastered. Implicit ad hoc approaches do not scale; they lead to a massive increase in complexity and thus inevitably to massive quality problems.

Resilience is based on the idea that systems become aware of their goals and their environment and adapt themselves to optimize their goal achievement. To this end, the system must somehow be taught how it needs to adapt in which context. Simple if-then-else rules quickly reach their limits for an obvious reason: the infinite variety of environmental situations cannot be squeezed into a finite list. Simple rules such as "If you see parked trucks at the side of the road, slow down (because people could be overlooked)" therefore do not scale.
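To make the scaling problem concrete, here is a minimal sketch of hard-coded adaptation rules. The context keys (`parked_trucks_roadside`, `visibility_m`) and action names are hypothetical and chosen only for illustration; the point is that any situation the rule author did not anticipate simply falls through.

```python
from typing import Callable, Optional

# Hypothetical sensed context: feature name -> value (names are illustrative only).
Context = dict[str, object]

# A finite, hand-written list of (condition, action) rules, checked in order.
RULES: list[tuple[Callable[[Context], bool], str]] = [
    (lambda c: c.get("parked_trucks_roadside") is True, "slow_down"),
    (lambda c: c.get("visibility_m", 1000) < 50, "slow_down"),
]


def adapt(context: Context) -> Optional[str]:
    """Return the action of the first matching rule, or None if no rule applies."""
    for condition, action in RULES:
        if condition(context):
            return action
    return None  # the situation was not anticipated by whoever wrote the rules


print(adapt({"parked_trucks_roadside": True}))  # slow_down
print(adapt({"parked_buses_roadside": True}))   # None: a parked bus was never listed
```

Every new situation (buses, vans, a hedge hiding a driveway) needs yet another rule, which is exactly why such lists do not scale.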

Complicated, complex or chaotic?

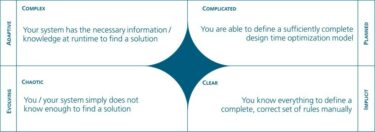

Since complexity plays a major role, it is worth examining it more carefully. To this end, we can make use of the Cynefin framework [1], which David Snowden originally developed as a conceptual framework to support decision-making. It helps us understand which classes of problems we as humans can handle in which way. If we transfer the framework to the development of resilient systems, we get the picture shown in Figure 1.

If we know everything about the problem and can establish clear and unambiguous if-then rules to control it, the problem falls into the lower right quadrant, "clear". Only if one can correctly name the complete set of all necessary and sufficient rules is it possible to hard-code resilience adaptation rules into a system without further methodological underpinning.

© Mario Trapp

Figure 1 - The Cynefin framework [1] applied to resilience

As mentioned before, in practice this will only be possible in very few cases. First, the context can only be simplified to a certain degree before the system becomes practically useless. Second, it would not be sufficient to restrict the system's flexibility to a number of system-level operating modes small enough to be managed with ad hoc adaptation rules. To gain sufficient flexibility, resilient systems adapt individual components in a modular way, and the configuration of the overall system results from the combination of the component configurations. In addition, one must consider the switching process, because in most cases a system cannot simply stop, adapt completely, and then start again. Instead, it must adapt the individual components on the fly, in the correct sequence and always keeping itself in a consistent state.
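The two effects described above, a configuration space that multiplies with every component and a switching sequence constrained by dependencies, can be sketched in a few lines. The components, their options and the dependency graph below are hypothetical; the ordering uses Python's standard `graphlib.TopologicalSorter`. Whether producers or consumers should be switched first is an assumption that depends on the direction of the change.

```python
import math
from graphlib import TopologicalSorter
from itertools import product

# Hypothetical components and their configuration options (illustrative only).
COMPONENT_CONFIGS: dict[str, list[str]] = {
    "camera": ["full_res", "low_res", "off"],
    "lidar": ["on", "off"],
    "perception": ["fusion", "camera_only", "lidar_only"],
    "planner": ["nominal", "cautious", "minimal_risk"],
}

# Assumed data dependencies: each component consumes the outputs of the listed ones.
DEPENDS_ON: dict[str, set[str]] = {
    "perception": {"camera", "lidar"},
    "planner": {"perception"},
}


def configuration_space_size(configs: dict[str, list[str]]) -> int:
    """Number of system configurations: the product of per-component options."""
    return math.prod(len(options) for options in configs.values())


def switching_order(depends_on: dict[str, set[str]], consumers_first: bool = False) -> list[str]:
    """Dependency-respecting order in which to reconfigure components on the fly.

    Producers first suits upgrades (turn a sensor on before relying on it);
    consumers first suits degradation (stop relying on a sensor before turning it off).
    """
    order = list(TopologicalSorter(depends_on).static_order())
    return order[::-1] if consumers_first else order


# Every combination of component configurations (not all of them are valid or safe).
ALL_SYSTEM_CONFIGS = list(product(*COMPONENT_CONFIGS.values()))
```

With only four components the space already has 3 * 2 * 3 * 3 = 54 combinations, and each additional component multiplies it again, before the transitions between configurations are even considered.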

This results in many interrelationships and dependencies that exceed our human cognitive capacity. If we nevertheless tried to use simple rules, inconsistencies and thus errors would inevitably occur. The Cynefin framework would call this a complicated problem, represented by the upper right quadrant: all the key relationships are known, but no solution can be found without a high level of expertise and sound analysis. For resilience, this means that one needs an analytical model that can be used to determine optimized and safe adaptations. In this case, we speak of planned adaptation.

© Fraunhofer IKS

Prof. Dr.-Ing. habil. Mario Trapp: "Many visions of the future can only become reality if the systems become aware of themselves, their goals and their context."

On the basis of such a model, all adaptation rules can already be determined during development and "only" have to be checked, selected and implemented by the system at runtime. However, without a corresponding model for analysis and optimization, i.e. without engineering, the problem cannot be adequately solved. On the one hand, this kind of planned adaptation still limits flexibility. On the other hand, systems have to simplify their context to a set of parameters they can sense anyway. And, at least for the time being, it is unrealistic for systems to modify themselves continuously; instead, adaptation is limited to changing the system's architecture and parameters within a predefined configuration space. Moreover, planned adaptation has the obvious and huge advantage that all possible system configurations are known and can thus be verified at development time. Furthermore, such analysis models allow us to reduce the configuration space to those configurations that have the greatest impact on the system's utility.
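Planned adaptation can be pictured as a table computed at development time and merely looked up at runtime. The minimal sketch below assumes a hypothetical discrete context (`clear`, `rain`, `fog`), a tiny configuration space, a made-up safety limit table standing in for the development-time safety analysis, and a toy utility function. Every configuration is checked offline; the runtime only selects.

```python
from itertools import product

CONTEXTS = ["clear", "rain", "fog"]        # hypothetical sensed context classes
SPEEDS_KMH = [30, 50, 80]                  # hypothetical configuration parameter
PERCEPTION = ["camera_only", "fusion"]     # hypothetical component configurations

# Assumed result of the development-time safety analysis: max safe speed per
# (context, perception mode). A real system would derive this from its analysis model.
MAX_SAFE_SPEED = {
    ("clear", "camera_only"): 80, ("clear", "fusion"): 80,
    ("rain", "camera_only"): 30, ("rain", "fusion"): 50,
    ("fog", "camera_only"): 0, ("fog", "fusion"): 30,
}


def is_safe(context: str, speed: int, perception: str) -> bool:
    return speed <= MAX_SAFE_SPEED[(context, perception)]


def utility(speed: int, perception: str) -> int:
    # Toy utility: faster is better, sensor fusion costs a little (e.g. energy).
    return speed - (5 if perception == "fusion" else 0)


def build_adaptation_table() -> dict[str, tuple[int, str]]:
    """Development time: enumerate, verify and rank every configuration per context."""
    table = {}
    for context in CONTEXTS:
        safe = [(s, p) for s, p in product(SPEEDS_KMH, PERCEPTION) if is_safe(context, s, p)]
        if not safe:
            raise ValueError(f"no verified configuration for context {context!r}")
        table[context] = max(safe, key=lambda cfg: utility(*cfg))
    return table


ADAPTATION_TABLE = build_adaptation_table()


def select_configuration(context: str) -> tuple[int, str]:
    """Runtime: only look up the pre-verified decision."""
    return ADAPTATION_TABLE[context]
```

With these assumed numbers the table yields `(80, "camera_only")` for clear weather, `(50, "fusion")` for rain and `(30, "fusion")` for fog. The strength of this design is that nothing the system can ever do at runtime escapes development-time verification; its weakness is that the context must fit into the predefined classes.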

Traditional engineering is reaching its limits

Resilience based on planned adaptation can still be mastered with classical engineering. With the new generation of systems, however, we are reaching the limits of "pre-calculation" and thus the limits of traditional engineering paradigms. Although it would theoretically be possible to build an analytical model in many cases, it is simply not practical to predict, describe, and analyze the context in all its infinite facets at the necessary level of detail.

Therefore, the analytical model would have to be simplified and abstracted to such an extent that the flexibility and thus the usefulness of the system would be restricted too much. Hence, we often end up in a situation where it is either impossible or not (economically) reasonable to statically analyze all cause-effect relationships.

At that point, we have to acknowledge that the context has become complex, which places us in the upper left quadrant of the Cynefin framework. It is also the point at which we must move from the realm of planned reconfigurability into the sphere of adaptive systems. In other words, we move from classical engineering to self-adaptive systems by enabling the systems to take over engineering tasks themselves. They must become "aware" of their goals, their own state, and their context in order to adapt themselves to the current situation.

From a practical perspective, this initially means that we enable the system to interpret the current situation and to find an optimized configuration itself at runtime. Even though we "only" shift the analysis to runtime, this already has many advantages. First, it tremendously reduces the analysis space: it is not necessary to analyze every possible situation, only the very concrete situation at a given point in time. Consequently, we can use much more precise analyses. Second, it is not necessary to derive a complete solution space; it is sufficient to find one solution at a time for the current situation. Third, the analysis does not need to rely on static (worst-case) assumptions but can use the actual parameters available at runtime, so that many uncertainties can be resolved and the result can be much more accurate.
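The third advantage can be illustrated with a deliberately simplified stopping-distance model (reaction distance plus braking distance v² / (2 · μ · g)). The candidate speeds, the 80 m sight distance, the 1 s reaction time and both friction values are assumptions for illustration only. The same analysis is run once with a static worst-case friction and once with a friction value assumed to be measured at runtime, and in each case it finds just one solution for the current situation.

```python
G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance_m(speed_kmh: float, friction: float, reaction_time_s: float = 1.0) -> float:
    """Simplified model: reaction distance plus braking distance v^2 / (2 * mu * g)."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_time_s + v ** 2 / (2 * friction * G)


def max_safe_speed(candidates_kmh: list[float], friction: float, sight_distance_m: float) -> float:
    """One solution for the current situation: fastest candidate that can stop within sight."""
    safe = [s for s in candidates_kmh if stopping_distance_m(s, friction) <= sight_distance_m]
    return max(safe, default=0)


CANDIDATES_KMH = [30, 50, 80, 100]
WORST_CASE_FRICTION = 0.1  # assumed design-time worst case (e.g. icy road)

# Static analysis must assume the worst case for every situation.
design_time_speed = max_safe_speed(CANDIDATES_KMH, WORST_CASE_FRICTION, sight_distance_m=80)
# Runtime analysis can use the actually measured value (assumed: dry road, mu = 0.8).
runtime_speed = max_safe_speed(CANDIDATES_KMH, friction=0.8, sight_distance_m=80)
```

With these assumed numbers the worst-case analysis permits only 30 km/h, whereas the runtime analysis permits 100 km/h on the same road with the same sight distance, because the uncertainty about friction has been resolved by measurement.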

Analysis models at the resilience level are conceptual models

Particularly for unknown unknowns, however, such an adaptive approach reaches its limits. The runtime analysis is limited to the concepts and interrelationships that we integrated into the analysis model. It is important to note, however, that we are not talking about machine learning (ML) problems here, such as driverless cars hitting a camel just because the ML-based object detector was not trained on camels. The analysis models we use at the resilience level are conceptual models. They do not need to know the concept of a camel to identify that there is a solid physical body and that it is physically impossible to drive through a solid body (no matter whether it is a camel, a horse, a tree or an alien spaceship).
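A conceptual check of this kind can be sketched without any object classes at all. In the minimal example below, `DetectedObject`, its fields and the lane geometry are hypothetical; the assumption is that solidity and position come from range sensing rather than from a classifier, so an unrecognized object still blocks the path.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DetectedObject:
    distance_m: float            # distance ahead along the planned path
    lateral_offset_m: float      # offset of the object's center from the path centerline
    width_m: float
    is_solid: bool               # assumed to come from range sensing, not from classification
    label: Optional[str] = None  # classifier output; may be missing or wrong


def path_blocked(objects: list[DetectedObject],
                 lane_half_width_m: float = 1.5,
                 horizon_m: float = 60.0) -> bool:
    """Any solid body overlapping the path within the horizon blocks it, whatever its label."""
    for obj in objects:
        # Nearest edge of the object lies within the lane corridor.
        overlaps = abs(obj.lateral_offset_m) - obj.width_m / 2 < lane_half_width_m
        if obj.is_solid and overlaps and 0 <= obj.distance_m <= horizon_m:
            return True
    return False


# An unclassified solid body on the path (a camel the detector never saw) blocks the path.
print(path_blocked([DetectedObject(30.0, 0.0, 2.0, is_solid=True, label=None)]))  # True
```

The decision never consults `label`, which is what makes the check independent of whether the object is a camel, a horse or a tree.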

DevOps will play an increasingly important role – also for engineering resilient systems.

Executive Director of the Fraunhofer Institute for Cognitive Systems IKS

Nonetheless, we all know that there will be situations where the physical and other concepts the system knows are simply not sufficient. Things in their context that systems cannot sense, or concepts and interrelationships they do not understand, limit their ability to adapt. In the Cynefin framework, we have then reached the chaotic area at the bottom left. As humans, we would have to act somehow in such situations, observe how the world reacts, and gradually try to learn new concepts from it.

For cognitive systems, however, learning new concepts on a semantic level will remain elusive for the foreseeable future. In such an unknown situation, a technical system has no choice but to react in a safe way, even if this means a complete emergency stop. The system can then inform its human engineers that it has reached its limits. Following the ideas of continuous engineering or DevOps, this means that the system lets us know that it has encountered unknown situations in which it needs additional support. Combined with approaches such as shadow-mode operation, where new subsystems already run in the field without actively influencing the main system, they can even trigger improvements before they are deployed as part of the product. Thus, in addition to the loop of self-adaptive systems, there is a higher-level loop in which we humans take over to evolve the system by extending or modifying its resilience models.
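The interplay of safe reaction, reporting and shadow mode can be sketched as a small monitor. Everything here is hypothetical: a planner is assumed to return `None` when it does not understand a situation, `SAFE_FALLBACK` stands in for whatever safe reaction the system has, and `reports` stands in for the channel back to the engineers. The shadow planner is evaluated on every step but never actuated.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A planner returns a command, or None if it does not understand the situation.
Planner = Callable[[dict], Optional[str]]

SAFE_FALLBACK = "minimal_risk_stop"  # hypothetical safe reaction


@dataclass
class FieldMonitor:
    active: Planner
    shadow: Optional[Planner] = None
    reports: list[dict] = field(default_factory=list)  # would be sent to the engineers

    def step(self, situation: dict) -> str:
        command = self.active(situation)
        if command is None:
            # Unknown situation: react safely and tell the engineers.
            self.reports.append({"kind": "unknown_situation", "situation": situation})
            command = SAFE_FALLBACK
        if self.shadow is not None:
            candidate = self.shadow(situation)  # evaluated in the field, never actuated
            if candidate != command:
                self.reports.append({"kind": "shadow_disagreement", "situation": situation,
                                     "active": command, "shadow": candidate})
        return command


# Toy planners for illustration: the shadow candidate handles a case the active one cannot.
def active_planner(s: dict) -> Optional[str]:
    return "cruise" if s.get("known") else None


def shadow_planner(s: dict) -> Optional[str]:
    return "cruise" if s.get("known") else "slow_down"


monitor = FieldMonitor(active_planner, shadow_planner)
```

Calling `monitor.step({"known": False})` returns the safe fallback and records two reports: the unknown situation itself and the fact that the shadow candidate would have reacted differently. This is the kind of field evidence the higher-level, human-driven engineering loop can act on.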

Summary

Our human cognitive abilities have clear limits. Only for very clear problems, where we know exactly what to do in an if-then manner, can we hard-code exactly these rules in software. In most cases, however, the problem is at least complicated, i.e. we need analytical models to help us understand the relationships and find optimized solutions. We therefore need dedicated engineering methods. Currently, we are in a phase in which even such classical engineering approaches are no longer sufficient. The context in which the systems have to function is too unpredictable, too complex. To master this complexity, the systems have to be adaptive: they have to adapt themselves, i.e. take over at runtime some of the engineering tasks that would be too complex for us at development time.

However, since they can still only adapt based on the concepts that we have taught them, adaptivity also has clear limits. Once these are reached, a chaotic problem arises. The chaos can only be mastered by us humans, by giving the system a broader understanding of concepts and thus reducing the chaotic problem back to a complex problem that the system can master.

All of this points to the major challenge of resilient cognitive systems: classical engineering will not scale. Instead, we need self-adaptive systems that can, within limits, engineer themselves at runtime to optimize their utility in a given context without violating safety requirements. And approaches such as DevOps will play an increasingly important role – also for engineering resilient systems.

References

[1] D. Snowden, "Complex acts of knowing: paradox and descriptive self-awareness," J. Knowl. Manag., vol. 6, no. 2, pp. 100-111, 2002.

Read the third part here: The Story of Resilience, Part 3 – Understanding Self-Adaptive Systems