
Flexible and dynamic systems

Dynamic safety assurance in end-to-end architectures

When a system malfunctions or fails to provide a required service in time, the consequences can include human injuries or fatalities. Such situations are considered safety-critical, for example in automated driving and human-robot interaction. The systems involved must therefore exhibit a high degree of dependability. To determine a system's dependability, scientists at Fraunhofer IKS are developing an adaptive, dynamic and flexible safety analysis process that is designed to overcome the limitations of current methods.


Dependability is the ability to avoid failures that are more frequent and more severe than is acceptable. Among the available techniques, fault tree analysis (FTA) is the most frequently used approach to dependability analysis. However, because FTA is largely performed by hand, the analysis process is intricate, time-consuming and costly. Moreover, the analysis is conducted on informal system models, which introduces inconsistencies and discrepancies and, in turn, leads to inaccurate evaluations of system dependability.
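To make the FTA idea concrete, here is a minimal Python sketch that evaluates a small fault tree. It assumes statistically independent basic events, each appearing only once in the tree (shared events would require cut-set or BDD-based evaluation). The tree structure, component names and probabilities are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class BasicEvent:
    name: str
    probability: float  # failure probability of the component over the mission time


@dataclass
class Gate:
    kind: str  # "AND" or "OR"
    children: List["Node"] = field(default_factory=list)


Node = Union[BasicEvent, Gate]


def top_event_probability(node: Node) -> float:
    """Failure probability of a node, assuming independent, non-repeated basic events."""
    if isinstance(node, BasicEvent):
        return node.probability
    probs = [top_event_probability(child) for child in node.children]
    if node.kind == "AND":
        # the gate output fails only if all inputs fail
        return math.prod(probs)
    if node.kind == "OR":
        # the gate output fails if at least one input fails
        return 1.0 - math.prod(1.0 - p for p in probs)
    raise ValueError(f"unknown gate kind: {node.kind}")


# Hypothetical tree: the function fails if both redundant sensors fail OR the ECU fails.
tree = Gate("OR", [
    Gate("AND", [BasicEvent("sensor_a", 1e-3), BasicEvent("sensor_b", 1e-3)]),
    BasicEvent("ecu", 1e-4),
])
print(f"{top_event_probability(tree):.4e}")
```

The result, about 1.01e-4, is dominated by the non-redundant ECU. Reading off such a dominant contributor by hand becomes impractical once trees grow, which is part of the motivation for automation.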

Model-based dependability analysis

For many years, researchers have tried to reduce the workload of dependability analysis by automatically synthesizing dependability-related data from system models. This effort gave rise to the field of model-based dependability analysis (MBDA). Because system models change continuously during design, MBDA offers a compelling advantage over traditional methods: it enables fast, iterative analysis of the interim versions of the formal models, which provide more data. As a result, the dependability analysis results stay synchronized with the evolving system design and its recorded performance characteristics. Reusing parts and components from the previous version of the system model streamlines the process even further.
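The core MBDA idea, deriving dependability artifacts from the system model instead of drawing them by hand, can be sketched as follows. This is a minimal illustration, not the Fraunhofer IKS tooling: it assumes a hypothetical architecture model in which each function is implemented by redundant components, so a function fails only if all of its components fail and the system fails if any function fails. Re-running the synthesis on a new model version, built by reusing the previous one, yields an updated result without manual rework.

```python
import math

# Hypothetical architecture model: function -> {redundant component: failure probability}
v1 = {
    "sensing": {"camera": 1e-3, "radar": 1e-3},
    "planning": {"ecu_1": 1e-4},
}
# Next design iteration: reuse v1 and only add a redundant ECU to "planning"
v2 = {**v1, "planning": {**v1["planning"], "ecu_2": 1e-4}}


def synthesize_fault_tree(model):
    """Derive a fault tree: the system fails if ANY function fails (OR gate);
    a function fails only if ALL of its redundant components fail (AND gate)."""
    return ("OR", [("AND", [("BE", name, p) for name, p in components.items()])
                   for components in model.values()])


def evaluate(node):
    """Top-event probability, assuming independent basic events."""
    kind = node[0]
    if kind == "BE":
        return node[2]
    probs = [evaluate(child) for child in node[1]]
    if kind == "AND":
        return math.prod(probs)
    return 1.0 - math.prod(1.0 - p for p in probs)


for version, model in (("v1", v1), ("v2", v2)):
    print(version, f"{evaluate(synthesize_fault_tree(model)):.3e}")
```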

However, MBDA still lacks several key features, including the capability to perform dynamic dependability analysis and an uncertainty quantification approach for automated analyses. First, such automation is relevant for developing a fine-grained system blueprint during the design phase with adaptive multivariate safety verification. The aim is to achieve the best possible safety-to-performance balance with the available resources before the system is developed. Second, dynamic and formalized safety algorithms are crucial for fluent End-to-End (E2E) architectures. The state of such an architecture changes constantly at runtime, which requires safety monitoring and the enforcement of policies based on predefined key performance indicators (KPIs).
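Runtime safety monitoring with KPI-based policies could look like the following minimal sketch. The KPI names, thresholds and mitigation actions are hypothetical placeholders. Treating a missing measurement as a violation is one possible fail-safe design choice, not a requirement stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiPolicy:
    kpi: str               # name of the monitored KPI (hypothetical)
    limit: float           # predefined threshold
    higher_is_worse: bool  # True for latency-like KPIs, False for availability-like KPIs
    action: str            # mitigation to enforce on violation (hypothetical)


def enforce(policies, snapshot):
    """Return the actions triggered by one snapshot of runtime KPI values."""
    actions = []
    for policy in policies:
        value = snapshot.get(policy.kpi)
        if value is None:
            # fail-safe choice: a missing measurement counts as a violation
            actions.append(policy.action)
            continue
        violated = value > policy.limit if policy.higher_is_worse else value < policy.limit
        if violated:
            actions.append(policy.action)
    return actions


policies = [
    KpiPolicy("e2e_latency_ms", 100.0, True, "switch_to_degraded_mode"),
    KpiPolicy("availability", 0.999, False, "migrate_to_backup_node"),
]
print(enforce(policies, {"e2e_latency_ms": 140.0, "availability": 0.9995}))
```

In a running E2E architecture, `enforce` would be called on every new KPI snapshot. Here, only the latency policy fires.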

Adaptive safety analysis process

At Fraunhofer IKS, we aim to close these gaps by complementing MBDA safety analysis tooling with additional algorithms that make the process adaptive, dynamic and flexible. In this blog post, we introduce the steps of this adaptive safety analysis process, which paves the way to dynamic and fully automatic runtime safety control.

1. System modeling

System models typically include all the elements needed for extensive performance-oriented analyses, yet safety engineers still have to complete most of the safety analysis manually. Automation becomes possible when the performance-oriented model can be converted effortlessly into a safety model, with the numeric information required for the safety analysis supplied in addition. If the system architecture or behavior changes, the safety part of the model is updated as well. The model is supplemented with a code generator (translator) that produces code for one of the many available MBDA tools or platforms. The model can then be handed over to a safety simulation environment and run through analysis cycles without the engineer having to deal with tool-specific syntax and safety constructs.
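A translator from the performance model to a safety model, with a tool-specific code generator, might be sketched as below. The performance model's field names are assumptions. MTBF values are converted to constant failure rates, which presumes exponentially distributed failure times. The output imitates the Galileo textual fault tree format; the exact syntax accepted by a given MBDA tool should be checked against that tool's documentation.

```python
# Hypothetical performance-oriented model: redundancy groups with MTBF values in hours.
performance_model = {
    "name": "perception",
    "groups": {
        "sensors": {"camera": 20_000.0, "radar": 50_000.0},  # redundant pair
        "compute": {"ecu": 100_000.0},
    },
}


def to_safety_model(model):
    """Add the numeric safety data: constant failure rate lambda = 1 / MTBF (per hour)."""
    return {
        "top": model["name"],
        "groups": {group: {comp: 1.0 / mtbf for comp, mtbf in comps.items()}
                   for group, comps in model["groups"].items()},
    }


def emit_galileo(safety_model):
    """Generate Galileo-style fault tree text: the top event is an OR over the groups,
    each group is an AND over its redundant components."""
    top, groups = safety_model["top"], safety_model["groups"]
    lines = [f'toplevel "{top}";']
    lines.append(f'"{top}" or ' + " ".join(f'"{g}"' for g in groups) + ";")
    for group, comps in groups.items():
        lines.append(f'"{group}" and ' + " ".join(f'"{c}"' for c in comps) + ";")
    for comps in groups.values():
        for comp, rate in comps.items():
            lines.append(f'"{comp}" lambda={rate:.3e};')
    return "\n".join(lines)


print(emit_galileo(to_safety_model(performance_model)))
```

Separating the conversion step (`to_safety_model`) from the emitter keeps the safety model tool-independent. Supporting another MBDA tool would only require another emitter.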

It is also beneficial to perform mathematically complex calculations at this step and assign the result, as a single aggregated value, to the corresponding component of the safety model. This minimizes the complexity of any further safety modeling that might be required, keeps the process transparent, and ensures that the calculations are not limited by the calculation and representation features of the MBDA tool.
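As an example of a calculation that is easier to perform before the model reaches an MBDA tool, the sketch computes the failure probability of a k-out-of-n voting subsystem of identical, independent components and stores it as one aggregated value. The subsystem, its parameters and the plain dictionary standing in for the safety model are hypothetical.

```python
import math


def k_out_of_n_failure_probability(k, n, p):
    """Failure probability of a k-out-of-n subsystem of identical, independent components.

    The subsystem fails when fewer than k components work; p is the failure
    probability of a single component. Summing the failure terms directly avoids
    the cancellation error of computing 1 - P(success) for very reliable subsystems.
    """
    q = 1.0 - p  # probability that a single component works
    return sum(math.comb(n, i) * q**i * p**(n - i) for i in range(k))


# Hypothetical 2-out-of-3 voting subsystem; the safety model only sees one aggregated value.
safety_model = {"voter_2oo3": k_out_of_n_failure_probability(2, 3, 1e-3)}
print(safety_model)
```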

Although automating the safety analysis requires additional effort early in the design, it reduces the time needed for each iteration of the constantly changing system design. The approach also lowers the probability of human error and helps to identify correlations between the safety and performance models, which supports a better understanding of the system and improved models.

2. Safety modeling

Safety tooling is a main pillar of the process. This step starts by importing the code generated in the previous step into the tool's working environment. Once the data from the modeling step has been imported, the tool should be able to visualize the entire system design without additional effort. If no fine-tuning or corrections are needed, the model can be passed directly to the simulation phase, followed by automatic generation of the fault tree and the corresponding table. The simulation data is first examined with the tool's visualization features, then exported and passed to the last step of the process chain. Note that this step can also be used in stand-alone mode, independently of the upstream and downstream steps, by defining and drawing the system parameters and components manually in the tool-specific syntax.
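The text does not specify which table the tool generates. A common FTA output is a table of minimal cut sets, and the sketch below derives one from a small, hypothetical fault tree by naive top-down expansion. This expansion is exponential in the worst case; production tools typically rely on more efficient algorithms such as binary decision diagrams.

```python
import math
from itertools import product


def cut_sets(node):
    """Expand a fault tree into cut sets (not yet minimal).
    node is ("BE", name), ("OR", [children]) or ("AND", [children])."""
    kind = node[0]
    if kind == "BE":
        return [frozenset([node[1]])]
    child_sets = [cut_sets(child) for child in node[1]]
    if kind == "OR":
        # any cut set of any input is a cut set of the gate
        return [cs for sets in child_sets for cs in sets]
    if kind == "AND":
        # one cut set from every input, merged
        return [frozenset().union(*combo) for combo in product(*child_sets)]
    raise ValueError(f"unknown node kind: {kind}")


def minimal_cut_sets(sets):
    """Remove duplicates and any cut set that strictly contains another one."""
    unique = set(sets)
    minimal = [s for s in unique if not any(other < s for other in unique)]
    return sorted(minimal, key=lambda s: (len(s), sorted(s)))


# Hypothetical fault tree and basic-event probabilities
tree = ("OR", [("AND", [("BE", "camera"), ("BE", "radar")]),
               ("BE", "ecu"),
               ("AND", [("BE", "ecu"), ("BE", "camera")])])
probs = {"camera": 1e-3, "radar": 1e-3, "ecu": 1e-4}

print(f"{'order':<6}{'cut set':<20}{'probability':>12}")
for cs in minimal_cut_sets(cut_sets(tree)):
    p = math.prod(probs[event] for event in cs)  # independent events
    print(f"{len(cs):<6}{', '.join(sorted(cs)):<20}{p:>12.2e}")
```

The cut set {ecu, camera} is dropped because it contains the single-point failure {ecu}. Single-point failures like this are exactly what such a table is meant to surface.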

3. Data analysis

In this step, referred to as post-processing, the data from the safety simulation is analyzed thoroughly. The main goal is to automate the formal verification of safety compliance for the model under test. No manual effort should be needed whenever a new version of the model is introduced. Instead, analysis functions have to carry out an automatic or semi-automatic formal verification against the predefined safety goals and requirements. The results should indicate to what extent the system deviates from the desired service level agreement (SLA).
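In its simplest form, automated verification against predefined requirements could compare each simulated metric with an upper bound and report the relative deviation. The metric names and bounds below are hypothetical. A real compliance check would likely cover richer requirement types than simple upper bounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    metric: str   # metric produced by the safety simulation (hypothetical name)
    bound: float  # maximum permitted value, assumed > 0


def verify(results, requirements):
    """Check simulation results against upper-bound requirements.
    deviation > 0 means the bound is exceeded by that fraction of the bound."""
    report = {}
    for req in requirements:
        value = results[req.metric]
        report[req.metric] = {
            "passed": value <= req.bound,
            "deviation": (value - req.bound) / req.bound,
        }
    return report


requirements = [Requirement("top_event_probability", 1e-4),
                Requirement("unavailability", 1e-3)]
results = {"top_event_probability": 1.5e-4, "unavailability": 5e-4}
for metric, outcome in verify(results, requirements).items():
    print(metric, outcome)
```

Because the check is a pure function of the simulation output, it can be re-run unchanged on every new model version.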

Other functions, relying on high-resolution quantitative analysis, should be able to identify potential weaknesses in the system architecture by locating bottlenecks and suggesting ways to mitigate them in subsequent versions of the system model. Another important part of this step is the optimal configuration finder. Based on the previously produced quantitative data sets, optimization algorithms and statistical methods, the corresponding functions must determine an optimal component configuration, the minimum acceptable reliability values of the components, the permissible modes of operation and an optimal subsystem utilization pattern. All of this has to hold under different load and unavailability conditions, maximizing system performance while satisfying the minimum safety requirements.
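The following toy version of the bottleneck search and the optimal configuration finder rests on strong simplifying assumptions: three hypothetical subsystems in series with independent failures, identical parallel copies for redundancy, a per-unit cost standing in for resource usage, and no performance model. Birnbaum importance flags the bottleneck, and exhaustive search finds the cheapest redundancy configuration that meets a failure-probability target. The text does not confirm either technique as the Fraunhofer IKS method.

```python
import itertools
import math

# Hypothetical subsystems in series: name -> (failure probability of one unit, cost per unit)
SUBSYSTEMS = {"sensor": (1e-2, 2.0), "compute": (1e-3, 5.0), "actuator": (5e-3, 3.0)}


def subsystem_reliability(name, copies):
    """A subsystem of identical parallel copies fails only if every copy fails."""
    p, _ = SUBSYSTEMS[name]
    return 1.0 - p ** copies


def system_failure(config):
    """Series structure: the system fails if any subsystem fails (independence assumed)."""
    return 1.0 - math.prod(subsystem_reliability(s, n) for s, n in config.items())


def birnbaum_importance(config):
    """Birnbaum importance P(fail | subsystem failed) - P(fail | subsystem works).
    For a series system this equals the product of the other subsystems' reliabilities."""
    return {s: math.prod(subsystem_reliability(o, n) for o, n in config.items() if o != s)
            for s in config}


def cheapest_configuration(target, max_copies=3):
    """Exhaustive search for the cheapest redundancy levels meeting the failure target."""
    names = list(SUBSYSTEMS)
    best = None
    for copies in itertools.product(range(1, max_copies + 1), repeat=len(names)):
        config = dict(zip(names, copies))
        cost = sum(SUBSYSTEMS[s][1] * n for s, n in config.items())
        if system_failure(config) <= target and (best is None or cost < best[0]):
            best = (cost, config)
    return best  # None if no configuration meets the target


baseline = {name: 1 for name in SUBSYSTEMS}
importance = birnbaum_importance(baseline)
print("bottleneck:", max(importance, key=importance.get))
print("cheapest configuration:", cheapest_configuration(target=1e-3))
```

Exhaustive search is only feasible for a handful of subsystems. Larger design spaces would call for the optimization and statistical methods mentioned above.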

© Fraunhofer IKS

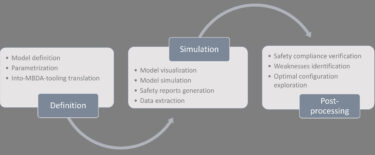

High-level view of the automated safety analysis process.


Process summary

The figure illustrates all of the key features of the process. It is a preview, not a final and exhaustive set of the capabilities of the proposed fluent safety approach. The framework is flexible enough to accommodate additional design and analysis options depending on the project.

This post is intended to show the overall philosophy of adaptive and flexible analysis, which can accompany the design process of any technical system throughout its life cycle. The process takes a step further toward dynamic dependability analysis and uncertainty quantification. During the design phase, it is important to rely on a framework that automatically translates the performance-oriented system model into the safety model. The subsequent multifaceted formal analysis can feed relevant fine-grained data matrices back into the original model for verification purposes. Together with the performance model, this verification enables automatic system adaptation and modification. Further simulation then shows how safety-relevant changes affect performance.
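One common way to quantify uncertainty in a dependability result is Monte Carlo propagation of uncertain input parameters. The sketch below assumes lognormally distributed basic-event probabilities, parameterized by a median and an error factor, together with a hypothetical three-event fault tree. It reports the mean, median and 95th percentile of the top-event probability instead of a single point value.

```python
import math
import random
import statistics


def sample_lognormal(median, error_factor, rng):
    """Sample a probability from a lognormal distribution given its median and
    error factor (EF = 95th percentile / median); capped at 1.0."""
    sigma = math.log(error_factor) / 1.645  # 1.645 = standard normal 95th percentile
    return min(1.0, rng.lognormvariate(math.log(median), sigma))


def top_event(p_a, p_b, p_c):
    """Hypothetical tree TOP = (A AND B) OR C with independent basic events."""
    return 1.0 - (1.0 - p_a * p_b) * (1.0 - p_c)


def propagate(n_samples=10_000, seed=1):
    rng = random.Random(seed)  # fixed seed for reproducible results
    samples = sorted(
        top_event(sample_lognormal(1e-3, 3.0, rng),
                  sample_lognormal(1e-3, 3.0, rng),
                  sample_lognormal(1e-4, 10.0, rng))
        for _ in range(n_samples)
    )
    return {
        "mean": statistics.fmean(samples),
        "median": samples[n_samples // 2],
        "p95": samples[int(0.95 * n_samples)],
    }


print(propagate())
```

A wide gap between the median and the 95th percentile indicates that input uncertainty, not only the point estimate, should inform the safety-to-performance decision.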

Jointly processing the performance and safety data makes it possible to find the best safety-to-performance compromise at the end of the design process. To put this process into practice, we are also developing and testing candidate algorithms and metrics that will facilitate the creation of fluent E2E architectures for safety-critical applications in the future.
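Finding a safety-to-performance compromise can be framed as a two-objective selection problem. The sketch keeps only Pareto-optimal designs, meaning those that no other candidate beats in both failure probability and throughput. It then picks the highest-throughput design within a safety bound. The candidates, metrics and bound are hypothetical.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    failure_probability: float  # from the safety analysis, lower is better
    throughput: float           # from the performance model, higher is better


def dominates(a, b):
    """a dominates b if it is no worse in both metrics and strictly better in one."""
    no_worse = a.failure_probability <= b.failure_probability and a.throughput >= b.throughput
    better = a.failure_probability < b.failure_probability or a.throughput > b.throughput
    return no_worse and better


def pareto_front(candidates):
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


def best_compromise(candidates, max_failure_probability):
    """Highest-throughput Pareto-optimal design that meets the safety bound (or None)."""
    feasible = [c for c in pareto_front(candidates)
                if c.failure_probability <= max_failure_probability]
    return max(feasible, key=lambda c: c.throughput, default=None)


designs = [
    Candidate("A", 1e-4, 120.0),
    Candidate("B", 1e-5, 100.0),
    Candidate("C", 1e-5, 90.0),  # dominated by B
    Candidate("D", 1e-6, 60.0),
]
print([c.name for c in pareto_front(designs)])
print(best_compromise(designs, max_failure_probability=2e-5))
```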

This activity is being funded by the Bavarian Ministry for Economic Affairs, Regional Development and Energy as part of a project to support the thematic development of the Institute for Cognitive Systems.